
Overview of Multi-Clustering

- Multi-Clustering allows running several Slurm clusters from the same control node.

- In this case, different slurmctld daemons run on the same machine, and users can target commands to any (or all) of the clusters.

- From an architecture standpoint, each cluster still relies on its own Slurm controller.

- From an end-user standpoint, users can submit jobs that run not only on their local Slurm cluster but also on the extra resources provided by the additional Slurm clusters.

Multi-Cluster Slurm Operation

- Multi-cluster Slurm operation lets users submit a job that can run on any of the available clusters, instead of or in addition to the local one, and receive status information from those remote clusters.

[...]# sacctmgr list cluster
Cluster  ControlHost  ControlPort  RPC  Share  GrpJobs  GrpTRES  GrpSubmit  MaxJobs  MaxTRES  MaxSubmit  MaxWall  QOS     Def QOS
-------  -----------  -----------  ---  -----  -------  -------  ---------  -------  -------  ---------  -------  ------  -------
vsc4     X.X.X.X      XX           XX   1                                                                         normal
vsc5     X.X.X.X      XX           XX   1                                                                         normal

Basic Multi-Cluster Slurm commands

Slurm client commands offer the “-M, --clusters=” option to communicate with a list of clusters. The argument that follows “-M” or “--clusters=” is a cluster name or a comma-separated list of cluster names; sinfo, squeue and sbatch also accept the special value “all” to target every cluster.
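As a rough illustration of how a -M/--clusters value is interpreted, the Python sketch below splits a comma-separated list and expands the special value all. The helper name parse_clusters_option and the decision to reject unregistered names with a ValueError are assumptions made for this example; Slurm does this parsing itself inside its client commands.

```python
def parse_clusters_option(value: str, registered: list[str]) -> list[str]:
    """Hypothetical helper: expand a -M/--clusters value into cluster names.

    Names are comma separated; the special value "all" selects every
    cluster registered in the database.
    """
    names = [name.strip() for name in value.split(",") if name.strip()]
    if names == ["all"]:
        return list(registered)
    # Assumption: unknown names are treated as an error.
    unknown = [name for name in names if name not in registered]
    if unknown:
        raise ValueError(f"unknown cluster(s): {', '.join(unknown)}")
    return names


# Cluster names taken from the sacctmgr listing above.
REGISTERED = ["vsc4", "vsc5"]
print(parse_clusters_option("vsc4,vsc5", REGISTERED))  # ['vsc4', 'vsc5']
print(parse_clusters_option("all", REGISTERED))        # ['vsc4', 'vsc5']
```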

Use sinfo -M vsc4,vsc5 to view the state of the partitions and nodes on the two clusters (vsc4, vsc5):

[...]# sinfo -M vsc4,vsc5
CLUSTER: vsc4
PARTITION         AVAIL  TIMELIMIT  NODES  STATE  NODELIST
jupyter              up   infinite      3   idle  n4905-025,n4906-020,n4912-072
skylake_0768         up   infinite      9  alloc  n4911-[011-012,023-024,035-036,047-048,060]
...

CLUSTER: vsc5
PARTITION         AVAIL  TIMELIMIT  NODES  STATE  NODELIST
cascadelake_0384     up   infinite      5   unk*  n452-[001,003-004,007-008]
zen3_2048            up   infinite      9  down*  n3511-[011-013,015-020]
...
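The NODELIST column uses Slurm's compact hostlist notation (for example n3511-[011-013,015-020]). On a real system, scontrol show hostnames expands such an expression. The Python sketch below is a simplified, hypothetical re-implementation that assumes at most one bracket group per host entry, which is enough to cross-check the NODES counts in the output above.

```python
import re


def expand_hostlist(expr: str) -> list[str]:
    """Expand a simplified Slurm hostlist such as "n452-[001,003-004]".

    Simplification (assumption): each comma-separated host entry has at
    most one trailing bracket group. `scontrol show hostnames` handles
    the full syntax on a real system.
    """
    hosts: list[str] = []
    # Split on commas that are not inside square brackets.
    for entry in re.findall(r"[^,\[]+(?:\[[^\]]*\])?", expr):
        match = re.fullmatch(r"(.*)\[(.*)\]", entry)
        if match is None:  # plain host name, e.g. n4905-025
            hosts.append(entry)
            continue
        prefix, ranges = match.groups()
        for item in ranges.split(","):
            first, _, last = item.partition("-")
            width = len(first)  # keep zero padding such as "011"
            for number in range(int(first), int(last or first) + 1):
                hosts.append(f"{prefix}{number:0{width}d}")
    return hosts


# NODELIST values and NODES counts copied from the sinfo output above.
SINFO_ROWS = {
    "jupyter": ("n4905-025,n4906-020,n4912-072", 3),
    "skylake_0768": ("n4911-[011-012,023-024,035-036,047-048,060]", 9),
    "cascadelake_0384": ("n452-[001,003-004,007-008]", 5),
    "zen3_2048": ("n3511-[011-013,015-020]", 9),
}
for partition, (nodelist, nodes) in SINFO_ROWS.items():
    assert len(expand_hostlist(nodelist)) == nodes, partition

print(expand_hostlist("n452-[001,003-004,007-008]"))
# ['n452-001', 'n452-003', 'n452-004', 'n452-007', 'n452-008']
```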

Use squeue -M vsc4,vsc5 to list the jobs submitted to the two clusters, together with their state and resource allocation. The most important job reason codes returned by squeue are described on the page doku:slurm_job_reason_codes.

[...]# squeue -M vsc4,vsc5
CLUSTER: vsc4
 JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
178418 skylake_0 V_0.2_U_   nobody PD       0:00      1 n4905-025,n4906-020
178420 skylake_0 V_0.3_U_   nobody PD       0:00      1 n4905-025,n4906-020
...

CLUSTER: vsc5
 JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
...
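When the output of squeue -M is processed by a script, each cluster's jobs appear under their own "CLUSTER: <name>" line. The Python sketch below groups the default layout shown above into one list of rows per cluster. The function name split_by_cluster is hypothetical, and it assumes job names contain no spaces; for scripting, an explicit squeue --format string is more robust than the default layout.

```python
def split_by_cluster(output: str) -> dict[str, list[dict[str, str]]]:
    """Group multi-cluster squeue output into {cluster: [job rows]}.

    Assumes the default layout: a "CLUSTER: <name>" line, a header line,
    then one whitespace-separated row per job.
    """
    jobs: dict[str, list[dict[str, str]]] = {}
    cluster = None
    header: list[str] = []
    for raw in output.splitlines():
        line = raw.strip()
        if not line or line == "...":
            continue  # skip blank lines and elided rows
        if line.startswith("CLUSTER:"):
            cluster = line.split(":", 1)[1].strip()
            jobs[cluster] = []
            header = []
        elif cluster is None:
            continue  # ignore anything printed before the first cluster
        elif not header:
            header = line.split()
        else:
            jobs[cluster].append(dict(zip(header, line.split())))
    return jobs


SAMPLE = """\
CLUSTER: vsc4
 JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
178418 skylake_0 V_0.2_U_   nobody PD       0:00      1 n4905-025,n4906-020
178420 skylake_0 V_0.3_U_   nobody PD       0:00      1 n4905-025,n4906-020

CLUSTER: vsc5
 JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
"""
jobs = split_by_cluster(SAMPLE)
print({name: [row["JOBID"] for row in rows] for name, rows in jobs.items()})
# {'vsc4': ['178418', '178420'], 'vsc5': []}
```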

Use scontrol -M vsc4 (or scontrol -M vsc5) to view or modify the Slurm configuration and state, including jobs, job steps, nodes, partitions, reservations, and the overall system configuration. If no command is given on the command line, scontrol runs in interactive mode and prompts for input; if a command is given, scontrol executes it and exits. Unlike sinfo and squeue, scontrol accepts only one cluster at a time with -M.

[...]# scontrol -M vsc4 show job 178418
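The difference between commands that accept a cluster list and scontrol, which accepts a single cluster, can be captured in a small wrapper when these commands are called from scripts. The Python sketch below only builds the argument list; the helper name slurm_cmd and the set of commands it covers are assumptions for this example, and other Slurm commands that also accept -M are deliberately left out.

```python
import shlex

# Commands covered by this sketch whose -M accepts a comma-separated list.
MULTI_CLUSTER_COMMANDS = {"sinfo", "squeue", "sbatch"}


def slurm_cmd(command: str, clusters: list[str], *args: str) -> list[str]:
    """Build an argv list for a Slurm client command (hypothetical helper)."""
    if not clusters:
        raise ValueError("at least one cluster is required")
    if command == "scontrol":
        if len(clusters) != 1:
            raise ValueError("scontrol -M accepts exactly one cluster")
    elif command not in MULTI_CLUSTER_COMMANDS:
        raise ValueError(f"{command} is not covered by this sketch")
    return [command, "-M", ",".join(clusters), *args]


print(shlex.join(slurm_cmd("squeue", ["vsc4", "vsc5"])))
# squeue -M vsc4,vsc5
print(shlex.join(slurm_cmd("scontrol", ["vsc4"], "show", "job", "178418")))
# scontrol -M vsc4 show job 178418
```

On a node with the Slurm client tools installed, the returned list can be passed directly to subprocess.run, which avoids shell quoting issues.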

Multi-Cluster job submission

Assume a submission script job.sh. Without -M, sbatch submits it to the local cluster (the cluster on which the command is invoked):

[username@node ~]$ sbatch job.sh

Or

[username@node ~]$ sbatch -M vsc4,vsc5 job.sh

This submits the job to a list of possible clusters; Slurm places it on the one cluster expected to start it the soonest. For this to work, the SlurmDBD must be running and authentication between the clusters must be operational.
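The selection rule can be pictured as picking the minimum over per-cluster start estimates. In the Python sketch below, the estimates are hypothetical inputs; in Slurm, each cluster computes its own estimate for the job, similar to what sbatch --test-only reports. The function name pick_cluster is also hypothetical.

```python
from datetime import datetime


def pick_cluster(expected_start: dict[str, datetime]) -> str:
    """Return the cluster whose expected start time is the earliest."""
    if not expected_start:
        raise ValueError("no candidate clusters")
    # min() keeps the first cluster listed if two estimates are equal.
    return min(expected_start, key=expected_start.__getitem__)


# Hypothetical start estimates for `sbatch -M vsc4,vsc5 job.sh`.
estimates = {
    "vsc4": datetime(2024, 1, 8, 12, 30),
    "vsc5": datetime(2024, 1, 8, 12, 5),
}
print(pick_cluster(estimates))  # vsc5
```

With a single cluster name (-M vsc4) there is nothing to choose, and the job goes to that cluster.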

[username@node ~]$ sbatch -M vsc4 job.sh

This submits the job to one specific cluster (here vsc4; use -M vsc5 to target vsc5).

Federated Slurm

Federation is based on the multi-cluster Slurm implementation, but it aggregates the independent Slurm clusters into one system and works in a peer-to-peer way. In a federation, a job is submitted to the local cluster (the one on which the command is invoked) and is then replicated across the clusters in the federation. Each cluster then independently attempts to schedule the job based on its own scheduling policies, and the clusters coordinate with the “origin” cluster (the cluster the job was submitted to) to schedule it.

- Federation is used to unify the job ID and scheduling information among all clusters in the federation

- Multiple, independent clusters can be used as one global resource

- Slurmdbd pushes updates to all clusters in the federation

- A cluster can only be part of one federation at a time

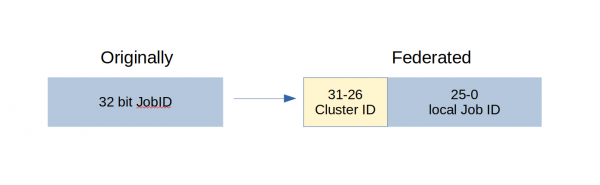

- The origin cluster ID is embedded in the 32-bit job ID: bits 0-25 hold the local job ID and bits 26-31 hold the origin cluster ID
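The bit layout described above can be checked with a few lines of arithmetic. The Python sketch below packs and unpacks federated job IDs; the function names are hypothetical, and the lower bound of 1 for cluster IDs is an assumption. Decoding the job IDs 67109080 and 134217981 from the federated squeue output on this page yields origin cluster IDs 1 and 2, which match the IDs of vsc4 and vsc5 in the sacctmgr withfed listing.

```python
LOCAL_ID_BITS = 26                        # bits 0-25: local job ID
LOCAL_ID_MASK = (1 << LOCAL_ID_BITS) - 1  # 0x03FFFFFF
MAX_CLUSTER_ID = (1 << 6) - 1             # bits 26-31: origin cluster ID (max 63)


def make_fed_job_id(cluster_id: int, local_id: int) -> int:
    """Combine an origin cluster ID and a local job ID into one 32-bit ID."""
    # Assumption: cluster IDs inside a federation start at 1.
    if not 1 <= cluster_id <= MAX_CLUSTER_ID:
        raise ValueError("cluster ID must be between 1 and 63")
    if not 0 < local_id <= LOCAL_ID_MASK:
        raise ValueError("local job ID must fit in 26 bits")
    return (cluster_id << LOCAL_ID_BITS) | local_id


def split_fed_job_id(job_id: int) -> tuple[int, int]:
    """Return (origin cluster ID, local job ID) for a federated job ID."""
    return job_id >> LOCAL_ID_BITS, job_id & LOCAL_ID_MASK


# Job IDs taken from the federated squeue output on this page.
print(split_fed_job_id(67109080))   # (1, 216)
print(split_fed_job_id(134217981))  # (2, 253)
```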

[...]# sacctmgr list cluster withfed
Cluster  ControlHost  ControlPort  RPC  Share  GrpSubmit  MaxJobs  MaxTRES  MaxSubmit  MaxWall  QOS     Def QOS  Federation  ID  Features    FedState
-------  -----------  -----------  ---  -----  ---------  -------  -------  ---------  -------  ------  -------  ----------  --  ----------  --------
vsc4     X.X.X.X      X            X    1                                                       normal           vscdev_fed  1   synced:yes  ACTIVE
vsc5     X.X.X.X      X            X    1                                                       normal           vscdev_fed  2   synced:yes  ACTIVE

Federation Job Submission

When a federated cluster receives a job submission, it will submit copies of the job (sibling jobs) to each eligible cluster. Each cluster will then independently attempt to schedule the job. Once a sibling job is started, the origin cluster revokes the remaining sibling jobs.
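The sibling-job life cycle described above behaves like a small state machine: every eligible cluster holds a pending copy, the first copy to start wins, and the origin revokes the rest. The Python sketch below is a toy model of that rule, not Slurm's implementation; the class and method names are hypothetical, and the messages exchanged between the slurmctld daemons are abstracted away.

```python
from dataclasses import dataclass, field


@dataclass
class FederatedJob:
    """Toy model of a federated job and its sibling copies (not Slurm code)."""

    job_id: int
    origin: str
    siblings: dict[str, str] = field(default_factory=dict)  # cluster -> state

    def submit(self, eligible: list[str]) -> None:
        # The origin cluster places a pending sibling on every eligible cluster.
        for cluster in eligible:
            self.siblings[cluster] = "PENDING"

    def start_on(self, cluster: str) -> None:
        # The first sibling to start wins; the origin revokes all the others.
        if "RUNNING" in self.siblings.values():
            raise RuntimeError("job already started on another cluster")
        if self.siblings.get(cluster) != "PENDING":
            raise ValueError(f"no pending sibling on {cluster}")
        for name in self.siblings:
            self.siblings[name] = "RUNNING" if name == cluster else "REVOKED"


job = FederatedJob(job_id=67109080, origin="vsc4")
job.submit(["vsc4", "vsc5"])
job.start_on("vsc5")
print(job.siblings)  # {'vsc4': 'REVOKED', 'vsc5': 'RUNNING'}
```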

Job submission works the same way as in the multi-cluster setup. In addition, the --cluster-constraint=[!]<constraint_list> option restricts sibling jobs to the clusters in the federation that have at least one of the listed features or, when the list is prefixed with “!”, none of them.
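The selection rule of --cluster-constraint can be sketched as a set test per cluster. In the Python example below, the cluster features are hypothetical (the sacctmgr listing above only shows synced:yes), and the function name eligible_clusters is illustrative; the "at least one" and "none of" semantics follow the sbatch documentation for this option.

```python
def eligible_clusters(constraint: str, features: dict[str, set[str]]) -> list[str]:
    """Clusters that would receive a sibling job for --cluster-constraint.

    "a,b" keeps clusters having at least one of the listed features,
    "!a,b" keeps clusters having none of them.
    """
    negate = constraint.startswith("!")
    body = constraint[1:] if negate else constraint
    wanted = {name.strip() for name in body.split(",") if name.strip()}
    # A cluster qualifies when "has any wanted feature" differs from "negate".
    return [
        cluster
        for cluster, cluster_features in features.items()
        if bool(wanted & cluster_features) != negate
    ]


# Hypothetical cluster features.
FEATURES = {"vsc4": {"skylake"}, "vsc5": {"zen3", "cascadelake"}}
print(eligible_clusters("zen3", FEATURES))          # ['vsc5']
print(eligible_clusters("!zen3", FEATURES))         # ['vsc4']
print(eligible_clusters("skylake,zen3", FEATURES))  # ['vsc4', 'vsc5']
```

With such features in place, a submission like sbatch --cluster-constraint=zen3 job.sh would create a sibling job only on vsc5 under these assumptions.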

[...]# squeue -M vsc4,vsc5
CLUSTER: vsc4
    JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
 67109080 skylake_0 V_0.3_U_   nobody PD       0:05      3 n4905-025,n4906-020

CLUSTER: vsc5
    JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
134217981 zen3_2048            nobody PD       0:25      8 n3511-[011-013,015-020]

[root@node]# scontrol show fed --sibling job
Federation: vscdev_fed
Self:       vsc4:X.X.X.X:X ID:2 FedState:ACTIVE Features:synced:yes
Sibling:    vsc5:X.X.X.X:X ID:1 FedState:ACTIVE Features:synced:yes PersistConnSend/Recv:Yes/Yes Synced:Yes

Multi-Cluster vs Federation implementation

In short, multi-cluster operation is a single interface for submitting jobs to several separate Slurm clusters, and the Slurm database can be shared by all clusters or dedicated to each one. Federation instead merges job and scheduling information into a single view, which requires one Slurm database shared by all clusters in the federation.