
Storage infrastructure

- Article written by Siegfried Reinwald (VSC Team) (last update 2019-01-15 by sh).

Storage hardware VSC-3

- Storage on VSC-3
  - 10 servers for $HOME
  - 8 servers for $GLOBAL
  - 16 servers for $BINFS/$BINFL
  - ~800 spinning disks
  - ~100 SSDs

Storage targets

- Several storage targets on VSC-3: $HOME, $TMPDIR, $SCRATCH, $GLOBAL, $BINFS, $BINFL
- For different purposes:
  - Random I/O
  - Small files
  - Huge files / streaming data

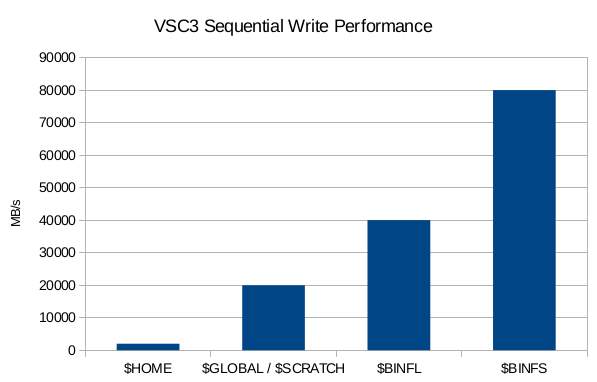
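To check which path each storage target maps to for your account, you can print the environment variables listed above. This is a minimal Bash sketch; the function name `show_storage_targets` is hypothetical (not a VSC tool), and outside a batch job some variables (for example $TMPDIR) may not be set.

```shell
# show_storage_targets (hypothetical helper): print the path behind each
# storage-target environment variable, or <unset> if it is not exported
show_storage_targets() {
    local var path
    for var in HOME TMPDIR SCRATCH GLOBAL BINFS BINFL; do
        # printenv exits non-zero when the variable is not in the environment
        if path=$(printenv "$var"); then
            printf '%-8s %s\n' "$var" "$path"
        else
            printf '%-8s %s\n' "$var" '<unset>'
        fi
    done
}
```

Calling `show_storage_targets` in a login shell or inside a job script prints one line per target.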

Storage performance

The HOME Filesystem (VSC-3)

- Use for non-I/O-intensive jobs
- Basically NFS exports over InfiniBand (no RDMA)
- Logical volumes of projects are distributed among the servers
  - Each logical volume belongs to one NFS server
- Accessible via the $HOME environment variable: /home/lv70XXX/username

The GLOBAL and SCRATCH filesystems

- Use for I/O-intensive jobs
- ~500 TB of space (default quota is 500 GB/project)
  - Can be increased on request (subject to availability)
- BeeGFS filesystem
- Accessible via the $GLOBAL and $SCRATCH environment variables:
  - $GLOBAL: /global/lv70XXX/username
  - $SCRATCH: /scratch

- Check quota

beegfs-ctl --getquota --cfgFile=/etc/beegfs/global3.d/beegfs-client.conf --gid 70XXX

VSC-3 > beegfs-ctl --getquota --cfgFile=/etc/beegfs/global3.d/beegfs-client.conf --gid 70824

user/group || size || chunk files

name | id || used | hard || used | hard

--------------|------||------------|------------||---------|---------

p70824| 70824|| 0 Byte| 500.00 GiB|| 0| 100000

The BINFL filesystem

- Specifically designed for bioinformatics applications
- Use for I/O-intensive jobs
- ~1 PB of space (default quota is 10 GB/project)
  - Can be increased on request (subject to availability)
- BeeGFS filesystem
- Accessible via the $BINFL environment variable: /binfl/lv70XXX/username
- Also available on VSC-4

- Check quota

beegfs-ctl --getquota --cfgFile=/etc/beegfs/hdd_storage.d/beegfs-client.conf --gid 70XXX

VSC-3 > beegfs-ctl --getquota --cfgFile=/etc/beegfs/hdd_storage.d/beegfs-client.conf --gid 70824

user/group || size || chunk files

name | id || used | hard || used | hard

--------------|------||------------|------------||---------|---------

p70824| 70824|| 5.93 MiB| 10.00 GiB|| 574| 1000000
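The beegfs-ctl output reports absolute numbers only. To see how close a project is to its limits, a small awk filter can turn each data row into percentages. This is a minimal sketch that assumes the column layout shown in the sample output (name | id || used | hard || used | hard) and the unit suffixes Byte, KiB, MiB, GiB, TiB and PiB; other BeeGFS versions may format the table differently. The function name `beegfs_quota_usage` is hypothetical.

```shell
# beegfs_quota_usage (hypothetical helper): read beegfs-ctl --getquota output
# on stdin and print, for every data row, how much of the size and
# chunk-file limits is already used.
beegfs_quota_usage() {
    awk -F'|' '
    # Convert "5.93 MiB", "500.00 GiB", "0 Byte", ... to bytes
    function to_bytes(s,    f) {
        split(s, f, " ")
        if (f[2] == "KiB") return f[1] * 1024
        if (f[2] == "MiB") return f[1] * 1024 ^ 2
        if (f[2] == "GiB") return f[1] * 1024 ^ 3
        if (f[2] == "TiB") return f[1] * 1024 ^ 4
        if (f[2] == "PiB") return f[1] * 1024 ^ 5
        return f[1] + 0           # "Byte"; non-numeric limits become 0
    }
    # Percentage as text, or "n/a" when there is no usable limit
    function pct(used, hard) {
        return (hard > 0) ? sprintf("%.2f%%", 100 * used / hard) : "n/a"
    }
    # Data rows have 8 fields and a numeric id in field 2 (skips header rows)
    NF >= 8 && $2 ~ /^ *[0-9]+ *$/ {
        name = $1
        gsub(/ /, "", name)
        printf "%s: size %s used, chunk files %s used\n",
               name, pct(to_bytes($4), to_bytes($5)), pct($7 + 0, $8 + 0)
    }'
}
```

For example, piping `beegfs-ctl --getquota --cfgFile=/etc/beegfs/hdd_storage.d/beegfs-client.conf --gid 70824` into `beegfs_quota_usage` turns the sample row above into `p70824: size 0.06% used, chunk files 0.06% used`.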

The BINFS filesystem

- Specifically designed for bioinformatics applications
- Use for very I/O-intensive jobs
- ~100 TB of space (default quota is 2 GB/project)
  - Can be increased on request (subject to availability)
- BeeGFS filesystem
- Accessible via the $BINFS environment variable: /binfs/lv70XXX/username
- Also available on VSC-4

- Check quota

beegfs-ctl --getquota --cfgFile=/etc/beegfs/nvme_storage.d/beegfs-client.conf --gid 70XXX

VSC-3 > beegfs-ctl --getquota --cfgFile=/etc/beegfs/nvme_storage.d/beegfs-client.conf --gid 70824

user/group || size || chunk files

name | id || used | hard || used | hard

--------------|------||------------|------------||---------|---------

p70824| 70824|| 0 Byte| 2.00 GiB|| 0| 2000
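The three BeeGFS quota checks differ only in the client configuration file passed to beegfs-ctl. A small wrapper can hide that detail. This is a hypothetical convenience sketch (not a VSC tool) that uses exactly the configuration paths from the commands above and expects the numeric project group id as its second argument.

```shell
# beegfs_cfg_for TARGET (hypothetical): print the beegfs-client.conf for a target
beegfs_cfg_for() {
    case $1 in
        GLOBAL) echo /etc/beegfs/global3.d/beegfs-client.conf ;;
        BINFL)  echo /etc/beegfs/hdd_storage.d/beegfs-client.conf ;;
        BINFS)  echo /etc/beegfs/nvme_storage.d/beegfs-client.conf ;;
        *)      echo "unknown target '$1' (use GLOBAL, BINFL or BINFS)" >&2
                return 1 ;;
    esac
}

# beegfs_quota TARGET GID (hypothetical): query the quota of one project
beegfs_quota() {
    local cfg
    cfg=$(beegfs_cfg_for "$1") || return 1
    beegfs-ctl --getquota --cfgFile="$cfg" --gid "$2"
}
```

With these definitions, `beegfs_quota BINFS 70824` runs the same command as the BINFS example above.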

The TMP filesystem

- Use for
  - Random I/O
  - Many small files
- Size is up to 50% of main memory
- Data gets deleted after the job
  - Write results to $HOME or $GLOBAL
- Disadvantages

  - Space is consumed from main memory

- Accessible via the $TMPDIR environment variable
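Because $TMPDIR is wiped when the job ends, a common pattern is to stage input data in, do the random or small-file I/O there, and copy the results back to $HOME or $GLOBAL before the job finishes. The following is a minimal Bash sketch of that pattern; the function name `run_in_tmpdir` and its argument order are hypothetical.

```shell
# run_in_tmpdir INPUT_DIR RESULT_DIR COMMAND [ARGS...]   (hypothetical helper)
# Copies INPUT_DIR into a fresh directory below $TMPDIR, runs COMMAND there,
# then copies the whole working directory to RESULT_DIR (e.g. on $GLOBAL).
run_in_tmpdir() {
    local input=$1 results=$2 work status=0
    shift 2
    if [ -z "${TMPDIR:-}" ]; then
        echo "TMPDIR is not set (are you running inside a job?)" >&2
        return 1
    fi
    work=$(mktemp -d "$TMPDIR/work.XXXXXX") || return 1
    cp -r "$input"/. "$work"/ || return 1
    # Run the command with the in-memory directory as working directory
    ( cd "$work" && "$@" ) || status=$?
    # Copy back even if COMMAND failed, so partial results survive the job
    mkdir -p "$results" && cp -r "$work"/. "$results"/ || return 1
    rm -rf "$work"
    return "$status"
}
```

In a job script this could be called as `run_in_tmpdir "$GLOBAL/input" "$GLOBAL/results" ./my_program`, where the directory names and `./my_program` are placeholders. Keep in mind that everything staged in $TMPDIR counts against the job's main memory.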

Storage hardware VSC-4

- Storage on VSC-4
  - 1 server for $HOME
  - 6 servers for $DATA
  - 720 spinning disks
  - 16 NVMe flash drives

The HOME Filesystem (VSC-4)

- Use for software and job scripts
- Default quota: 100 GB
- Accessible via the $HOME environment variable (VSC-4): /home/fs70XXX/username
- Also available on VSC-3: /gpfs/home/fs70XXX/username

- Check quota

mmlsquota --block-size auto -j home_fs70XXX home

VSC-4 > mmlsquota --block-size auto -j home_fs70824 home

Block Limits | File Limits

Filesystem type blocks quota limit in_doubt grace | files quota limit in_doubt

home FILESET 63.7M 100G 100G 0 none | 3822 1000000 1000000 0

The DATA Filesystem

- Use for all kinds of I/O
- Default quota: 10 TB
  - An extension can be requested
- Accessible via the $DATA environment variable (VSC-4): /data/fs70XXX/username
- Also available on VSC-3: /gpfs/data/fs70XXX/username

- Check quota

mmlsquota --block-size auto -j data_fs70XXX data

VSC-4 > mmlsquota --block-size auto -j data_fs70824 data

Block Limits | File Limits

Filesystem type blocks quota limit in_doubt grace | files quota limit in_doubt

data FILESET 0 9.766T 9.766T 0 none | 14 1000000 1000000 0
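Both mmlsquota examples follow the same naming scheme: the fileset is the filesystem name followed by "_fs" and the project number, and the filesystem name is repeated as the last argument. A small hypothetical wrapper can build that name for you; it only encodes the pattern visible in the two examples above and is not an official VSC tool.

```shell
# gpfs_fileset_for FILESYSTEM PROJECT (hypothetical): build the fileset name
# used in the mmlsquota examples, e.g. "data 70824" -> data_fs70824
gpfs_fileset_for() {
    case $1 in
        home|data) ;;
        *) echo "unknown filesystem '$1' (use home or data)" >&2; return 1 ;;
    esac
    case $2 in
        ''|*[!0-9]*) echo "project must be numeric, e.g. 70824" >&2; return 1 ;;
    esac
    printf '%s_fs%s\n' "$1" "$2"
}

# gpfs_quota FILESYSTEM PROJECT (hypothetical): run mmlsquota for that fileset
gpfs_quota() {
    local fileset
    fileset=$(gpfs_fileset_for "$1" "$2") || return 1
    mmlsquota --block-size auto -j "$fileset" "$1"
}
```

With these definitions, `gpfs_quota data 70824` runs the same command as the $DATA example above.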

Backup policy

- Backup of user files is solely the responsibility of each user

- Backed-up filesystems:
  - $HOME (VSC-3)
  - $HOME (VSC-4)
  - $DATA (VSC-4)
- Backups are performed on a best-effort basis
  - A full backup run takes ~3 days
- Backups are used for disaster recovery only
- The project manager can exclude the $DATA filesystem from backup

Storage exercises

In these exercises we try to measure the performance of the different storage targets on VSC-3. For that we will use the “IOR” application (https://github.com/LLNL/ior) which is a standard benchmark for distributed storage systems.

For these exercises, “IOR” has been built with gcc-4.9 and openmpi-1.10.2, so load these two modules first:

module purge

module load gcc/4.9 openmpi/1.10.2

Now copy the storage exercises into a directory of your own:

mkdir my_directory_name

cd my_directory_name

cp -r ~training/examples/08_storage_infrastructure/*Benchmark ./

Keep in mind that results will vary because other users are working on the same storage targets.

Exercise 1 - Sequential I/O

We will now measure the sequential performance of the different storage targets on VSC-3.

- With one process

cd 01_SequentialStorageBenchmark

sbatch 01a_one_process_per_target.slrm # submit the job

Then inspect the corresponding slurm-*.out files.

- With 8 processes

sbatch 01b_eight_processes_per_target.slrm # submit the job

Then inspect the corresponding slurm-*.out files.

Take your time and compare the outputs of the two runs. What conclusions can be drawn about the storage targets on VSC-3?
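IOR prints a summary line of the form "Max Write: 237.37 MiB/sec (248.91 MB/sec)" (the format used in the sample results for this exercise). To pull those values out of several job outputs at once, a short awk filter is enough. This is a minimal sketch; the function name `max_write_mib` is hypothetical, and how each value maps to a storage target depends on how the job scripts label their output, which is why the file name and line number are printed too.

```shell
# max_write_mib FILE... (hypothetical helper): print "<file>:<line> <MiB/sec>"
# for every IOR summary line such as "Max Write: 237.37 MiB/sec (248.91 MB/sec)"
max_write_mib() {
    awk '/Max Write:/ {
        for (i = 2; i <= NF; i++)
            if ($i == "MiB/sec") { print FILENAME ":" FNR, $(i - 1); break }
    }' "$@"
}
```

Running `max_write_mib slurm-*.out` in the exercise directory lists one value per IOR run.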

Exercise 1 - Sequential I/O performance discussion

Discuss the following questions with your partner:

- Which storage targets improve in performance as the number of processes increases? Why?

- What could you do to further improve sequential write throughput? What could be a problem with that?

- Bonus question: $TMPDIR seems to scale quite well with the number of processes, although it is an in-memory filesystem. Why is that happening?

Exercise 1 - Sequential I/O performance results (sample run)

Exercise 1a:

- HOME: Max Write: 237.37 MiB/sec (248.91 MB/sec)
- GLOBAL: Max Write: 925.64 MiB/sec (970.60 MB/sec)
- BINFL: Max Write: 1859.69 MiB/sec (1950.03 MB/sec)
- BINFS: Max Write: 1065.61 MiB/sec (1117.37 MB/sec)
- TMP: Max Write: 2414.70 MiB/sec (2531.99 MB/sec)

Exercise 1b:

- HOME: Max Write: 371.76 MiB/sec (389.82 MB/sec)
- GLOBAL: Max Write: 2195.28 MiB/sec (2301.91 MB/sec)
- BINFL: Max Write: 2895.24 MiB/sec (3035.88 MB/sec)
- BINFS: Max Write: 2950.23 MiB/sec (3093.54 MB/sec)
- TMP: Max Write: 16764.76 MiB/sec (17579.12 MB/sec)
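To compare the two runs, you can compute a speed-up per target: the 8-process throughput divided by the 1-process throughput. The following minimal awk-based sketch uses the sample MiB/sec values from Exercise 1a and 1b; the function name `scaling_table` is hypothetical, and your own numbers will differ.

```shell
# scaling_table (hypothetical helper): read "TARGET ONE_PROC EIGHT_PROC" lines
# (Max Write in MiB/sec) and print the 8-process speed-up per target
scaling_table() {
    awk '$2 > 0 { printf "%-6s %.2fx\n", $1, $3 / $2 }'
}

# Sample values from Exercise 1a/1b; replace them with your own results
scaling_table <<'EOF'
HOME     237.37   371.76
GLOBAL   925.64  2195.28
BINFL   1859.69  2895.24
BINFS   1065.61  2950.23
TMP     2414.70 16764.76
EOF
```

With these sample values, TMP gains about 6.94x from eight processes, BINFS about 2.77x and GLOBAL about 2.37x, while HOME (1.57x) and BINFL (1.56x) gain the least.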

Exercise 2 - Random I/O

We will now measure the storage performance for tiny 4-kilobyte random writes.

- With one process

cd ../02_RandomioStorageBenchmark

sbatch 02a_one_process_per_target.slrm # submit the job

Then inspect the corresponding slurm-*.out files.

- With 8 processes

sbatch 02b_eight_processes_per_target.slrm # submit the job

Then inspect the corresponding slurm-*.out files.

Take your time and compare the outputs of the two runs. Do additional processes speed up the I/O activity?

Now compare your results to the sequential runs in Exercise 1. What can be concluded about random versus sequential I/O on the VSC-3 storage targets?

Exercise 2 - Random I/O performance discussion

Discuss the following questions with your partner:

- Which storage targets on VSC-3 are especially suited for random I/O?

- Which storage targets should never be used for random I/O?

- You have a program that needs 32 GB of RAM and does heavy random I/O on a 10 GB file stored on $GLOBAL. How could you speed up your application?

- Bonus question: Why are SSDs so much faster than traditional disks when it comes to random I/O? (A modern datacenter SSD can deliver ~1000 times more IOPS than a traditional disk.)

Exercise 2 - Random I/O performance results (sample run)

Exercise 2a:

- HOME: Max Write: 216.06 MiB/sec (226.56 MB/sec)
- GLOBAL: Max Write: 56.13 MiB/sec (58.86 MB/sec)
- BINFL: Max Write: 42.70 MiB/sec (44.77 MB/sec)
- BINFS: Max Write: 41.39 MiB/sec (43.40 MB/sec)
- TMP: Max Write: 1428.41 MiB/sec (1497.80 MB/sec)

Exercise 2b:

- HOME: Max Write: 249.11 MiB/sec (261.21 MB/sec)
- GLOBAL: Max Write: 235.51 MiB/sec (246.95 MB/sec)
- BINFL: Max Write: 414.46 MiB/sec (434.59 MB/sec)
- BINFS: Max Write: 431.59 MiB/sec (452.55 MB/sec)
- TMP: Max Write: 10551.71 MiB/sec (11064.27 MB/sec)
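Random I/O is usually discussed in I/O operations per second (IOPS) rather than MiB/sec. Assuming each write is 4 KiB (4096 bytes), the conversion is IOPS = MiB/sec × 1024 / 4, i.e. MiB/sec × 256. The helper below is a hypothetical sketch of that arithmetic, applied to the single-process sample values from Exercise 2a.

```shell
# mib_to_iops MIB_PER_SEC [TRANSFER_KIB] (hypothetical helper)
# IOPS = throughput in KiB/sec divided by the size of one request in KiB.
# The transfer size defaults to 4 KiB, matching the 4 KiB random writes above.
mib_to_iops() {
    awk -v mib="$1" -v kib="${2:-4}" 'BEGIN { printf "%.0f\n", mib * 1024 / kib }'
}

# Single-process sample results from Exercise 2a
while read -r name mib; do
    printf '%-6s %8s IOPS\n' "$name" "$(mib_to_iops "$mib")"
done <<'EOF'
HOME     216.06
GLOBAL    56.13
BINFL     42.70
BINFS     41.39
TMP     1428.41
EOF
```

With these sample values, a single process writing 4 KiB blocks to $GLOBAL reaches roughly 14,400 IOPS, compared with about 365,700 IOPS on $TMPDIR.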