Singularity

- Article written by Jan Zabloudil (VSC Team), last update 2020-09-21 by jz.

Singularity is a container solution created out of necessity for scientific and application-driven workloads.

Singularity supports existing and traditional HPC resources.

Singularity natively supports InfiniBand and Lustre, and it works seamlessly with all resource managers (e.g. SLURM, SGE) because running a container works like running any other command on the system.

Singularity has built-in support for MPI and for containers that need to leverage GPU resources.

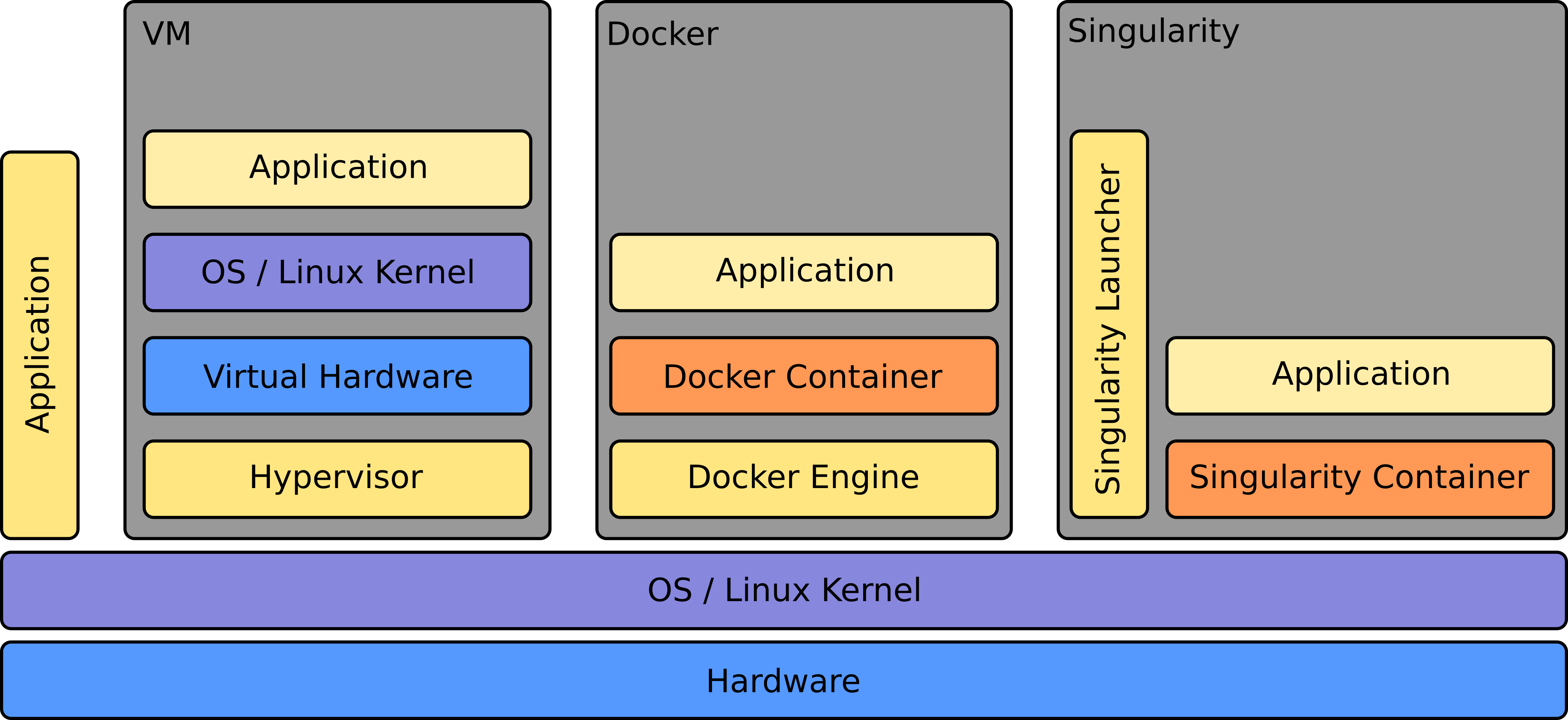

Virtualization vs. Containerization

Singularity - basic example

$ cat hello.py

import sys

print("Hello World: The Python version is %s.%s.%s" % sys.version_info[:3])

VSC-3 > module load go/1.11 singularity/3.4.1
VSC-4 > spack load singularity %gcc@9.1.0
VSC-4 > module load singularity/3.5.2-gcc-9.1.0-fp2564h

$ singularity exec docker://python:latest /usr/local/bin/python ./hello.py
Hello World: The Python version is 3.8.5

Singularity - basic job script examples on VSC

VSC-3:

#!/bin/bash
#
#SBATCH -J myjob
#SBATCH -o output.%j
#SBATCH -p mem_0064
#SBATCH -N 1

module load go/1.11 singularity/3.4.1
singularity exec docker://python:latest /usr/local/bin/python ./hello.py

VSC-4:

#!/bin/bash
#
#SBATCH -J myjob
#SBATCH -o output.%j
#SBATCH -p mem_0096
#SBATCH -N 1

spack load singularity %gcc@9.1.0
singularity exec docker://python:latest /usr/local/bin/python ./hello.py
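The two job scripts above differ only in the partition and in the command that makes Singularity available. As a minimal sketch of that observation (not part of the VSC tooling), the Python snippet below renders both scripts from the same template. The function name `render_job_script` and its parameters are hypothetical and chosen for illustration only.

```python
# Sketch: render a SLURM job script shaped like the VSC-3 / VSC-4 examples.
# render_job_script and its parameter names are hypothetical.

def render_job_script(partition, setup_cmd, run_cmd, job_name="myjob", nodes=1):
    """Return the text of a batch script with the directives used above."""
    lines = [
        "#!/bin/bash",
        "#",
        f"#SBATCH -J {job_name}",
        "#SBATCH -o output.%j",      # %j is expanded by SLURM to the job ID
        f"#SBATCH -p {partition}",
        f"#SBATCH -N {nodes}",
        "",
        setup_cmd,                   # makes the singularity command available
        run_cmd,                     # the actual containerized workload
    ]
    return "\n".join(lines) + "\n"


RUN = "singularity exec docker://python:latest /usr/local/bin/python ./hello.py"

# Values copied from the two job scripts above.
vsc3 = render_job_script("mem_0064", "module load go/1.11 singularity/3.4.1", RUN)
vsc4 = render_job_script("mem_0096", "spack load singularity %gcc@9.1.0", RUN)

print(vsc4)
```

The rendered text can be written to a file and submitted with `sbatch` in the usual way.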

Singularity - use an existing SIF (“Singularity Image File”)

Singularity can be particularly useful for GPU computing.

Advanced example: PyTorch (interactive)

VSC-3 > module load go/1.11 singularity/3.4.1 cuda/9.1.85
VSC-4 > spack load singularity %gcc@9.1.0
VSC-4 > module load singularity/3.5.2-gcc-9.1.0-fp2564h
export PATH=$PATH:/usr/sbin

singularity pull docker://anibali/pytorch:1.5.0-cuda9.2-ubuntu18.04

salloc -N1 -p gpu_gtx1080single
ssh n372-001
module load go/1.11 singularity/3.4.1 cuda/9.1.85
cd /path/to/container

singularity shell --nv --bind /opt/sw pytorch_1.5.0-cuda9.2-ubuntu18.04.sif

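--nv ... make the host's NVIDIA driver libraries and GPU devices available inside the container
--bind ... mount the given host directory (here /opt/sw) inside the container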
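The pull and shell commands above are connected by the image file name: the pulled `docker://` reference ends up as a `.sif` file named after the image and its tag. The Python sketch below shows that relationship and how the `--nv` and `--bind` options fit into the command line. The helper names `default_sif_name` and `singularity_shell_command` are hypothetical. The naming rule is inferred from the example above, and the fallback to `latest` for untagged references is an assumption, so check the actual file name after pulling.

```python
import shlex


def default_sif_name(docker_uri):
    """Guess the file name that `singularity pull` writes for a docker:// URI.

    Assumption: the image is stored as <name>_<tag>.sif, as in the example
    above; an untagged reference is assumed to resolve to "latest".
    """
    ref = docker_uri.split("://", 1)[-1]    # drop the docker:// scheme
    name_tag = ref.rsplit("/", 1)[-1]       # keep the last path component
    name, _, tag = name_tag.partition(":")  # split "name:tag"
    return f"{name}_{tag or 'latest'}.sif"


def singularity_shell_command(image, nv=False, binds=()):
    """Build a `singularity shell` command line as a shell-quoted string."""
    argv = ["singularity", "shell"]
    if nv:
        argv.append("--nv")                 # expose the host NVIDIA driver and GPUs
    for path in binds:
        argv += ["--bind", path]            # mount a host directory in the container
    argv.append(image)
    return shlex.join(argv)


image = default_sif_name("docker://anibali/pytorch:1.5.0-cuda9.2-ubuntu18.04")
print(singularity_shell_command(image, nv=True, binds=["/opt/sw"]))
```

Building the argument list first and quoting it with `shlex.join` keeps paths with spaces or special characters intact if the command is later pasted into a job script.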

Singularity pytorch_1.5.0-cuda9.2-ubuntu18.04.sif:~/Singularity/Container> python3

Python 3.8.1 (default, Jan 8 2020, 22:29:32)

[GCC 7.3.0] :: Anaconda, Inc. on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> torch.cuda.is_available()

True

>>> device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

>>> print(device)

cuda:0

>>>

Singularity - create SIF from a singularity recipe

Example: Singularity definition file (“simple-example.def”) with Python 3.7 and NumPy:

Bootstrap: docker
From: nvidia/cuda:9.2-cudnn7-runtime-ubuntu18.04

%post
    # set up python 3.7
    apt-get update
    apt-get install -y software-properties-common
    add-apt-repository ppa:deadsnakes/ppa
    apt-get update
    apt-get install -y python3.7
    update-alternatives --install /usr/bin/python3 python /usr/bin/python3.7 1
    update-alternatives --set python /usr/bin/python3.7

    # set up pip
    apt-get install -y curl
    curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py
    python3 get-pip.py

    # install numpy
    pip install numpy

The container has to be built on a workstation where you have sudo or root permissions:

singularity build ubuntu1804-numpy.sif simple-example.def

The SIF file can then be copied to the cluster and used as described on the previous slides.

Singularity - create SIF from a singularity recipe

VSC-3 > module load go/1.11 singularity/3.4.1 cuda/9.1.85
VSC-4 > spack load singularity %gcc@9.1.0
VSC-4 > module load singularity/3.5.2-gcc-9.1.0-fp2564h

$ cat numpy-example.py

import numpy

x = numpy.add(41,1)

print("The answer is", x)

singularity exec ubuntu1804-numpy.sif python3 ./numpy-example.py

The answer is 42
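Before running a larger workload in a freshly built image, it can help to confirm which interpreter and packages the container actually provides. The following is a minimal sketch using only the standard library. The function name `describe_environment` and the script name used in the note below are hypothetical.

```python
import importlib.util
import sys


def describe_environment(packages=("numpy",)):
    """Report the interpreter version and whether each package is importable."""
    version = "%s.%s.%s" % sys.version_info[:3]
    available = {
        # find_spec returns None when a top-level package cannot be found
        name: importlib.util.find_spec(name) is not None
        for name in packages
    }
    return {"python": version, "packages": available}


info = describe_environment()
print("Python", info["python"])
for name, ok in info["packages"].items():
    print(name, "available" if ok else "missing")
```

Saved as, say, `check-env.py`, it could be run in the same way as the NumPy example: `singularity exec ubuntu1804-numpy.sif python3 ./check-env.py`.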