This version (2022/10/04 06:53) was approved by katrin.The Previously approved version (2014/10/24 12:34) is available.



OpenFOAM

OpenFOAM on VSC-4

Check the currently available OpenFOAM modules/versions:

```sh
module avail openfoam*
```

Load OpenFOAM:

```sh
spack load -r openfoam-org@7 %intel@19.0.5.281   # load openfoam-org version 7 and additional packages
module load intel/19.0.5                          # loading the compiler with spack does not work; it must be loaded separately
```
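To confirm that the load step above actually put OpenFOAM on the `PATH`, one can look up `foamExec`, the wrapper the submission script below relies on. A minimal sketch in POSIX sh; the function name `check_foam_loaded` is hypothetical and not part of OpenFOAM or spack:

```sh
#!/bin/sh
# Hypothetical helper: report whether OpenFOAM's foamExec wrapper is on PATH.
# Prints its location and returns 0 if found, returns 1 otherwise.
check_foam_loaded() {
    exe=$(command -v foamExec) || {
        echo "foamExec not found: run 'spack load' for openfoam first" >&2
        return 1
    }
    echo "$exe"
}
```

In a job script, `check_foam_loaded || exit 1` right after the load lines stops the job early instead of letting it fail later in the solver steps.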

Example submission script:

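```sh
#!/bin/sh
#SBATCH -J sim
#SBATCH -N 1
#SBATCH -A p71428
#SBATCH --qos p71428_0096
#SBATCH --tasks-per-node=48

module purge                                       # start from a clean module environment
spack load -r openfoam-org@7 %intel@19.0.5.281
module load intel/19.0.5

EXE=`which foamExec`                               # foamExec runs a command inside the OpenFOAM environment

$EXE decomposePar -allRegions                      # decompose every region according to its decomposeParDict
mpirun -np 48 $EXE chtMultiRegionFoam -parallel    # run the solver on 48 MPI ranks
$EXE reconstructPar -newTimes -allRegions          # reconstruct only time steps not yet reconstructed
$EXE rm -r processor*                              # delete the decomposed processor directories
```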
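The script hard-codes 48 MPI ranks, while `decomposePar` creates as many `processor*` directories as the `numberOfSubdomains` entry of the case's `decomposeParDict`; the two numbers have to match for the parallel run to start. A minimal pre-flight check, sketched under the assumption that the entry is written on one line such as `numberOfSubdomains 48;` and that the tasks-per-node value is a plain integer. The helper names are hypothetical; `SLURM_JOB_NUM_NODES` and `SLURM_NTASKS_PER_NODE` are standard Slurm job variables (the latter is only set when a tasks-per-node option is given):

```sh
#!/bin/sh
# Hypothetical helper: print the numberOfSubdomains value of a decomposeParDict.
# Assumes the entry sits on a single line, e.g. "numberOfSubdomains 48;".
get_subdomains() {
    sed -n 's/^[[:space:]]*numberOfSubdomains[[:space:]][[:space:]]*\([0-9][0-9]*\)[[:space:]]*;.*$/\1/p' "$1" | head -n 1
}

# Hypothetical helper: MPI ranks allocated by Slurm (nodes x tasks per node),
# falling back to the first argument when run outside a Slurm job.
slurm_ranks() {
    if [ -n "$SLURM_JOB_NUM_NODES" ] && [ -n "$SLURM_NTASKS_PER_NODE" ]; then
        echo $(( SLURM_JOB_NUM_NODES * SLURM_NTASKS_PER_NODE ))
    else
        echo "$1"
    fi
}

# Hypothetical helper: fail if the decomposition and the rank count differ.
# usage: check_decomposition DICT RANKS
check_decomposition() {
    n=$(get_subdomains "$1")
    if [ "$n" != "$2" ]; then
        echo "numberOfSubdomains ($n) in $1 does not match $2 MPI ranks" >&2
        return 1
    fi
}
```

Inside the job script one could set `NP=$(slurm_ranks 48)`, run `check_decomposition system/decomposeParDict "$NP" || exit 1` before `decomposePar`, and then use `mpirun -np "$NP"`. For multi-region cases decomposed with `-allRegions`, the dictionaries under `system/<region>/` may need the same check.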

Source the OpenFOAM bashrc to set the environment variables needed to compile custom code:

```sh
source $WM_PROJECT_DIR/etc/bashrc
```
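If `WM_PROJECT_DIR` is empty (for example because the load step was skipped), the path above silently becomes `/etc/bashrc`, which on many Linux systems is the system-wide shell startup file, so the command can succeed without setting up OpenFOAM at all. A minimal guarded variant, sketched under the assumption that it is called from bash (the OpenFOAM file is written for bash); `source_foam_env` is a hypothetical name:

```sh
#!/bin/sh
# Hypothetical helper: source OpenFOAM's etc/bashrc only if WM_PROJECT_DIR is set.
# Without the guard an empty variable turns the path into /etc/bashrc.
source_foam_env() {
    if [ -z "$WM_PROJECT_DIR" ]; then
        echo "WM_PROJECT_DIR is not set: load openfoam first" >&2
        return 1
    fi
    if [ ! -r "$WM_PROJECT_DIR/etc/bashrc" ]; then
        echo "cannot read $WM_PROJECT_DIR/etc/bashrc" >&2
        return 1
    fi
    # variables set by the sourced file stay in the calling shell
    . "$WM_PROJECT_DIR/etc/bashrc"
}
```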

The ODE solvers in the openfoam-org versions should be used with the GCC compiler.

(old): OpenFOAM and local temp space on VSC-1

A simple job script for using the /work/tmp directory on VSC-1 for OpenFOAM:
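The original VSC-1 job script is not reproduced here. As a rough illustration of the idea only, the sketch below copies a case into a job-private directory under a local scratch base, runs a command there, and copies the results back. The scratch base is passed as an argument (on VSC-1 that was `/work/tmp`); the function name `run_in_scratch` and its argument order are assumptions:

```sh
#!/bin/sh
# Hypothetical sketch: run a command on a copy of a case in local scratch space.
# usage: run_in_scratch SCRATCH_BASE CASE_DIR COMMAND [ARGS...]
run_in_scratch() {
    base=$1; case_dir=$2; shift 2
    work=$(mktemp -d "$base/foam.XXXXXX") || return 1
    cp -R "$case_dir/." "$work/" || return 1
    # run the solver (or any other command) inside the scratch copy
    ( cd "$work" && "$@" )
    rc=$?
    # copy results back even if the run failed; keep scratch if the copy fails
    cp -R "$work/." "$case_dir/" && rm -rf "$work"
    return "$rc"
}
```

Copying results back even after a failed run keeps partial time steps available for inspection.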

(old): OpenFOAM compile scripts

(old): INTEL icc and INTELMPI on VSC-1

In July 2013, VSC-1 was updated to Scientific Linux 6.4 (identical to VSC-2). In addition, the latest InfiniBand software includes only Intel MPI. To install OpenFOAM on VSC-1, please use the installation scripts from VSC-2.

(old): INTEL icc and Qlogic MPI on VSC-1

Set the MPI version to 'qlogicmpi_intel-0.1.0', then log out and log in again.

Download the OpenFOAM and ThirdParty source packages and place them in the same directory as the scripts provided below.
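Since the install scripts expect the archives next to them, a short pre-flight check can save a failed build. A sketch, assuming the archives follow the `OpenFOAM-<version>.tgz` / `ThirdParty-<version>.tgz` naming of the official source releases (adjust if your downloads are named differently); `check_sources` is a hypothetical name:

```sh
#!/bin/sh
# Hypothetical pre-flight check: are both source archives present in DIR?
# Assumes the naming OpenFOAM-<version>.tgz and ThirdParty-<version>.tgz.
# usage: check_sources DIR VERSION
check_sources() {
    missing=0
    for pkg in OpenFOAM ThirdParty; do
        if [ ! -f "$1/$pkg-$2.tgz" ]; then
            echo "missing: $1/$pkg-$2.tgz" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_sources "$(dirname "$0")" 2.1.1 || exit 1` at the top of an install script would abort before any compilation starts.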

(old): Version 1.7.1

Patched file: turbulentheatfluxtemperaturefvpatchscalarfield.c

Place it in the same directory from which the script is executed.

(old): Version 2.1.1

(old): INTEL icc and Intel MPI on VSC-2

Set the MPI version to 'intel_mpi_intel64-4.0.3.008' (for 1.7.1 and 2.1.1) or 'impi_intel-4.1.0.024' (for 2.2.0), then log out and log in again.

Download the OpenFOAM and ThirdParty source packages and place them in the same directory as the scripts provided below.

(old): Version 1.7.1

Patched file: turbulentheatfluxtemperaturefvpatchscalarfield.c

Place it in the same directory from which the script is executed.

(old): Version 2.1.1

(old): Version 2.2.0

(old): Version 2.2.2

(old): Version 2.3.x

Version built from the latest OpenFOAM git repository:

Intel MPI 4.1.0.024: installopenfoam_2.3.x_vsc2_git_intel_mpi.4.1.0.024.sh

Intel MPI 4.1.3.045: installopenfoam_2.3.x_vsc2_git_intel_mpi.4.1.3.045.sh
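The two scripts differ only in the Intel MPI version they target. A trivial sketch that maps the selected MPI version to the corresponding script name; `install_script_for` is hypothetical, and only the two versions listed above are covered:

```sh
#!/bin/sh
# Hypothetical helper: map an Intel MPI version to the matching 2.3.x install script.
install_script_for() {
    case $1 in
        4.1.0.024|4.1.3.045)
            echo "installopenfoam_2.3.x_vsc2_git_intel_mpi.$1.sh" ;;
        *)
            echo "no 2.3.x install script for Intel MPI $1" >&2
            return 1 ;;
    esac
}
```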

(old): Version foam-ext-3.0

The script may need to be run twice, because git clone somehow terminates the script.
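Rather than restarting by hand, the install step can be wrapped in a small retry loop. This sketch assumes the install script can safely be run again after an interrupted `git clone`, as the note above implies; `retry` is a hypothetical helper:

```sh
#!/bin/sh
# Hypothetical wrapper: run a command up to MAX times until it succeeds.
# usage: retry MAX COMMAND [ARGS...]
retry() {
    max=$1; shift
    try=1
    while ! "$@"; do
        if [ "$try" -ge "$max" ]; then
            echo "giving up after $try attempts: $*" >&2
            return 1
        fi
        try=$((try + 1))
        echo "attempt $try of $max: $*" >&2
    done
}
```

Usage would look like `retry 2 sh ./your_install_script.sh`, where the script name is a placeholder for the foam-ext install script.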

(old): Version foam-ext-3.1

Compilation succeeded, except for some mesh conversion tools.

Scaling of OpenFOAM

Performance tests were done with different setups, depending on the cluster used:

VSC-1

- Intel C compiler 11.1

- QLogic MPI 0.1.0

VSC-2

- Intel C compiler 12.1.3

- Intel MPI 4.0.3.008 / impi_intel-4.1.0.024

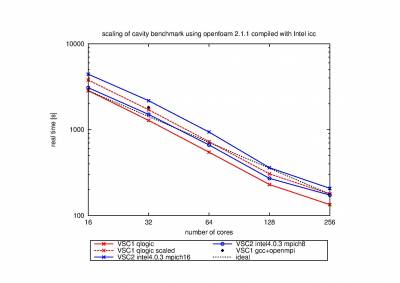

Compared to the gcc + OpenMPI version, a speed-up of about 1/3 was found on VSC-1 with 32 cores (see the version 2.1.1 table below).
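The "about 1/3" can be checked against the version 2.1.1 table, which lists 1818 s for gcc + OpenMPI and 1282 s for the QLogic build on 32 cores of VSC-1. A small awk-based sketch (`compare_runtimes` is a hypothetical name) that expresses such a pair both as a speed-up factor and as a runtime reduction:

```sh
#!/bin/sh
# Hypothetical helper: compare a reference runtime with a faster one.
# Prints the speed-up factor T_REF/T_NEW and the runtime reduction in percent.
# usage: compare_runtimes T_REF T_NEW
compare_runtimes() {
    awk -v ref="$1" -v new="$2" 'BEGIN {
        printf "speed-up %.2f, runtime reduced by %.1f%%\n", ref / new, 100 * (ref - new) / ref
    }'
}
```

`compare_runtimes 1818 1282` prints `speed-up 1.42, runtime reduced by 29.5%`; if the statement refers to these runs, the one-third figure is closest to the reduction in runtime.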

Note: the runs labelled “VSC1 qlogic scaled” are the same as “VSC1 qlogic”, but with the runtimes multiplied by a factor of 1.33, the nominal clock-speed ratio between VSC-1 and VSC-2.
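The scaling described in the note is a plain multiplication. A sketch that applies it to "cores seconds" pairs read from standard input; the default factor 1.33 comes from the note, while `scale_runtimes` and the input format are assumptions for illustration:

```sh
#!/bin/sh
# Hypothetical helper: multiply the runtime column of "cores seconds" lines
# by a clock-speed factor (default 1.33) and round to whole seconds.
# usage: scale_runtimes [FACTOR] < pairs.txt
scale_runtimes() {
    awk -v f="${1:-1.33}" '{ printf "%s %.0f\n", $1, $2 * f }'
}
```

For example, the first three VSC-1 qlogic entries of the version 2.1.1 table (2843, 1282 and 545 s) become 3781, 1705 and 725 s.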

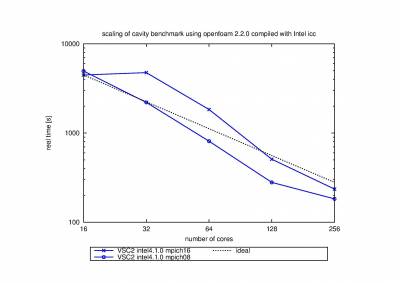

Cavity benchmark for version 2.2.0

| cores | VSC-2 intel.4.1.0.024 mpich16 | VSC-2 intel.4.1.0.024 mpich8 |
|---|---|---|
| 16 | 4471 | 4949 |
| 32 | 4753 | 2202 |
| 64 | 1832 | 809 |
| 128 | 508 | 279 |
| 256 | 234 | 182 |

Cavity benchmark for version 2.1.1

Real (wall-clock) times for the benchmark in seconds:

- mpich16: 16 cores per node were used for the calculation

- mpich8: 8 cores per node were used for the calculation

| cores | VSC-1 qlogic | VSC-2 intel.4.0.3.008 mpich16 | VSC-2 intel.4.0.3.008 mpich8 | VSC-1 gcc + openmpi |
|---|---|---|---|---|
| 16 | 2843 | 4426 | 3083 | |
| 32 | 1282 | 2176 | 1504 | 1818 |
| 64 | 545 | 937 | 663 | |
| 128 | 229 | 360 | 270 | |
| 256 | 134 | 207 | 173 | |
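To read the tables as strong-scaling results, the runtimes can be turned into speed-up and parallel efficiency relative to the smallest core count, using S(n) = T(n0)/T(n) and E(n) = S(n)·n0/n. A minimal awk sketch; `scaling` is a hypothetical name and expects one "cores seconds" pair per line, starting with the reference row:

```sh
#!/bin/sh
# Hypothetical helper: strong-scaling speed-up and parallel efficiency
# relative to the first "cores seconds" line on stdin.
#   speed-up   S(n) = T(n0) / T(n)
#   efficiency E(n) = S(n) * n0 / n
scaling() {
    awk 'NR == 1 { n0 = $1; t0 = $2 }
         { s = t0 / $2; printf "%d %.2f %.2f\n", $1, s, s * n0 / $1 }'
}
```

For the VSC-1 qlogic column above, the 32-core and 64-core rows give efficiencies of 1.11 and 1.30; values above 1 mean the runs scale better than linearly relative to the 16-core run.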

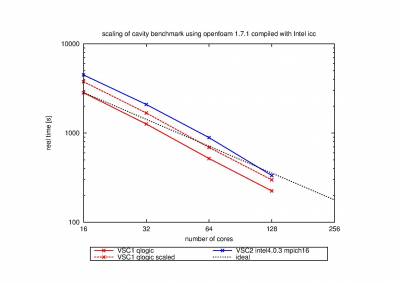

Cavity benchmark for version 1.7.1

Real (wall-clock) times for the benchmark in seconds:

- mpich16: 16 cores per node were used for the calculation

| cores | VSC-1 qlogic | VSC-2 intel.4.0.3.008 mpich16 |
|---|---|---|
| 16 | 2826 | 4473 |
| 32 | 1260 | 2086 |
| 64 | 517 | 887 |
| 128 | 224 | 333 |
| 256 | - | 225 |

FoamPro

FoamPro was used; please ask the admins for details.