This version (2022/06/20 09:01) was approved by msiegel.


VASP benchmarks

The following plots show the performance of the VASP code depending on the selected mpich environment in the grid engine, the number of processes and the number of threads (see also hybrid jobs, item Automatic modification through parallel environment).

A 5×4 supercell with 150 palladium atoms and 24 oxygen atoms, i.e., 3 pure palladium layers and one mixed palladium/oxygen layer, was used for the following benchmark tests.

VSC 1

The code was compiled with the Intel compiler, Intel MKL (BLACS, SCALAPACK), and Intel MPI (4.1.0.024).

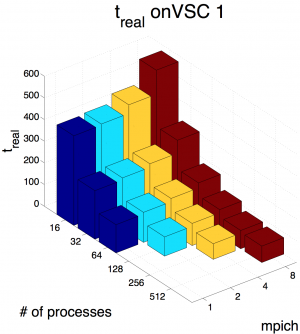

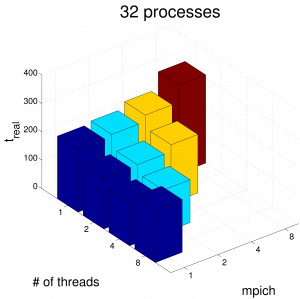

Figure 1 shows that the real time decreases as the number of processes grows. A lower mpich value (number of processes per node) reduces the running time further. Figure 2 shows that the running time can be decreased additionally by using 2 or 4 threads instead of only 1 thread. With 8 threads, however, the running time increases again relative to 4 threads.

Figures 3 and 4 relate the running time to the core hours (= running time × number of slots). The core hours are lowest for a single process. Consider the hypothetical case of perfect scaling, in which doubling the number of processes halves the running time. The running time would then be inversely proportional to the number of processes, and the core hours would be constant. Because of overhead such as communication and data transfer, real scaling never reaches this ideal, so the core hours increase with the number of slots.
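The relation between running time and core hours under perfect scaling can be illustrated with a minimal Python sketch. The function names are chosen here for illustration, the single-process running time `t1_seconds` is a hypothetical value and not a measurement from these benchmarks, and one slot per process is assumed.

```python
def core_hours(runtime_seconds: float, slots: int) -> float:
    """Core hours = running time x number of slots (seconds converted to hours)."""
    return runtime_seconds * slots / 3600.0


def perfect_scaling_runtime(t1_seconds: float, processes: int) -> float:
    """Idealized running time: doubling the processes halves the running time."""
    return t1_seconds / processes


# Hypothetical single-process running time (illustration only, not measured).
t1_seconds = 7200.0

for n in (1, 2, 4, 8, 16, 32):
    t = perfect_scaling_runtime(t1_seconds, n)
    # Assumption: one slot per process.
    print(f"{n:3d} processes: {t:8.1f} s, {core_hours(t, n):.2f} core hours")
```

Every line reports the same number of core hours, which is the constant behavior of the idealized case. Measured running times fall more slowly than in this idealization, so the measured core hours grow with the number of slots.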

It can be concluded that the real time can be reduced by increasing the number of slots, but at the price of disproportionately increasing core hours. The number of slots should therefore be increased only within the range where the gain in real time is substantial. Saving only a few minutes at the cost of several core hours may be inefficient. A further benefit of not requesting too many slots is that the waiting time in the queue is reduced.

Note that the behavior of other codes can differ considerably from this example, and even the behavior of the same code may depend strongly on the input. It is therefore recommended to test your specific application in order to find the optimum combination of mpich environment, number of processes, and number of threads.
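When planning such a test series, it can help to list the combinations of processes per node and threads that fit on one node. The following Python sketch does this under the assumption of 8 cores per node, a value inferred from the filled cells of Table 1 rather than stated in the text. The function name and the candidate values are illustrative.

```python
def hybrid_combinations(cores_per_node: int, options=(1, 2, 4, 8)):
    """Return (processes_per_node, threads) pairs that fit on one node."""
    return [
        (ppn, threads)
        for ppn in options
        for threads in options
        if ppn * threads <= cores_per_node
    ]


# Assumption: 8 cores per node (inferred from Table 1, not stated in the text).
for ppn, threads in hybrid_combinations(8):
    print(f"{ppn} process(es) per node, {threads} thread(s) per process")
```

Under this assumption the sketch yields the same ten combinations that were measured for Table 1.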

Figure 1: real running time depending on the selected mpich environment and the number of processes. Here the number of threads is always 1.

Figure 2: real running time for 32 processes: the mpich environment and the number of threads are varied corresponding to Table 1.

| real time for 32 processes [s] | mpich 1 | mpich 2 | mpich 4 | mpich 8 |
|---|---|---|---|---|
| 1 thread | 220.5800 | 228.4900 | 251.0800 | 310.9900 |
| 2 threads | 178.7900 | 181.1600 | 205.7800 | |
| 4 threads | 150.4200 | 150.9300 | | |
| 8 threads | 185.6900 | | | |

Table 1: real running time for 32 processes.
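The values of Table 1 can be compared directly with a few lines of Python. The sketch below copies the measured times from the table and looks up the fastest combination and the speedup from threading. The dictionary layout and the function names are choices made here for illustration.

```python
# Real running times [s] for 32 processes, copied from Table 1.
# Keys: (threads, processes per node selected by the mpich environment).
table1 = {
    (1, 1): 220.58, (1, 2): 228.49, (1, 4): 251.08, (1, 8): 310.99,
    (2, 1): 178.79, (2, 2): 181.16, (2, 4): 205.78,
    (4, 1): 150.42, (4, 2): 150.93,
    (8, 1): 185.69,
}


def fastest(times):
    """Return the ((threads, mpich), seconds) entry with the shortest time."""
    return min(times.items(), key=lambda item: item[1])


def speedup(times, reference, candidate):
    """Ratio of the reference running time to the candidate running time."""
    return times[reference] / times[candidate]


(best_threads, best_mpich), best_time = fastest(table1)
print(f"fastest: {best_threads} threads, mpich {best_mpich}: {best_time:.2f} s")
print(f"4 threads vs. 1 thread at mpich 1: {speedup(table1, (1, 1), (4, 1)):.2f}x")
```

For this benchmark the fastest entry is 4 threads with mpich 1, roughly 1.47 times faster than 1 thread in the same environment.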

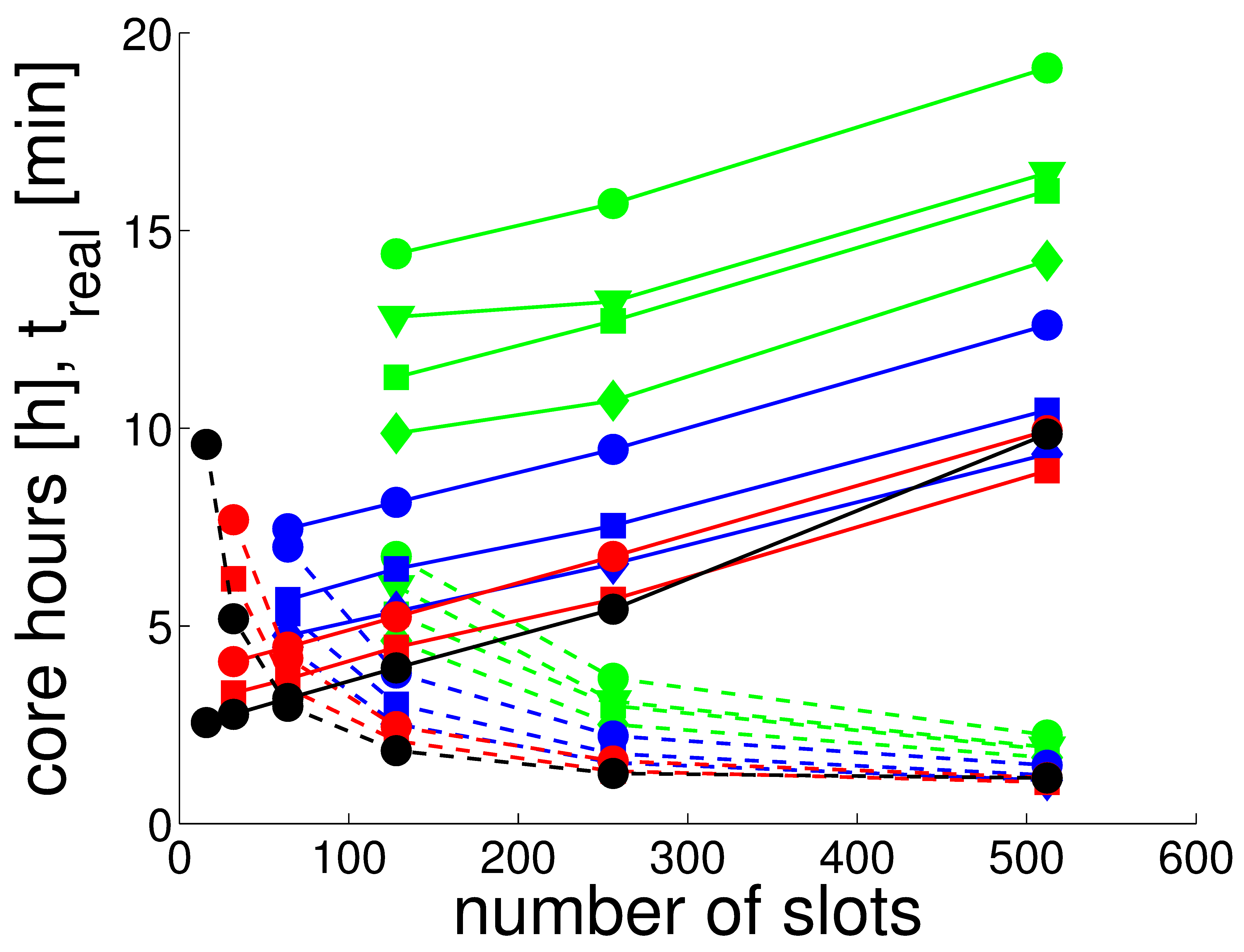

Figure 3: real running time (dashed lines [min]) and core hours (solid lines [h]) depending on the number of slots.

The colors denote calculations with the same mpich environment, i.e., mpich/mpich8: black, mpich4: red, mpich2: blue, and mpich1: green.

The markers denote the number of threads, namely, 1 (circles), 2 (squares), 4 (diamonds), or 8 (down triangles) threads.


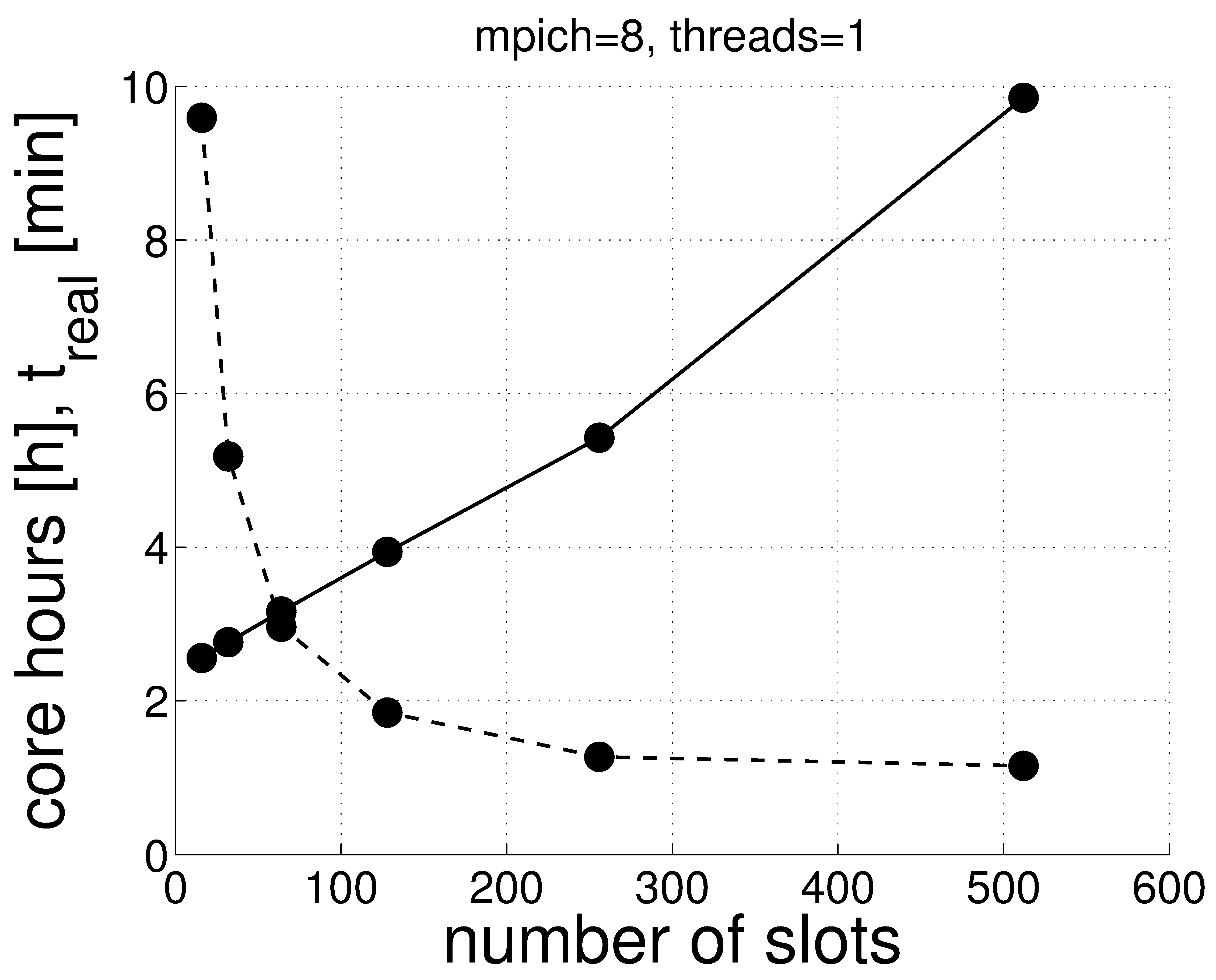

Figure 4: real running time (dashed lines [min]) and core hours (solid lines [h]) for the best result (mpich/1 thread).


VSC 2

The code was compiled with the Intel compiler, Intel MKL (BLACS, SCALAPACK), and Intel MPI (4.0.1.007).

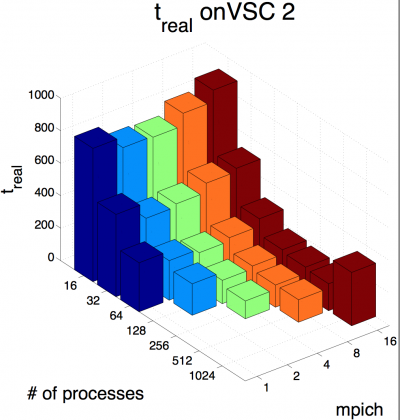

The figure shows how the computing time depends on the selected mpich environment and the number of processes. mpich1 means 1 process per node, mpich2 means 2 processes per node, and so forth. The environment for 16 processes per node does not follow this naming convention; it is called mpich.

The computing time tends to decrease with a decreasing number of processes per node (mpich) and an increasing number of processes, which also corresponds to an increasing number of slots. However, when the number of processes increases further, the computing time rises again. The reason is the suboptimal scaling of BLACS and SCALAPACK. Using ELPA instead can improve this.

Figure 5: real running time depending on the selected mpich environment and the number of processes. Here the number of threads is always 1.

VSC 3

The code was compiled with the Intel compiler, Intel MKL (BLACS, SCALAPACK), and Intel MPI (5.0.0.028).

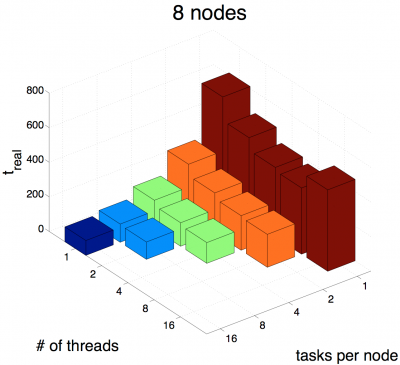

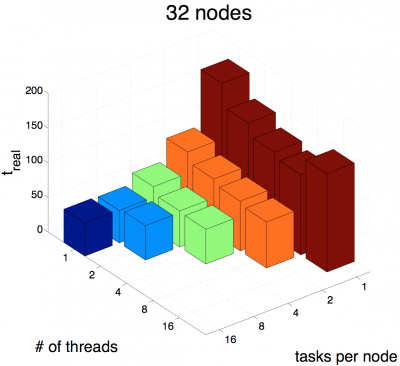

Figure 6 shows that the running time of this benchmark decreases substantially with the number of MPI processes. The decrease in running time with the number of threads is less pronounced. However, other applications may behave differently.

Figure 6: real running time on eight and 32 nodes depending on the number of MPI processes and the number of threads.

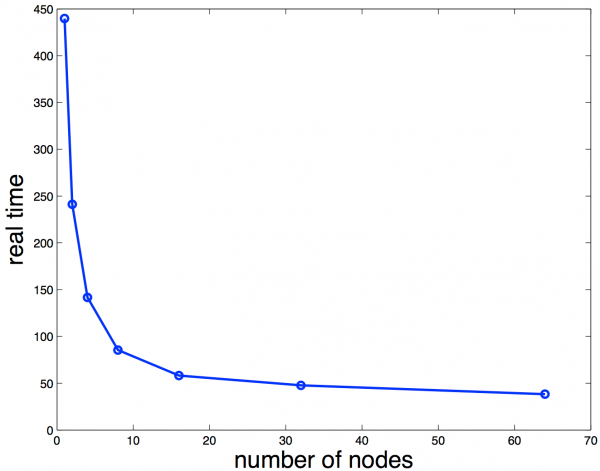

Figure 7: real running time for 16 tasks (MPI processes) per node and one thread depending on the number of nodes.
