
VSC-3 – supercomputer

- Article written by Claudia Blaas-Schenner (VSC Team); last update 2019-10-08 by cb.

OUTLINE:

- VSC – Vienna Scientific Cluster

- Supercomputers for beginners – introducing VSC-3 to our (new) users

- Supercomputers for beginners – what is a supercomputer?

- VSC-3 – what does it look like?

- VSC-3 – components of a supercomputer

- Parallel hardware architectures – which parallel programming models can be used?

- VSC-3 compute nodes

- VSC-3 node-interconnect

- VSC-3 ping-pong – intra-node vs. inter-node

VSC – Vienna Scientific Cluster

- The VSC is a joint high performance computing (HPC) facility of Austrian universities.

- Our mission: within the limits of the available resources, we satisfy the HPC needs of our users.

- VSC is primarily devoted to research.

- Who can use VSC? Scientific personnel of the partner universities (see http://vsc.ac.at/access). VSC is also open to users from other academic and research institutions.

- Projects (test, funded, …): Access to VSC is granted on the basis of peer-reviewed projects.

- Project manager (usually your supervisor): applies for the project and its extensions, creates user accounts, …

- Publications: Please acknowledge VSC and add your publications; they will then be visible on the VSC homepage!

| VSC links | Information provided |
|---|---|
| http://vsc.ac.at | VSC homepage (general info) |
| https://service.vsc.ac.at | VSC service website (application) |
| https://wiki.vsc.ac.at | VSC user documentation |
| | VSC user support & contact |

- VSC Training Courses: http://vsc.ac.at/training

- VSC course slides: VSC-Linux, VSC-Intro

Supercomputers for beginners

- What is a supercomputer?

- A supercomputer is a computer with a high level of computing performance compared to a general-purpose computer. Performance of a supercomputer is measured in floating-point operations per second (FLOPS)… [from Wikipedia]

- A supercomputer is listed in the TOP500.

| System | Linpack performance | TOP500 rank | GREEN500 rank | #1 on TOP500 |
|---|---|---|---|---|
| VSC-1 (2009) | 35 TFlop/s | 156 (11/2009) | 94 (06/2009) | 1.8 PFlop/s (11/2009) |
| VSC-2 (2011) | 135 TFlop/s | 56 (06/2011) | 71 (06/2011) | 8 PFlop/s (06/2011) |
| VSC-3 (2014) | 596 TFlop/s | 85 (11/2014) | 86 (11/2014) | 33 PFlop/s (11/2014) |
| VSC-3 (………) | 596 TFlop/s | 460 (11/2017) | 175 (11/2017) | 93 PFlop/s (11/2017) |
| VSC-4 (2019) | 2.7 PFlop/s | 82 (06/2019) | – | 148 PFlop/s (06/2019) |

VSC-3 – what does it look like?

VSC-3 – what does it look like? – inside

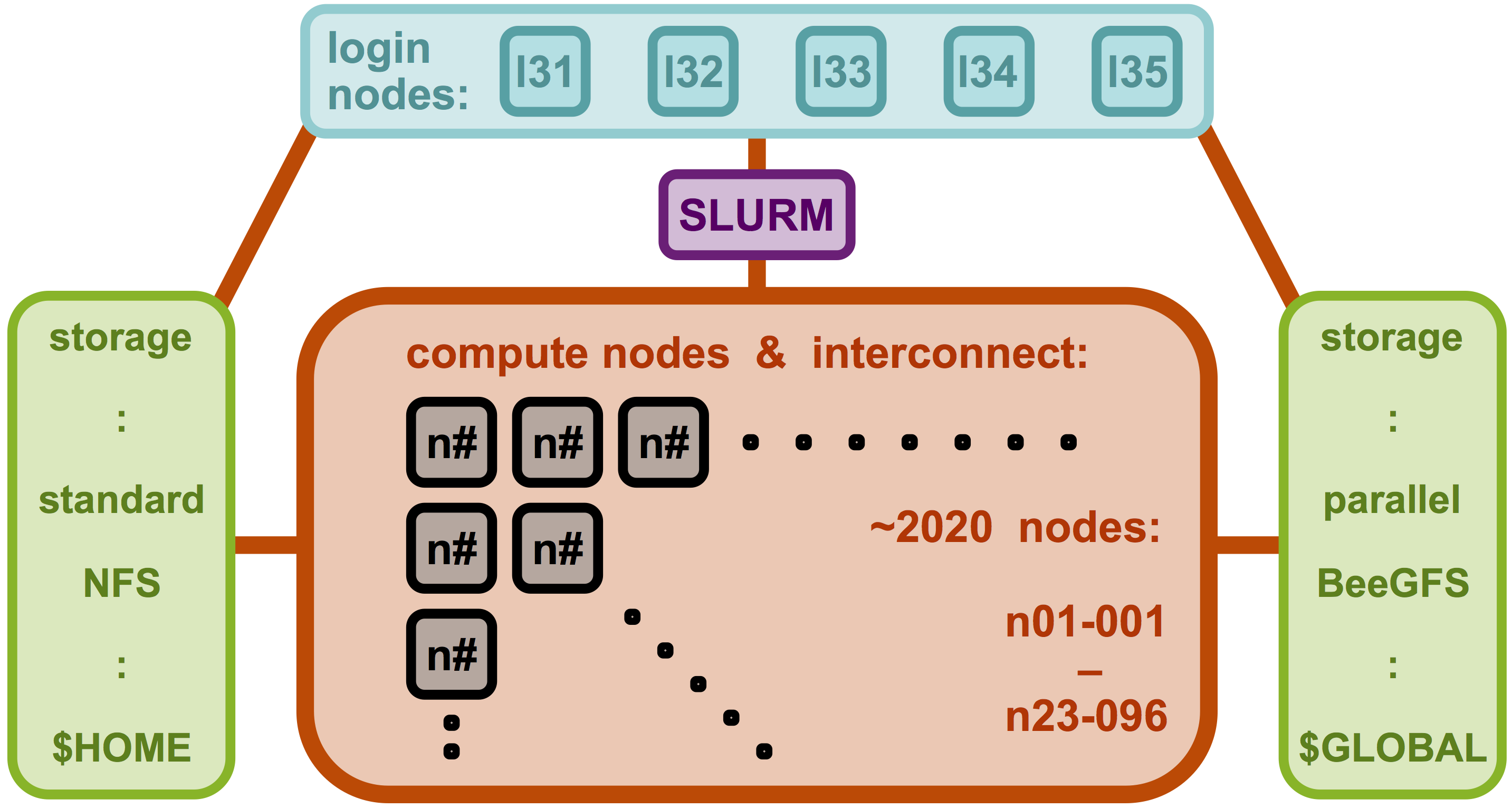

VSC-3 – components of a supercomputer


- login nodes vs. compute nodes

- shared resources (login nodes, storage) vs. resources used exclusively by one user (compute nodes)

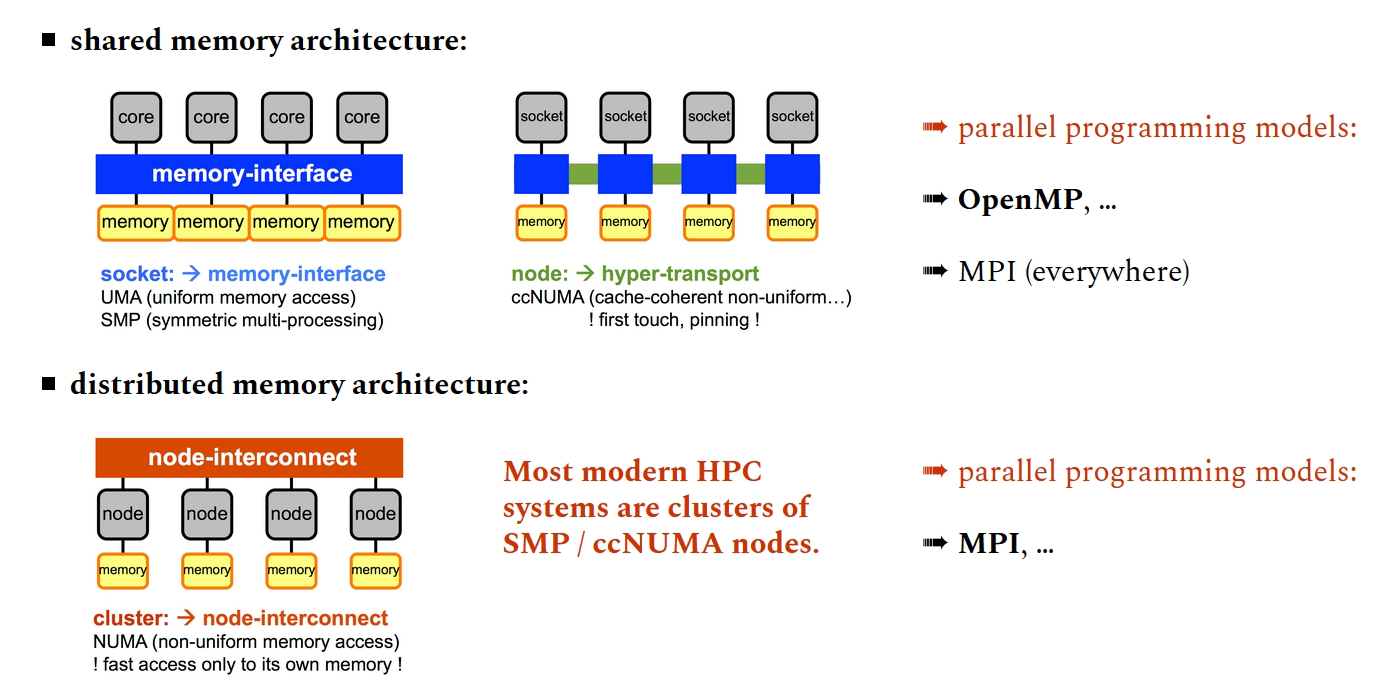

Parallel hardware architectures

VSC-3 compute nodes

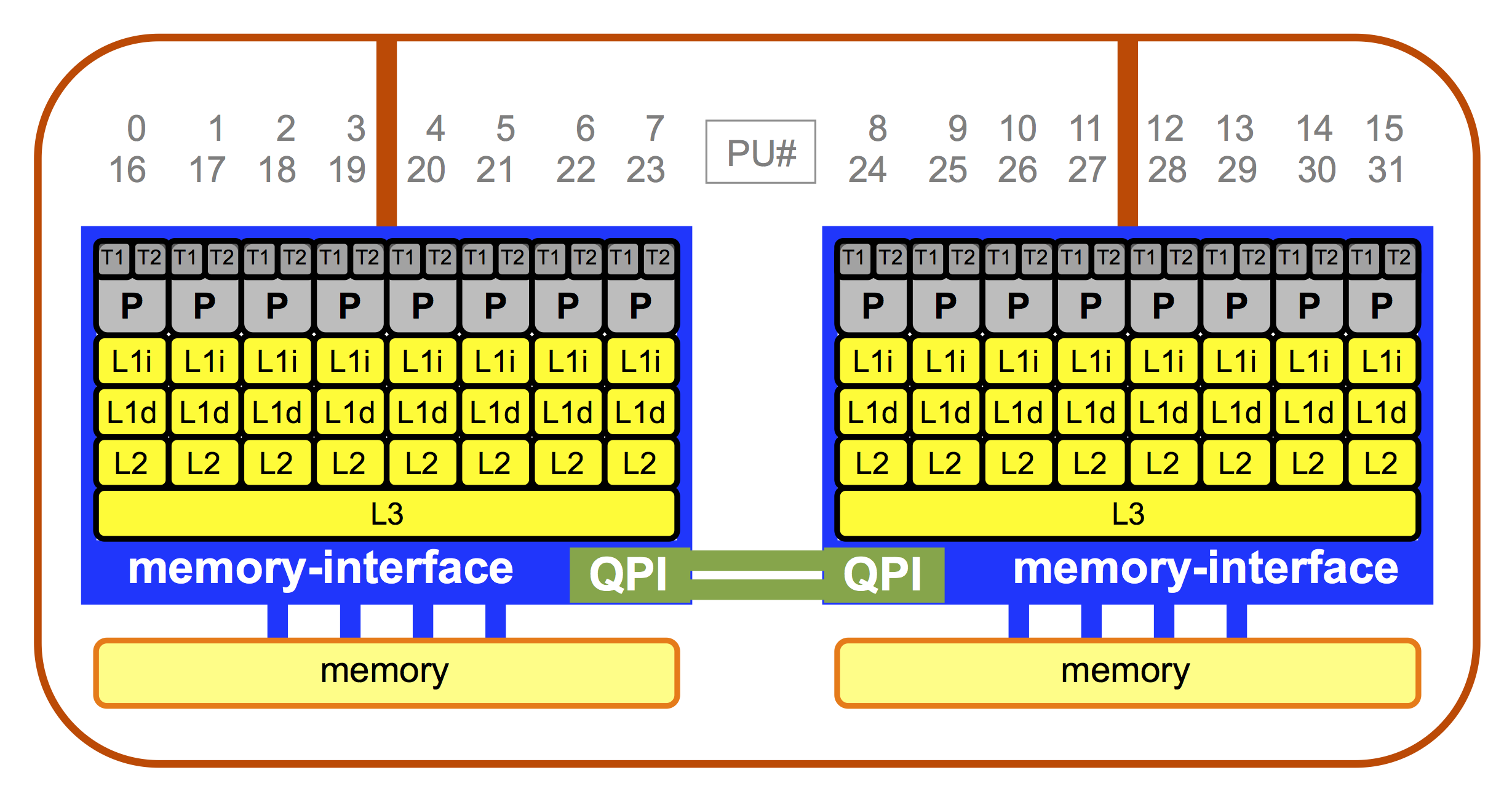

- Most nodes have Intel Xeon Ivy Bridge CPUs (E5-2650 v2 @ 2.60 GHz) and 64 GB of memory, some have 128 GB or 256 GB; in addition, there are special types of hardware.

- 1 node = 2 sockets (CPUs), 8 cores per socket (P), 2 hardware threads per core (T1/T2), plus 2 HCAs (host channel adapters)

- intra-socket (memory bandwidth): 59.7 GB/s (max); inter-socket via QPI (QuickPath Interconnect): 32 GB/s (max)

- inter-node via dual-rail Intel QDR-80: 4 GB/s (max) / 3.4 GB/s (effective) per HCA

- Avoiding slow data paths is the key to most performance optimizations! ➠ Affinity matters!
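As a rough illustration of why slow data paths matter, the Python sketch below turns the bandwidths listed above into the time needed to move a fixed amount of data over each path. It is a bandwidth-only estimate (latency, contention and caches are ignored), it assumes 1 GB = 10^9 bytes, and the names `BANDWIDTH_GBS` and `transfer_time_ms` are hypothetical.

```python
# Bandwidths quoted above for a VSC-3 node, in GB/s.
# Assumption: 1 GB = 1e9 bytes; latency and contention are ignored.
BANDWIDTH_GBS = {
    "intra-socket (max)": 59.7,
    "inter-socket via QPI (max)": 32.0,
    "inter-node, 1 HCA (effective)": 3.4,
}


def transfer_time_ms(n_bytes: float, bandwidth_gbs: float) -> float:
    """Bandwidth-only estimate of the time to move n_bytes, in milliseconds."""
    return n_bytes / (bandwidth_gbs * 1e9) * 1e3


if __name__ == "__main__":
    size = 1e9  # 1 GB, an arbitrary example size
    fastest = max(BANDWIDTH_GBS.values())
    for path, bw in BANDWIDTH_GBS.items():
        t = transfer_time_ms(size, bw)
        # Slowdown relative to the fastest path in the table.
        print(f"{path:32s} {t:7.1f} ms  slowdown {fastest / bw:4.1f}x")
```

Moving the same data across nodes instead of within a socket costs more than an order of magnitude in time under this simple model, which is why process and thread placement (pinning) is worth getting right.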

Processing units (PU#) ➠ pinning; see the article on SLURM and pinning in the VSC Wiki.

Memory hierarchy (mem_0064 nodes):

- L1 instruction cache: 32 kB, private to each core

- L1 data cache: 32 kB, private to each core

- L2 cache: 256 kB, private to each core (unified)

- L3 cache: 20 MB, shared by the 8 cores of one socket

- memory: 32 GB per socket

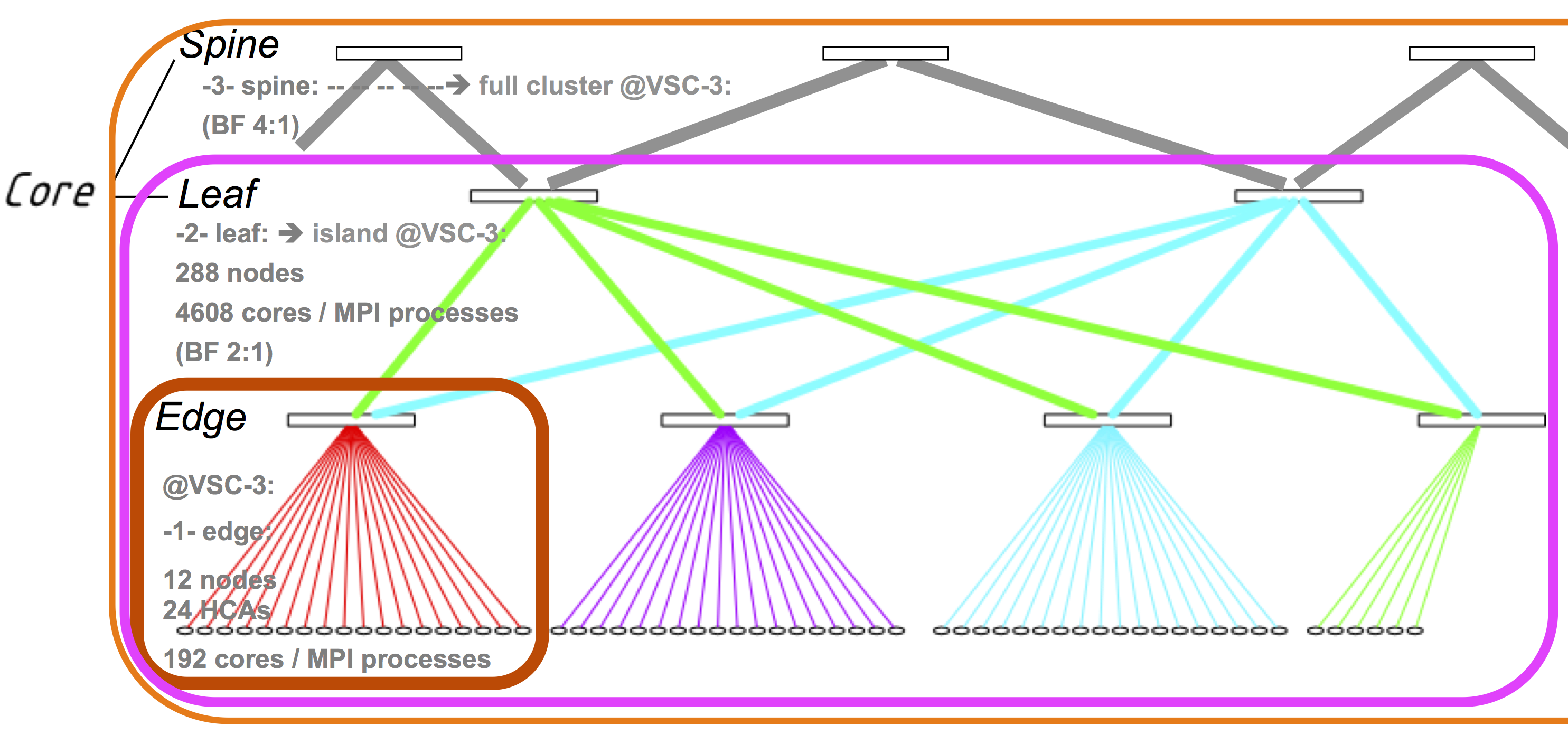
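The per-core and per-socket figures above add up to the resources of one whole mem_0064 node. The Python sketch below does that arithmetic and also estimates the theoretical double-precision peak of a node. The value of 8 floating-point operations per cycle and core (AVX: one 4-wide add plus one 4-wide multiply per cycle on Ivy Bridge) is an assumption not stated in this article, Turbo Boost is ignored, and `node_summary` is a hypothetical helper.

```python
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 8
THREADS_PER_CORE = 2
L2_KB_PER_CORE = 256
L3_MB_PER_SOCKET = 20
MEM_GB_PER_SOCKET = 32
CLOCK_GHZ = 2.6  # nominal clock of the E5-2650 v2
DP_FLOP_PER_CYCLE = 8  # assumption: AVX, 4-wide add + 4-wide multiply per cycle


def node_summary() -> dict:
    """Aggregate resources of one node, derived from per-core/per-socket values."""
    cores = SOCKETS_PER_NODE * CORES_PER_SOCKET
    return {
        "cores": cores,
        "hardware_threads": cores * THREADS_PER_CORE,
        "l2_total_mb": cores * L2_KB_PER_CORE / 1024,
        "l3_total_mb": SOCKETS_PER_NODE * L3_MB_PER_SOCKET,
        "memory_gb": SOCKETS_PER_NODE * MEM_GB_PER_SOCKET,
        # Theoretical peak: cores x clock x FLOP per cycle (in GFlop/s).
        "peak_gflops": cores * CLOCK_GHZ * DP_FLOP_PER_CYCLE,
    }


if __name__ == "__main__":
    for key, value in node_summary().items():
        print(f"{key:18s} {value}")
```

The resulting 64 GB of memory per node matches the value given for the standard nodes, which is a quick consistency check of the per-socket numbers.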

VSC-3 node-interconnect

IB fabric = dual-rail Intel QDR-80 = 3-level fat tree with blocking factors (BF) of 2:1 / 4:1 (schematic figure, numbers only). The blocking factor is the ratio of down-links to up-links; blocking might introduce additional latency.

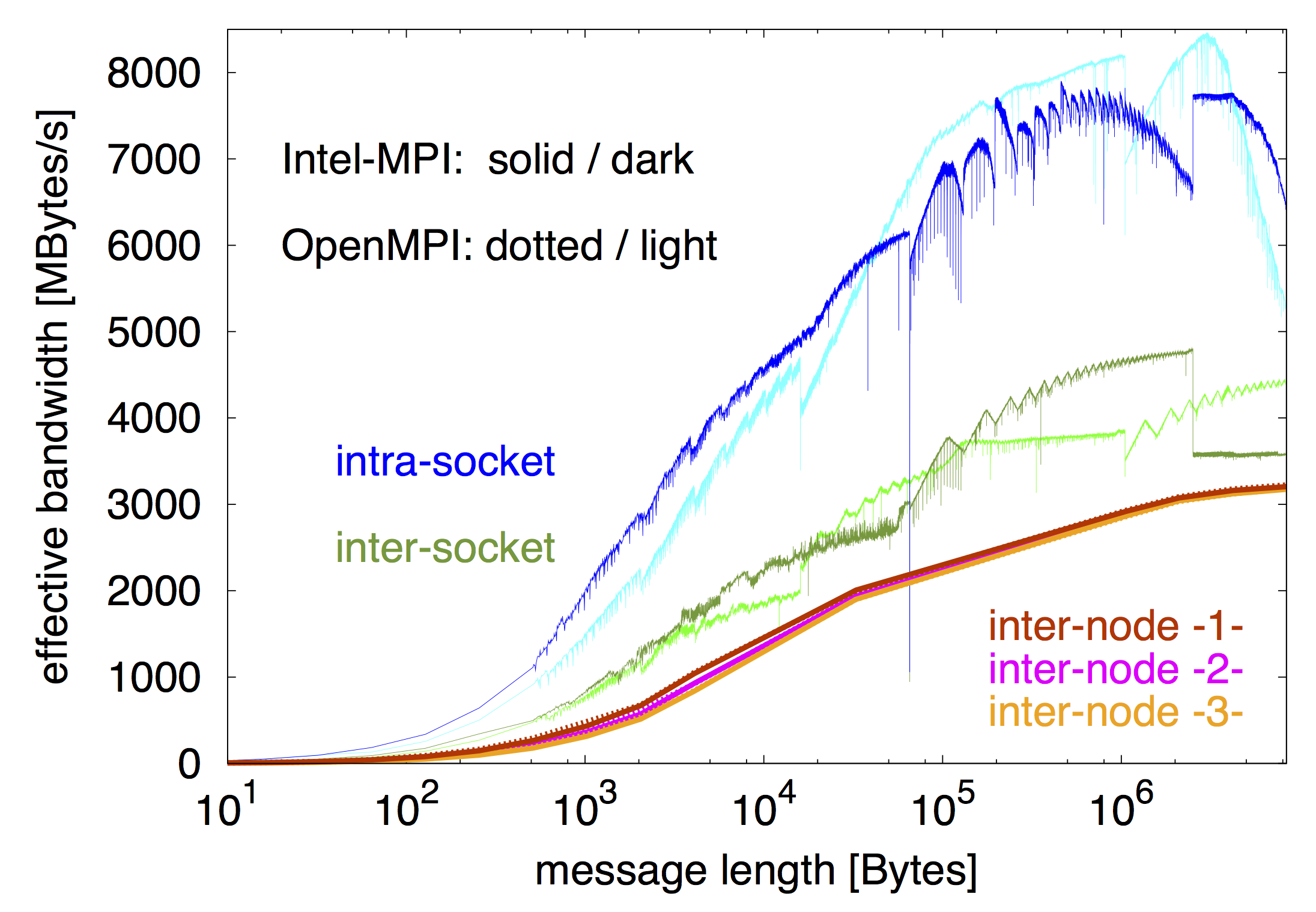

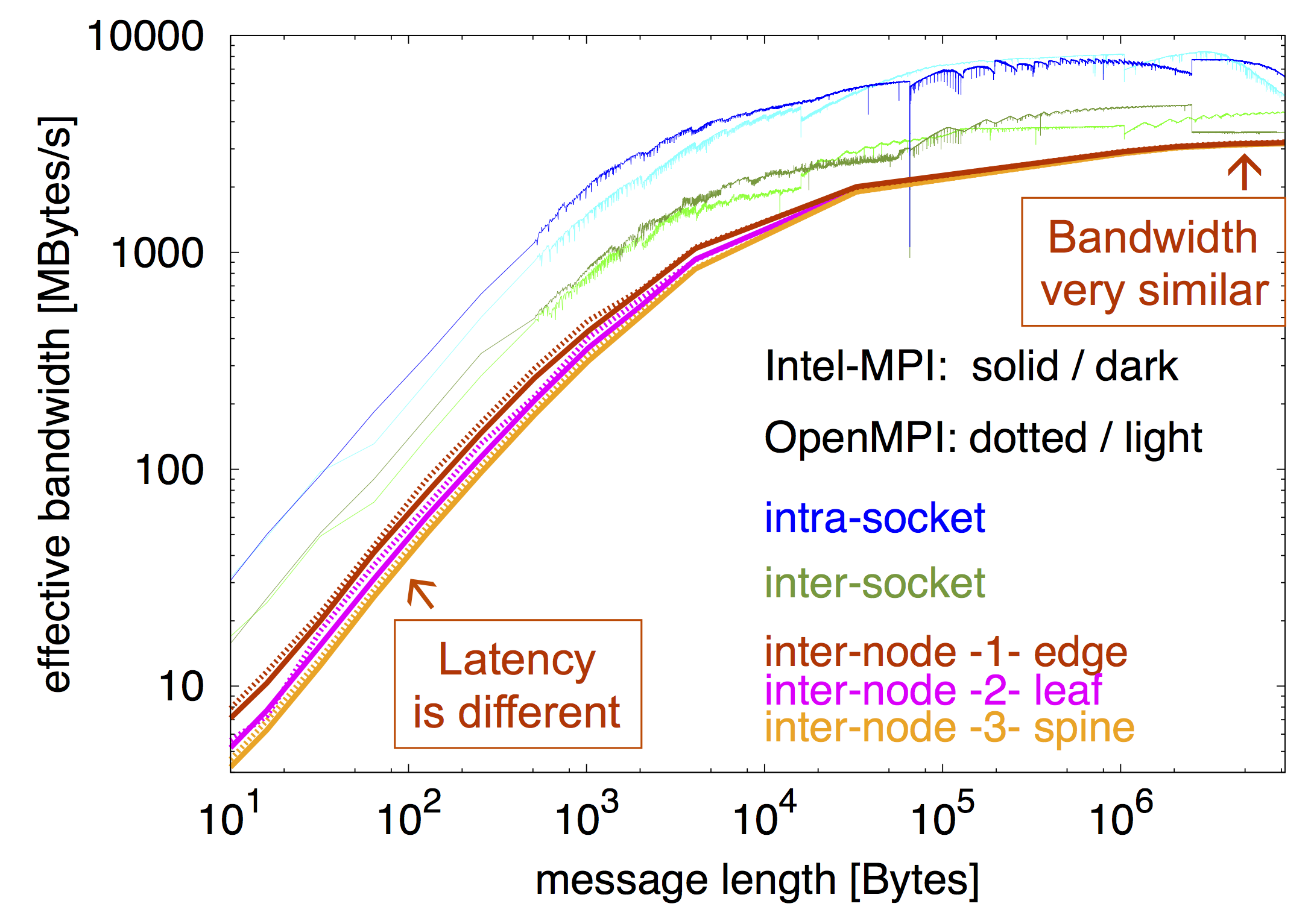
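A blocking factor of down-links : up-links means that, in the worst case where all nodes below a switch send traffic through its up-links at the same time, those up-links are oversubscribed by exactly that ratio. The Python sketch below gives this simplified worst-case share per node; it assumes up-links and down-links have the same speed and ignores routing and congestion effects, and `worst_case_share_gbs` is a hypothetical helper.

```python
def worst_case_share_gbs(link_bw_gbs: float, down_links: int, up_links: int) -> float:
    """Per-node bandwidth when all down-links push traffic through the up-links.

    Simplified model: all links have the same speed, so the usable bandwidth
    per node is the link bandwidth divided by the blocking factor down/up.
    """
    if down_links <= 0 or up_links <= 0:
        raise ValueError("link counts must be positive")
    blocking_factor = down_links / up_links
    # A non-blocking level (BF <= 1) does not reduce the per-node share.
    return link_bw_gbs / max(blocking_factor, 1.0)


if __name__ == "__main__":
    per_hca_gbs = 3.4  # effective bandwidth per HCA quoted for VSC-3
    for down, up in ((1, 1), (2, 1), (4, 1)):
        share = worst_case_share_gbs(per_hca_gbs, down, up)
        print(f"BF {down}:{up} -> at most {share:.2f} GB/s per node and HCA")
```

In practice, traffic rarely saturates all up-links at once, so this is a lower bound under the stated assumptions rather than an expected value.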

VSC-3 ping-pong – intra-node vs. inter-node

- 1 node = 2 sockets with 8 cores per socket, plus 2 HCAs

- inter-node = IB fabric = dual-rail Intel QDR-80 = 3-level fat tree (BF: 2:1 / 4:1)

- ping-pong benchmark: module load intel/16.0.3 intel-mpi/5.1.3 | openmpi/1.10.2 (1 HCA)

- MPI latency & bandwidth (plus typical values for comparison):

| VSC-3 (MPI ping-pong) | latency |
|---|---|
| intra-socket | 0.3 μs |
| inter-socket | 0.7 μs |
| IB, level 1 (edge) | 1.4 μs |
| IB, level 2 (leaf) | 1.8 μs |
| IB, level 3 (spine) | 2.3 μs |

| Typical values for | latency | bandwidth |
|---|---|---|
| L1 cache | 1–2 ns | 100 GB/s |
| L2/L3 cache | 3–10 ns | 50 GB/s |
| memory | 100 ns | 10 GB/s |
| HPC networks (per node / 2 HCAs) | 1–10 μs | 1–8 GB/s |
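Latency and bandwidth together determine how long a message takes. A common first-order model (the Hockney or latency-bandwidth model, used here for illustration and not part of the measurements) is t(n) = L + n/B. The Python sketch below plugs in the measured IB latencies from the table and the 3.4 GB/s effective bandwidth per HCA quoted for the compute nodes. The half-performance message length n½ = L·B is the size at which latency and transfer time contribute equally. All names are hypothetical.

```python
# Measured MPI ping-pong latencies on VSC-3 (microseconds).
LATENCY_US = {
    "IB, level 1 (edge)": 1.4,
    "IB, level 2 (leaf)": 1.8,
    "IB, level 3 (spine)": 2.3,
}
BANDWIDTH_BYTES_PER_S = 3.4e9  # effective bandwidth per HCA


def message_time_us(n_bytes: int, latency_us: float,
                    bandwidth: float = BANDWIDTH_BYTES_PER_S) -> float:
    """Latency-bandwidth (Hockney) model: t(n) = L + n / B, in microseconds."""
    return latency_us + n_bytes / bandwidth * 1e6


def half_performance_length(latency_us: float,
                            bandwidth: float = BANDWIDTH_BYTES_PER_S) -> float:
    """n_1/2 = L * B: message size in bytes where latency equals transfer time."""
    return latency_us * 1e-6 * bandwidth


if __name__ == "__main__":
    for level, lat in LATENCY_US.items():
        print(f"{level}: n_1/2 = {half_performance_length(lat):.0f} B")
        for n in (8, 8 * 1024, 1024 * 1024):
            print(f"  {n:>8d} B -> {message_time_us(n, lat):8.2f} us")
```

Under this model, messages of a few kilobytes or less are dominated by latency, so aggregating many small messages into fewer large ones usually pays off; for large messages, the bandwidth term dominates and the switch level matters much less.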