
Node access and job control

- Article written by Jan Zabloudil (VSC Team) (last update 2017-10-09 by jz).

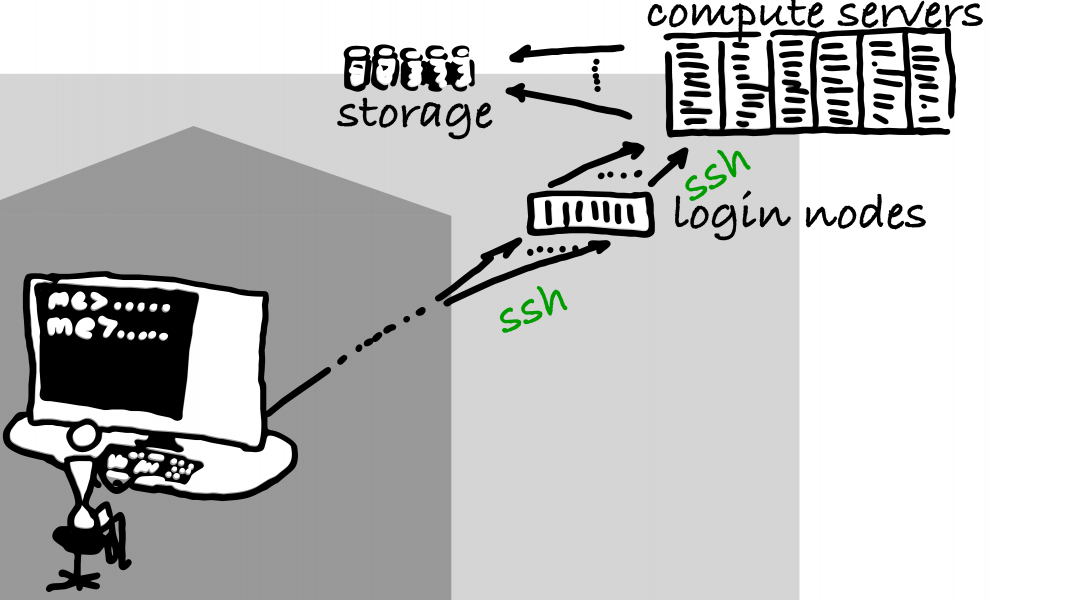

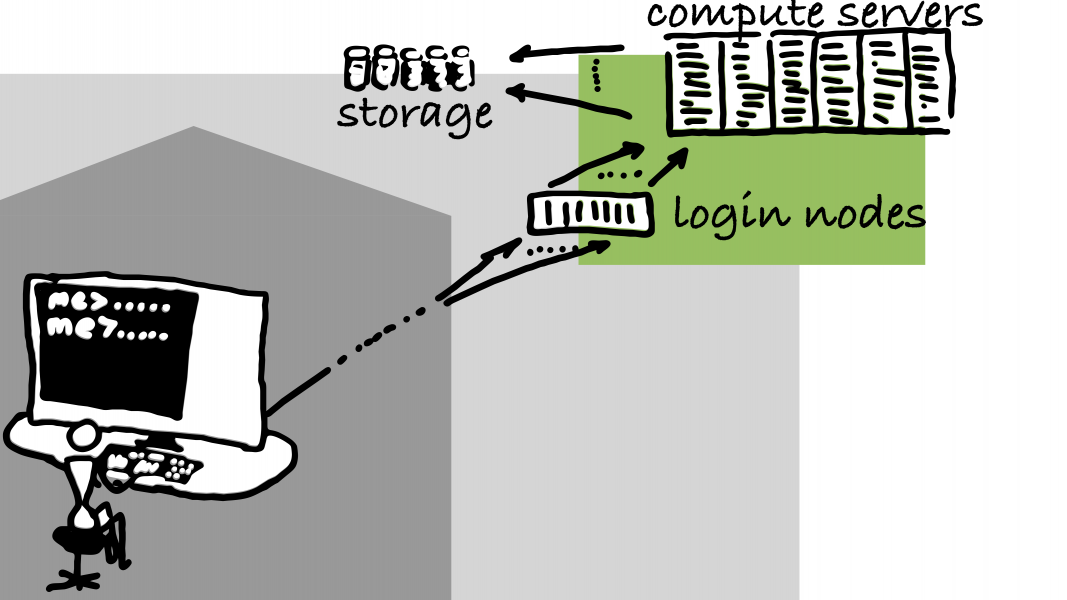

Node access


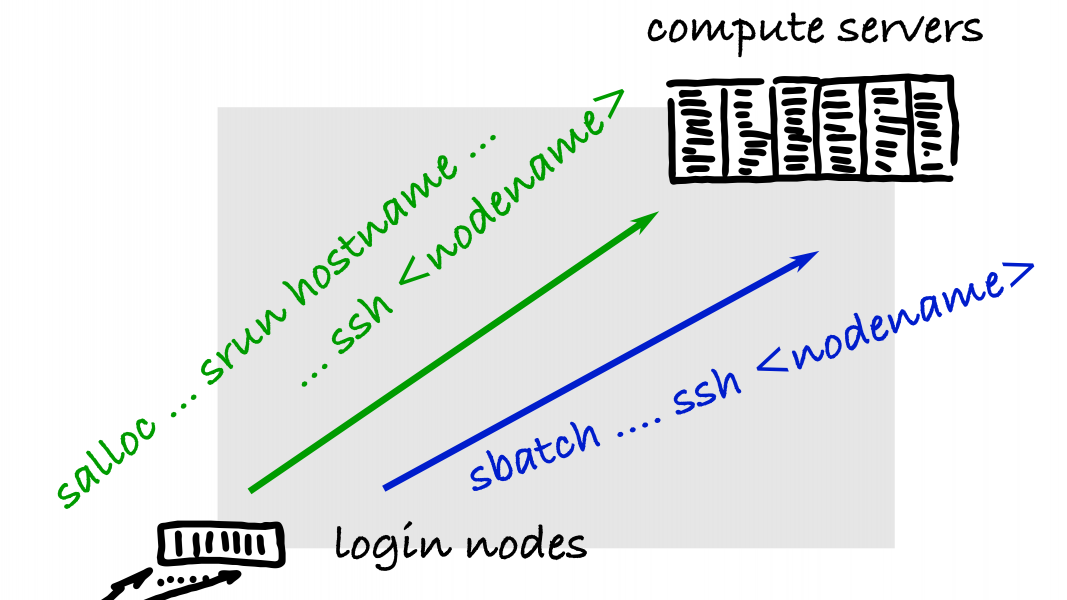

1) Job scripts: sbatch

(DEMO)

    [me@l31]$ sbatch job.sh        # submit batch job
    [me@l31]$ squeue -u me         # find out on which node(s) job is running
    [me@l31]$ ssh <nodename>       # connect to node
    [me@n320-038]$ ...             # connected to node named n320-038

More about this from Claudia.

Interactive jobs: salloc

2) With salloc. EXAMPLE: linpack (DEMO)

Example directory: ~/examples/06_node_access_job_control/linpack

    [me@l31 linpack]$ salloc -J me_hpl -N 1    # allocate "1" node(s)
    [me@l31 linpack]$ squeue -u me             # find out which node(s) is (are) allocated
    [me@l31 linpack]$ srun hostname            # clean & check functionality & licence
    [me@l31 linpack]$ ssh n3XX-YYY             # connect to node n3XX-YYY
    [me@n3XX-YYY ~]$ module purge
    [me@n3XX-YYY ~]$ module load intel/17 intel-mkl/2017 intel-mpi/2017
    [me@n3XX-YYY ~]$ cd my_directory_name/linpack
    [me@n3XX-YYY linpack]$ mpirun -np 16 ./xhpl

Stop the process (Ctrl+C; labeled Strg+C on German keyboards).

    [me@n3XX-YYY linpack]$ exit
    [me@l31 linpack]$ exit


Notes:

    [me@l31]$ srun hostname

NOTE: with salloc, the Slurm prolog script is not run automatically when the nodes are allocated; running srun hostname right after the allocation triggers it. The prolog performs basic tasks such as

- cleaning up after the previous job,

- checking basic node functionality,

- adapting firewall settings to access license servers.

Exercise 2: job script

1.) Now submit the same job with a job script:

    [training@l31 my_directory_name]$ sbatch job.sh
    [training@l31 my_directory_name]$ squeue -u training
      JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
    5262833  mem_0064      hpl training  R       0:04      1 n341-001

2.) Log in to the node listed under NODELIST and run commands that give you information about your job:

    [training@l31 my_directory_name]$ ssh n341-001
    Last login: Fri Apr 21 08:43:07 2017 from l31.cm.cluster

Exercise 2: job script, cont.

    [training@n341-001 ~]$ top

    top - 13:06:06 up 19 days,  2:57,  2 users,  load average: 12.42, 4.16, 1.51
    Tasks: 442 total,  17 running, 425 sleeping,   0 stopped,   0 zombie
    %Cpu(s):  0.1 us,  0.0 sy,  0.0 ni, 99.9 id,  0.0 wa,  0.0 hi,  0.0 si,  0.0 st
    KiB Mem : 65940112 total, 62756072 free,  1586292 used,  1597748 buff/cache
    KiB Swap:        0 total,        0 free,        0 used. 62903424 avail Mem

      PID USER      PR  NI    VIRT    RES    SHR S  %CPU %MEM     TIME+ COMMAND
    12737 training  20   0 1915480  68580  18780 R 106.2  0.1   0:12.57 xhpl
    12739 training  20   0 1915480  68372  18584 R 106.2  0.1   0:12.55 xhpl
    12742 training  20   0 1856120  45412  13172 R 106.2  0.1   0:12.57 xhpl
    12744 training  20   0 1856120  45412  13172 R 106.2  0.1   0:12.52 xhpl
    12745 training  20   0 1856120  45464  13224 R 106.2  0.1   0:12.56 xhpl
    ...

Useful keys inside top:

- 1 … show the load of each CPU core

- Shift+H … show threads

3.) Cancel the job:

    [training@n341-001 ~]$ exit
    [training@l31 ~]$ scancel <job ID>
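The lookup in step 2 (finding the node under NODELIST) can also be scripted. The following Python sketch parses text in the default squeue layout shown in step 1 and returns the node list of each running job of a user. The function name `nodes_for_user` is hypothetical, and the sketch assumes that job names contain no whitespace.

```python
def nodes_for_user(squeue_output, user):
    """Map job ID -> NODELIST(REASON) for running jobs of `user`.

    Assumes the default squeue column order:
    JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)
    """
    result = {}
    lines = squeue_output.strip().splitlines()
    for line in lines[1:]:  # skip the header line
        fields = line.split(None, 7)  # at most 8 whitespace-separated fields
        if len(fields) < 8:
            continue  # not a complete job line
        jobid, _partition, _name, owner, state, _time, _nodes, nodelist = fields
        if owner == user and state == "R":
            result[jobid] = nodelist
    return result


# Sample taken from the squeue output in step 1 of this exercise.
sample = """\
JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)
5262833 mem_0064 hpl training R 0:04 1 n341-001
"""

print(nodes_for_user(sample, "training"))
```

Pending jobs (state PD) are skipped on purpose, because their last column holds a reason such as (Priority) rather than a node name.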


Prolog Failure

If a check in the SLURM prolog script fails on one of the nodes assigned to your job, you will see a message like the following in your slurm-$JOBID.out file:

Error running slurm prolog: 228

The error code (228) tells you which check failed. The currently defined error codes are:

    ERROR_MEMORY=200
    ERROR_INFINIBAND_HW=201
    ERROR_INFINIBAND_SW=202
    ERROR_IPOIB=203
    ERROR_BEEGFS_SERVICE=204
    ERROR_BEEGFS_USER=205
    ERROR_BEEGFS_SCRATCH=206
    ERROR_NFS=207
    ERROR_USER_GROUP=220
    ERROR_USER_HOME=221
    ERROR_GPFS_START=228
    ERROR_GPFS_MOUNT=229
    ERROR_GPFS_UNMOUNT=230

If this happens:

- the affected node is drained (unavailable for subsequent jobs until it is fixed),

- resubmit your job.
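To look up a prolog error code quickly, the table above can be put into a small script. The following Python sketch searches a text (for example the contents of a slurm-$JOBID.out file) for the prolog error message and translates the code into its name. The function name `explain_prolog_error` is hypothetical; the code table is copied from the list above.

```python
import re

# Error codes as listed in this article.
PROLOG_ERRORS = {
    200: "ERROR_MEMORY",
    201: "ERROR_INFINIBAND_HW",
    202: "ERROR_INFINIBAND_SW",
    203: "ERROR_IPOIB",
    204: "ERROR_BEEGFS_SERVICE",
    205: "ERROR_BEEGFS_USER",
    206: "ERROR_BEEGFS_SCRATCH",
    207: "ERROR_NFS",
    220: "ERROR_USER_GROUP",
    221: "ERROR_USER_HOME",
    228: "ERROR_GPFS_START",
    229: "ERROR_GPFS_MOUNT",
    230: "ERROR_GPFS_UNMOUNT",
}


def explain_prolog_error(text):
    """Return (code, name) for the first prolog error in `text`, or None."""
    match = re.search(r"Error running slurm prolog:\s*(\d+)", text)
    if match is None:
        return None  # no prolog failure reported in this text
    code = int(match.group(1))
    return code, PROLOG_ERRORS.get(code, "unknown error code")


print(explain_prolog_error("Error running slurm prolog: 228"))
```

In practice the text would be read from the job's output file; the literal string here only keeps the example self-contained.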

squeue options

    VSC-4 > squeue -u $user
           JOBID PARTITION     NAME     USER ST       TIME NODES NODELIST(REASON)
          409879  mem_0096 training       sh  R    1:10:59     3 n407-030,n411-[007-008]

    VSC-4 > squeue -p mem_0096 | more
           JOBID PARTITION     NAME     USER ST       TIME NODES NODELIST(REASON)
    ...
          407141  mem_0096 interfac   mjech3 PD       0:00     4 (Priority)
    409880_[1-6]  mem_0096 OP1_2_Fi wiesmann PD       0:00     1 (Resources,Priority)
          409879  mem_0096 training       sh  R    1:30:16     3 n407-030,n411-[007-008]
          402078  mem_0096      sim mboesenh  R 4-22:46:13     1 n403-007

- optional reformatting via -o, for example,

    VSC-4 > squeue -u $user -o "%.18i %.10Q %.12q %.8j %.8u %8U %.2t %.10M %.6D %R"
                 JOBID   PRIORITY          QOS     NAME     USER UID      ST       TIME  NODES NODELIST(REASON)
                409879    3000982        admin training       sh 71177     R    1:34:55      3 n407-030,n411-[007-008]
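The NODELIST column compresses node names with bracket ranges, e.g. n407-030,n411-[007-008]. On the cluster, scontrol show hostnames expands such a list. The following Python sketch illustrates the same idea for the simple form shown above (one prefix followed by one bracket group with numbers and ranges). The function names are hypothetical, and the sketch does not cover the full Slurm hostlist syntax.

```python
import re


def split_top_level(nodelist):
    """Split on commas that are not inside [...] brackets."""
    parts, depth, current = [], 0, ""
    for ch in nodelist:
        if ch == "[":
            depth += 1
        elif ch == "]":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append(current)
            current = ""
        else:
            current += ch
    if current:
        parts.append(current)
    return parts


def expand_nodelist(nodelist):
    """Expand e.g. 'n407-030,n411-[007-008]' into individual node names."""
    hosts = []
    for part in split_top_level(nodelist):
        match = re.fullmatch(r"([^\[\]]*)\[([^\[\]]+)\]", part)
        if match is None:
            hosts.append(part)  # plain node name without brackets
            continue
        prefix, ranges = match.groups()
        for item in ranges.split(","):
            low, _, high = item.partition("-")
            high = high or low       # single number, e.g. [007]
            width = len(low)         # keep zero padding
            for number in range(int(low), int(high) + 1):
                hosts.append(f"{prefix}{number:0{width}d}")
    return hosts


print(expand_nodelist("n407-030,n411-[007-008]"))
```

Each expanded name can then be used directly with ssh, as in the node access examples earlier in this article.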


scontrol

    VSC-4 > scontrol show job 409879
    JobId=409879 JobName=training
       UserId=sh(71177) GroupId=sysadmin(60000) MCS_label=N/A
       Priority=3000982 Nice=0 Account=sysadmin QOS=admin
       JobState=RUNNING Reason=None Dependency=(null)
       Requeue=0 Restarts=0 BatchFlag=0 Reboot=0 ExitCode=0:0
       RunTime=01:36:35 TimeLimit=10-00:00:00 TimeMin=N/A
       SubmitTime=2020-10-12T15:50:44 EligibleTime=2020-10-12T15:50:44
       AccrueTime=2020-10-12T15:50:44
       StartTime=2020-10-12T15:51:07 EndTime=2020-10-22T15:51:07 Deadline=N/A
       PreemptTime=None SuspendTime=None SecsPreSuspend=0
       LastSchedEval=2020-10-12T15:51:07
       Partition=mem_0096 AllocNode:Sid=l40:186217
       ReqNodeList=(null) ExcNodeList=(null)
       NodeList=n407-030,n411-[007-008]
       BatchHost=n407-030
       NumNodes=3 NumCPUs=288 NumTasks=3 CPUs/Task=1 ReqB:S:C:T=0:0:*:*
       TRES=cpu=288,mem=288888M,node=3,billing=288,gres/cpu_mem_0096=288
       Socks/Node=* NtasksPerN:B:S:C=0:0:*:* CoreSpec=*
       MinCPUsNode=1 MinMemoryNode=96296M MinTmpDiskNode=0
       Features=(null) DelayBoot=00:00:00
       OverSubscribe=NO Contiguous=0 Licenses=(null) Network=(null)
       Command=(null)
       WorkDir=/home/fs60000/sh
       Power=
       TresPerNode=cpu_mem_0096:96
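The scontrol output is a sequence of Key=Value pairs, which makes it easy to pick out single fields in a script. The following Python sketch splits such text into a dictionary. The function name `parse_scontrol_job` is hypothetical, and the sketch assumes that no value contains whitespace (a job name or path with spaces would be cut off).

```python
def parse_scontrol_job(text):
    """Turn 'Key=Value' tokens from scontrol show job into a dict.

    Only the first '=' separates key and value, so a token such as
    TRES=cpu=288,mem=288888M keeps its full value.
    """
    info = {}
    for token in text.split():
        key, sep, value = token.partition("=")
        if sep:  # ignore tokens without '='
            info[key] = value
    return info


# Excerpt of the scontrol output shown above.
sample = """\
JobId=409879 JobName=training
   JobState=RUNNING Reason=None Dependency=(null)
   RunTime=01:36:35 TimeLimit=10-00:00:00 TimeMin=N/A
   NodeList=n407-030,n411-[007-008]
   NumNodes=3 NumCPUs=288 NumTasks=3 CPUs/Task=1 ReqB:S:C:T=0:0:*:*
   TRES=cpu=288,mem=288888M,node=3,billing=288,gres/cpu_mem_0096=288
   Power=
"""

job = parse_scontrol_job(sample)
print(job["JobState"], job["NumCPUs"], job["NodeList"])
```

All values stay strings; converting fields such as NumCPUs to integers is left to the caller.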

Accounting: sacct

default:

    VSC-4 > sacct -j 409878
           JobID    JobName  Partition    Account  AllocCPUS      State ExitCode
    ------------ ---------- ---------- ---------- ---------- ---------- --------
    409878         training   mem_0096   sysadmin        288  COMPLETED      0:0

adjust output:

    VSC-4 > sacct -j 409878 -o jobid,jobname,cluster,nodelist,Start,End,cputime,cputimeraw,ncpus,qos,account,ExitCode
           JobID    JobName    Cluster        NodeList               Start                 End    CPUTime CPUTimeRAW      NCPUS        QOS    Account ExitCode
    ------------ ---------- ---------- --------------- ------------------- ------------------- ---------- ---------- ---------- ---------- ---------- --------
    409878         training       vsc4 n407-030,n411-+ 2020-10-12T15:49:42 2020-10-12T15:51:06   06:43:12      24192        288      admin   sysadmin      0:0
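The CPUTime and CPUTimeRAW columns follow from the other fields: sacct reports CPU time as elapsed time multiplied by the number of CPUs. The following Python sketch redoes this arithmetic for the job above, using only values from the sacct output. The conversion to core hours at the end is a plain unit conversion added for illustration.

```python
from datetime import datetime, timedelta

# Values taken from the sacct output above.
start = datetime.fromisoformat("2020-10-12T15:49:42")
end = datetime.fromisoformat("2020-10-12T15:51:06")
ncpus = 288

elapsed_s = int((end - start).total_seconds())  # wall-clock seconds
cputime_raw = elapsed_s * ncpus                 # corresponds to CPUTimeRAW
cputime = timedelta(seconds=cputime_raw)        # corresponds to CPUTime
core_hours = cputime_raw / 3600                 # seconds -> hours

print(elapsed_s, cputime_raw, cputime, core_hours)
```

The job ran for 84 seconds on 288 CPUs, which gives the 24192 CPU seconds (06:43:12) reported by sacct.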

Accounting: vsc3CoreHours.py

    VSC-3 > vsc3CoreHours.py -h
    usage: vsc3CoreHours.py [-h] [-S STARTTIME] [-E ENDTIME] [-D DURATION]
                            [-A ACCOUNTLIST] [-u USERNAMES] [-uni UNI]
                            [-d DETAIL_LEVEL] [-keys KEYS]

    getting cpu usage - start - end time

    optional arguments:
      -h, --help        show this help message and exit
      -S STARTTIME      start time, e.g., 2015-04[-01[T10[:04[:01]]]]
      -E ENDTIME        end time, e.g., 2015-04[-01[T10[:04[:01]]]]
      -D DURATION       duration, display the last D days
      -A ACCOUNTLIST    give comma separated list of projects for which the
                        accounting data is calculated, e.g., p70yyy,p70xxx.
                        Default: primary project.
      -u USERNAMES      give comma separated list of usernames
      -uni UNI          get usage statistics for one university
      -d DETAIL_LEVEL   set detail level; default=0
      -keys KEYS        show data from qos or user perspective, use either qos
                        or user; default=user

Accounting: vsc3CoreHours.py, cont.

    VSC-3 > vsc3CoreHours.py -S 2020-01-01 -E 2020-10-01 -u sh
    ===================================================
    Accounting data time range
    From: 2020-01-01 00:00:00
    To:   2020-10-01 00:00:00
    ===================================================
    Getting accounting information for the following account/user combinations:
    account:   sysadmin users: sh
    getting data, excluding these qos: goodluck, gpu_vis, gpu_compute
    ===============================
                 account     core_h
    _______________________________
                sysadmin   25775.15
    _______________________________
                   total   25775.15
