This version (2022/06/20 09:01) was approved by msiegel.


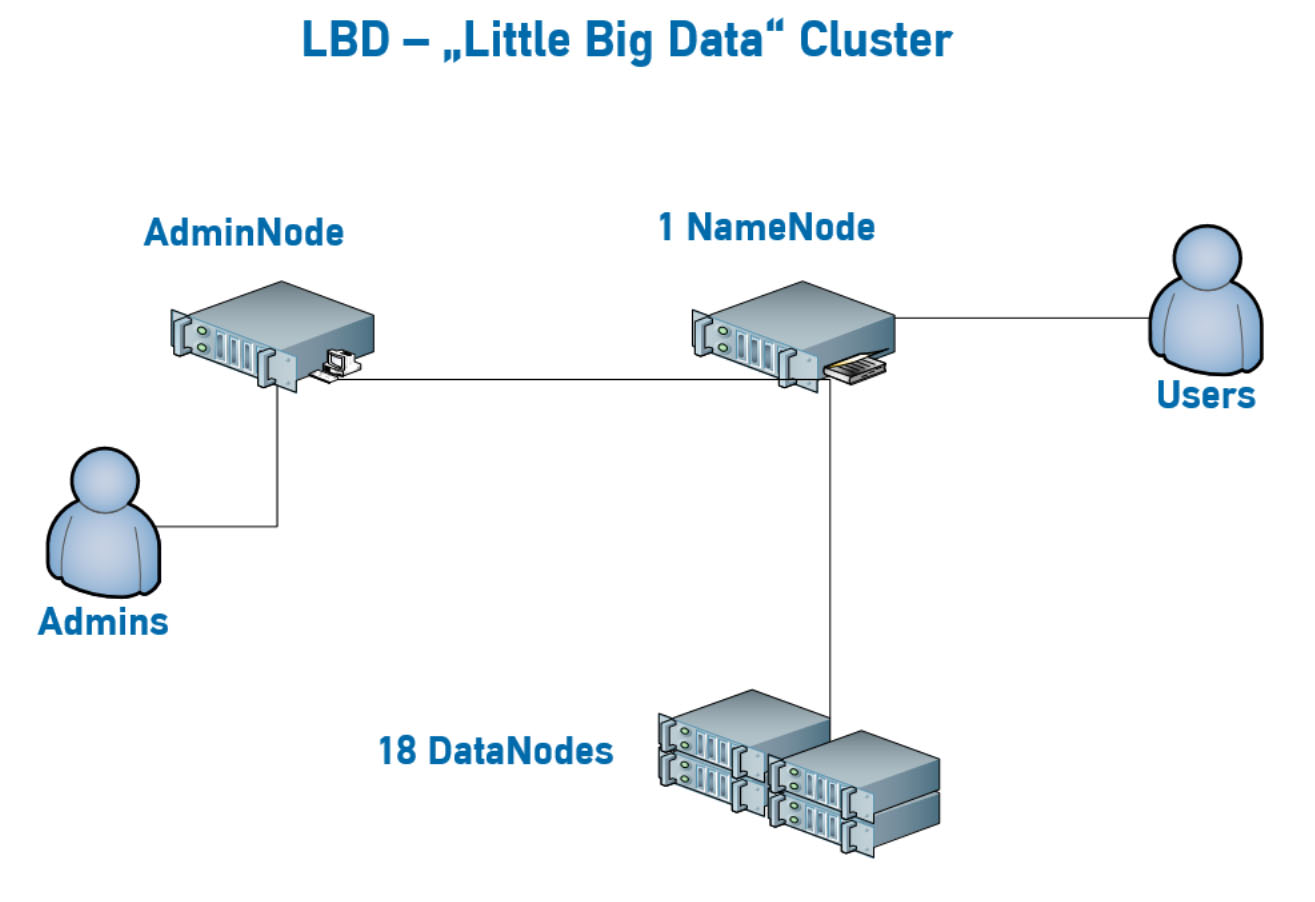

"Little" Big Data (LBD) Cluster

Access

Hardware

LBD has the following hardware setup:

- 2 namenodes

- 18 datanodes

- an administrative server, which serves as the Cloudera Manager server and holds the backup of administrative data

- a ZFS file server for /home with 300TB of storage space

The login node is reachable from within the TU Wien domain at lbd.zserv.tuwien.ac.at. Each node has:

- two Xeon E5-2650 v4 CPUs with 24 virtual cores each (48 cores per node, 864 worker cores in total)

- 256GB RAM (total of 4.5TB memory available to worker nodes)

- four hard disks, each with a capacity of 4TB (total of 16TB per node, 288TB total for worker nodes)

Apart from two extra Ethernet devices for external connections, all nodes have the same hardware configuration. All Ethernet connections (external and inter-node) support a speed of 10Gb/s.
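The cluster totals quoted in the list above follow from the per-node figures. A minimal sketch of that arithmetic, assuming the "worker nodes" are the 18 datanodes (the constant and function names are illustrative only):

```python
# Per-node figures taken from the hardware list above.
DATANODES = 18             # assumption: worker nodes == datanodes
CPUS_PER_NODE = 2
VIRTUAL_CORES_PER_CPU = 24
RAM_GB_PER_NODE = 256
DISKS_PER_NODE = 4
DISK_TB = 4


def cluster_totals(nodes=DATANODES):
    """Return aggregate worker resources for the given number of nodes."""
    cores_per_node = CPUS_PER_NODE * VIRTUAL_CORES_PER_CPU
    disk_tb_per_node = DISKS_PER_NODE * DISK_TB
    return {
        "cores_per_node": cores_per_node,
        "total_cores": nodes * cores_per_node,
        "total_ram_tb": nodes * RAM_GB_PER_NODE / 1024,
        "disk_tb_per_node": disk_tb_per_node,
        "total_disk_tb": nodes * disk_tb_per_node,
    }


totals = cluster_totals()
for name, value in totals.items():
    print(f"{name}: {value}")
```

With 18 nodes this reproduces the 864 cores, 4.5TB of memory and 288TB of disk stated above.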

HDFS configuration

- current version: Hadoop 3

- block size: 128 MiB

- default replication factor: 3
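To get a feeling for what these settings mean for a single file, the following sketch computes how many blocks a file occupies and how much raw disk space its replicas take up. It is a rough estimate under the defaults listed above; the function name `hdfs_footprint` is hypothetical, and per-file settings or file system overhead are not taken into account.

```python
BLOCK_SIZE = 128 * 1024**2   # 128 MiB, from the configuration above
REPLICATION = 3              # default replication factor


def hdfs_footprint(file_size_bytes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Return (number of blocks, raw bytes stored across the cluster)."""
    # Integer ceiling division: a partially filled last block still counts as a block.
    blocks = (file_size_bytes + block_size - 1) // block_size
    # Every byte is stored once per replica; the last block only uses its actual size.
    raw_bytes = file_size_bytes * replication
    return blocks, raw_bytes


one_gib = 1024**3
blocks, raw = hdfs_footprint(one_gib)
print(f"blocks: {blocks}, raw storage: {raw / 1024**3} GiB")
```

By the same reasoning, data stored with the default replication factor uses three times its size in raw disk space on the datanodes.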

Available software

<html>

<style> table {

border-collapse: collapse; width: 100%;

}

td, th {

border: 1px solid #dddddd; text-align: left; padding: 8px;

}

tr:nth-child(even) {

background-color: #dddddd;

} </style>

<table>

<tr> <th>Name</th> <th>Description</th> <th>Status</th> </tr>
<tr> <td>CentOS 7</td> <td>Operating system</td> <td>OK</td> </tr>
<tr> <td>xCAT</td> <td>Deployment environment</td> <td>OK</td> </tr>
<tr> <td>Cloudera Manager</td> <td>Big Data deployment</td> <td>OK</td> </tr>
<tr> <td>Cloudera HDFS</td> <td>Hadoop distributed file system</td> <td>OK</td> </tr>
<tr> <td>Cloudera Accumulo</td> <td>Key/value store</td> <td>OK</td> </tr>
<tr> <td>Cloudera HBase</td> <td>Database on top of HDFS</td> <td>OK</td> </tr>
<tr> <td>Cloudera Hive</td> <td>Data warehouse using SQL</td> <td>OK</td> </tr>
<tr> <td>Cloudera Hue</td> <td>Hadoop user experience, web GUI, SQL analytics workbench</td> <td>OK</td> </tr>
<tr> <td>Cloudera Impala</td> <td>SQL query engine, used by Hue</td> <td>OK</td> </tr>
<tr> <td>Oozie</td> <td>Workflow scheduler system to manage Apache Hadoop jobs, used by Hue</td> <td>OK</td> </tr>
<tr> <td>Cloudera Solr</td> <td>Open source enterprise search platform, used by Hue and by the Key-Value Store Indexer</td> <td>OK</td> </tr>
<tr> <td>Cloudera Key-Value Store Indexer</td> <td>The Key-Value Store Indexer service uses the Lily HBase NRT Indexer to index the stream of records being added to HBase tables. Indexing allows you to query data stored in HBase with the Solr service.</td> <td>OK</td> </tr>
<tr> <td>Cloudera Spark (Spark 2)</td> <td>Cluster-computing framework with Scala 2.10 (2.11)</td> <td>OK</td> </tr>
<tr> <td>Cloudera YARN (MR2 Included)</td> <td>Yet Another Resource Negotiator (cluster management)</td> <td>OK</td> </tr>
<tr> <td>Cloudera ZooKeeper</td> <td>ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services.</td> <td>OK</td> </tr>
<tr> <td>Java 1.8</td> <td>Programming language</td> <td>OK</td> </tr>
<tr> <td>Python 3.6.3 (python3.6), Python 3.4.5 (python3.4), Python 2.7.5 (python2)</td> <td>Programming language</td> <td>OK</td> </tr>
<tr> <td>Anaconda Python (python)</td> <td>export PATH=/home/anaconda3/bin/:$PATH</td> <td>OK</td> </tr>
<tr> <td>Jupyter</td> <td>Notebook, requires Anaconda</td> <td>OK</td> </tr>
<tr> <td>MongoDB</td> <td>Requires disk space, not on all nodes</td> <td>Beta testing</td> </tr>
<tr> <td>Kafka</td> <td>Data stream processing</td> <td>Beta testing</td> </tr>
<tr> <td>Cassandra</td> <td>Requires disk space, not on all nodes</td> <td>TODO</td> </tr>
<tr> <td>Storm</td> <td>Spark Streaming instead?</td> <td>On request</td> </tr>
<tr> <td>Drill</td> <td></td> <td>-</td> </tr>
<tr> <td>Flume</td> <td></td> <td>-</td> </tr>
<tr> <td>Kudu</td> <td></td> <td>-</td> </tr>
<tr> <td>Zeppelin</td> <td></td> <td>-</td> </tr>
<tr> <td>Giraph</td> <td></td> <td>TODO</td> </tr>
</table>

</html>

Jupyter Notebook

Most users use the LBD cluster via Jupyter Notebooks.

To use a Jupyter notebook, connect to https://lbd.zserv.tuwien.ac.at:8000 and log in with your user credentials.

Start a new notebook, e.g. Python3, PySpark3, a terminal, …

A short example: new → PySpark3

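```python
import pyspark
import random

# Create a Spark context for this application
sc = pyspark.SparkContext(appName="Pi")
num_samples = 10000

def inside(p):
    # Draw a random point in the unit square and test whether it
    # lies inside the quarter circle of radius 1
    x, y = random.random(), random.random()
    return x*x + y*y < 1

# Distribute the samples and count those inside the quarter circle
count = sc.parallelize(range(0, num_samples)).filter(inside).count()

# The fraction of hits approximates pi/4
pi = 4 * count / num_samples
print(pi)

# Release the resources held by this context
sc.stop()
```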
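The example above estimates π by Monte Carlo sampling: random points are drawn in the unit square, and the fraction that falls inside the quarter circle of radius 1 approaches π/4. As a sanity check, the same logic can be run without Spark, for instance in a plain Python3 notebook. This is only a sketch; the function name `estimate_pi` and the `seed` parameter are illustrative and not part of the cluster setup.

```python
import random


def estimate_pi(num_samples, seed=None):
    """Estimate pi from num_samples random points in the unit square."""
    rng = random.Random(seed)  # a fixed seed makes the run reproducible
    count = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        # Same test as inside() in the Spark example
        if x * x + y * y < 1:
            count += 1
    return 4 * count / num_samples


print(estimate_pi(10000, seed=0))
```

The Spark version distributes exactly this filter-and-count step across the worker nodes, which only pays off for much larger values of `num_samples`.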