This version is outdated by a newer approved version. This version (2024/02/29 09:29) is a draft.

Approvals: 0/1. The previously approved version (2021/05/27 07:05) is available.

This is an old revision of the document!

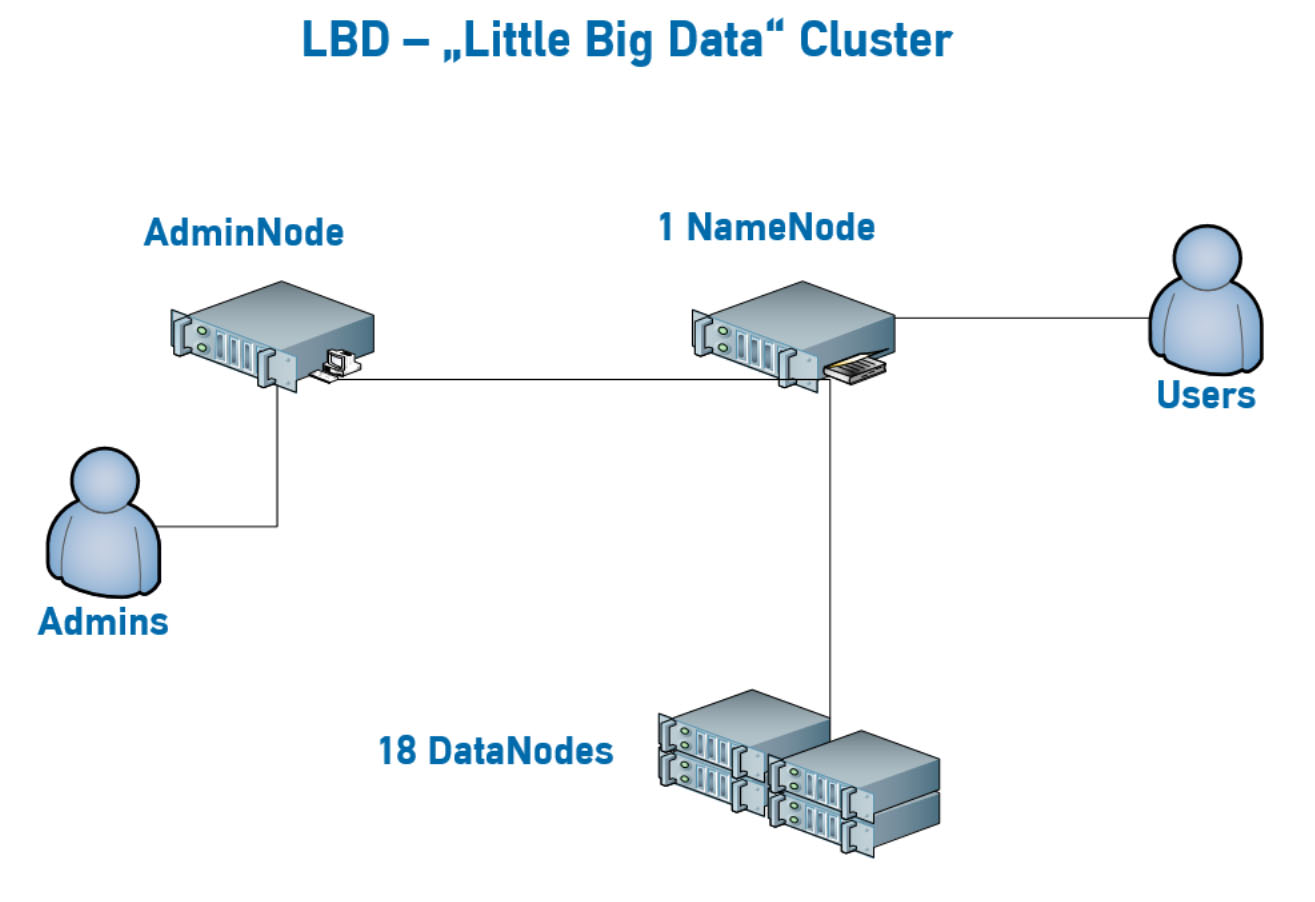

The Big Data Cluster "LBD"

The Big Data Cluster of TU Wien is used for teaching and research and runs an (extended) Hadoop software stack. It is designed to exploit readily available parallelism by automatically parallelizing programs written in Python, Java, Scala, and R.

Typical programs make use of either

- MapReduce, or

- Spark

for parallelization.
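To illustrate the programming model behind both options, here is a minimal single-process sketch of the map, shuffle, and reduce phases for a word count. It uses neither Hadoop nor Spark, and all function names are illustrative assumptions. On the cluster, the framework would distribute these phases across the datanodes.

```python
from collections import defaultdict
from functools import reduce


def map_phase(lines):
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine the values of each key into a single result."""
    return {
        key: reduce(lambda a, b: a + b, values)
        for key, values in groups.items()
    }


def word_count(lines):
    """Run the three phases in sequence on an in-memory list of lines."""
    return reduce_phase(shuffle_phase(map_phase(lines)))


if __name__ == "__main__":
    print(word_count(["big data cluster", "big data"]))
```

Because each line is mapped independently and each key is reduced independently, both phases can run in parallel on different nodes. This is the "easily available parallelism" the cluster is built for.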

Available software

- Rocky Linux

- OpenStack

- Jupyter

- RStudio Server

- Parallel file system: HDFS, Ceph

- Scheduler: YARN

- HBase

- Hive

- Hue

- Kafka

- Oozie

- Solr

- Spark

- ZooKeeper

Access

Hardware

LBD has the following hardware setup:

- 1 namenode

- 18 datanodes

The login nodes are reachable from TUnet, the internal network of TU Wien, via https://lbd.tuwien.ac.at or ssh://login.tuwien.ac.at.

Each of the nodes has

- two Xeon E5-2650 v4 CPUs with 24 virtual cores each (48 cores per node, 864 worker cores in total)

- 256 GB RAM (4.5 TB of memory in total across the worker nodes)

- four hard disks with a capacity of 4 TB each (16 TB per node, 288 TB in total across the worker nodes)
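The aggregate figures follow directly from the per-node figures and the 18 datanodes. The short sketch below reproduces that arithmetic. The constant and function names are illustrative assumptions, and 1 TB of RAM is taken as 1024 GB.

```python
DATANODES = 18          # worker nodes listed above
CPUS_PER_NODE = 2
VCORES_PER_CPU = 24
RAM_GB_PER_NODE = 256
DISKS_PER_NODE = 4
DISK_TB = 4


def cluster_totals(nodes=DATANODES):
    """Return per-node and cluster-wide resource totals for the workers."""
    cores_per_node = CPUS_PER_NODE * VCORES_PER_CPU
    disk_tb_per_node = DISKS_PER_NODE * DISK_TB
    return {
        "cores_per_node": cores_per_node,
        "worker_cores": nodes * cores_per_node,
        "ram_tb": nodes * RAM_GB_PER_NODE / 1024,
        "disk_tb_per_node": disk_tb_per_node,
        "disk_tb": nodes * disk_tb_per_node,
    }


if __name__ == "__main__":
    for name, value in cluster_totals().items():
        print(f"{name}: {value}")
```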

HDFS configuration

- current version: Hadoop 3

- block size: 128 MiB

- default replication factor: 3
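Block size and replication factor determine how a file is laid out in HDFS. The sketch below estimates the number of blocks and the raw disk space for a file of a given size. The function name is a hypothetical helper, not part of any Hadoop API, and the calculation ignores metadata and filesystem overhead.

```python
BLOCK_SIZE = 128 * 1024**2   # 128 MiB, as configured above
REPLICATION = 3              # default replication factor


def hdfs_footprint(file_size_bytes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Estimate (blocks, block replicas, raw bytes) for one file.

    The last block of a file only occupies as much space as it holds,
    so raw usage is the file size times the replication factor.
    """
    blocks = -(-file_size_bytes // block_size)   # integer ceiling division
    replicas = blocks * replication
    raw_bytes = file_size_bytes * replication
    return blocks, replicas, raw_bytes


if __name__ == "__main__":
    one_gib = 1024**3
    print(hdfs_footprint(one_gib))
```

A 1 GiB file is split into 8 blocks, stored as 24 block replicas, and occupies 3 GiB of raw disk. By the same reasoning, if all 288 TB of raw disk were dedicated to HDFS at the default replication factor, roughly 96 TB of user data would fit. The actual figure is lower once reserved space and other storage uses are taken into account.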

Jupyter Notebook

Most users access the LBD cluster through Jupyter notebooks.

A short example using Spark and Python:

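    import random

    import pyspark

    # Create a Spark context for this application
    sc = pyspark.SparkContext(appName="Pi")
    num_samples = 10000

    def inside(p):
        # Draw a random point in the unit square and test whether
        # it lies inside the quarter circle of radius 1
        x, y = random.random(), random.random()
        return x*x + y*y < 1

    # Distribute the samples, keep the hits, and count them
    count = sc.parallelize(range(0, num_samples)).filter(inside).count()
    pi = 4 * count / num_samples
    print(pi)

    # Release the resources held by the context
    sc.stop()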
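The estimator in the Spark example can also be checked locally before submitting work to the cluster. The following sketch runs the same Monte Carlo test in a single process using only the standard library. The function name and the `seed` parameter are illustrative assumptions added for reproducibility.

```python
import random


def estimate_pi(num_samples, seed=None):
    """Estimate pi from the fraction of random points in the unit square
    that fall inside the quarter circle of radius 1."""
    rng = random.Random(seed)
    count = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y < 1:
            count += 1
    return 4 * count / num_samples


if __name__ == "__main__":
    print(estimate_pi(10000, seed=1))
```

The standard error of this estimate shrinks with the square root of the sample count. With 10000 samples, as in the Spark example, it is about 0.016, so only the first one or two decimal places are reliable.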