This version (2018/10/16 13:34) is a draft.


SLURM

- Article written by Markus Stöhr (VSC Team) (last update 2017-10-09 by ms).

Quickstart

script examples/05_submitting_batch_jobs/job-quickstart.sh:

    #!/bin/bash
    #SBATCH -J h5test
    #SBATCH -N 1
    module purge
    module load gcc/5.3 intel-mpi/5 hdf5/1.8.18-MPI
    cp $VSC_HDF5_ROOT/share/hdf5_examples/c/ph5example.c .
    mpicc -lhdf5 ph5example.c -o ph5example
    mpirun -np 8 ./ph5example -c -v

submission:

    $ sbatch job.sh
    Submitted batch job 5250981
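Later steps (checking, cancelling, dependencies) need the job ID, so it helps to capture it at submission time. The following is a minimal sketch that parses the message shown above; the helper name `extract_job_id` is hypothetical and the sample string stands in for real `sbatch` output.

```shell
#!/bin/bash
# Extract the numeric job ID from the line sbatch prints on success.
# extract_job_id is a hypothetical helper, not part of SLURM.
extract_job_id() {
    local message=$1
    # Keep only the last whitespace-separated field of the message.
    echo "${message##* }"
}

# On the cluster this would be: job_id=$(extract_job_id "$(sbatch job.sh)")
job_id=$(extract_job_id "Submitted batch job 5250981")
echo "job id: $job_id"
```

`sbatch` also has a `--parsable` option that prints only the job ID (followed by the cluster name where one applies), which avoids parsing the message text.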

check what is going on:

squeue -u $USER

    JOBID    PARTITION  NAME    USER    ST  TIME  NODES  NODELIST(REASON)
    5250981  mem_0128   h5test  markus  R   0:00  2      n23-[018-019]

Output files:

ParaEg0.h5 ParaEg1.h5 slurm-5250981.out

try on .h5 files:

h5dump

cancel jobs:

scancel <job_id>

or

scancel -n <job_name>

or

scancel -u $USER

Basic concepts

Queueing system

- job/batch script:

- shell script that does everything needed to run your calculation

- independent of queueing system

- use simple scripts (max 50 lines, i.e. put complicated logic elsewhere)

- load modules from scratch (purge, then load)

- tell scheduler where/how to run jobs:

- #nodes

- nodetype

- …

- scheduler manages job allocation to compute nodes

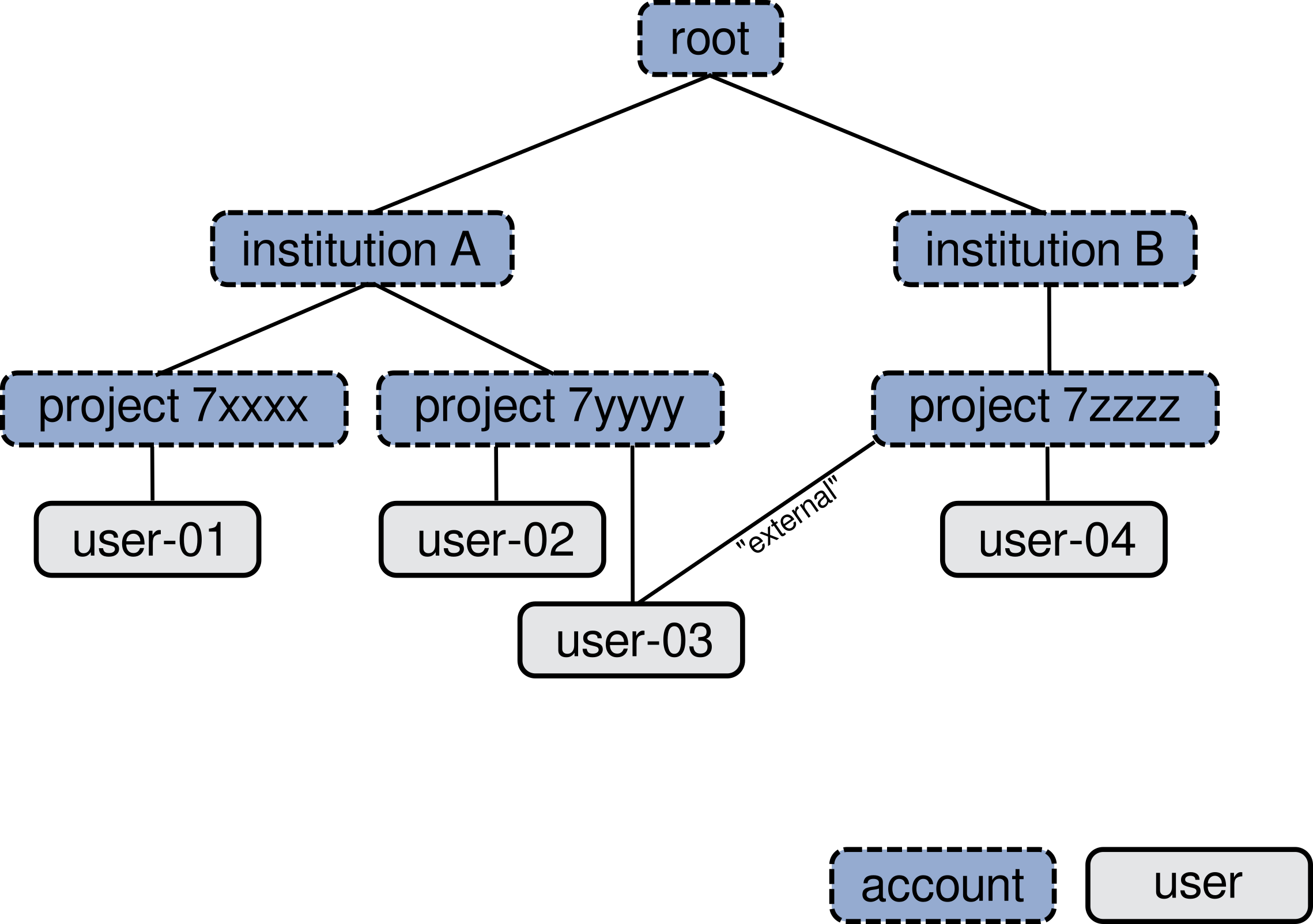

SLURM: Accounts and Users

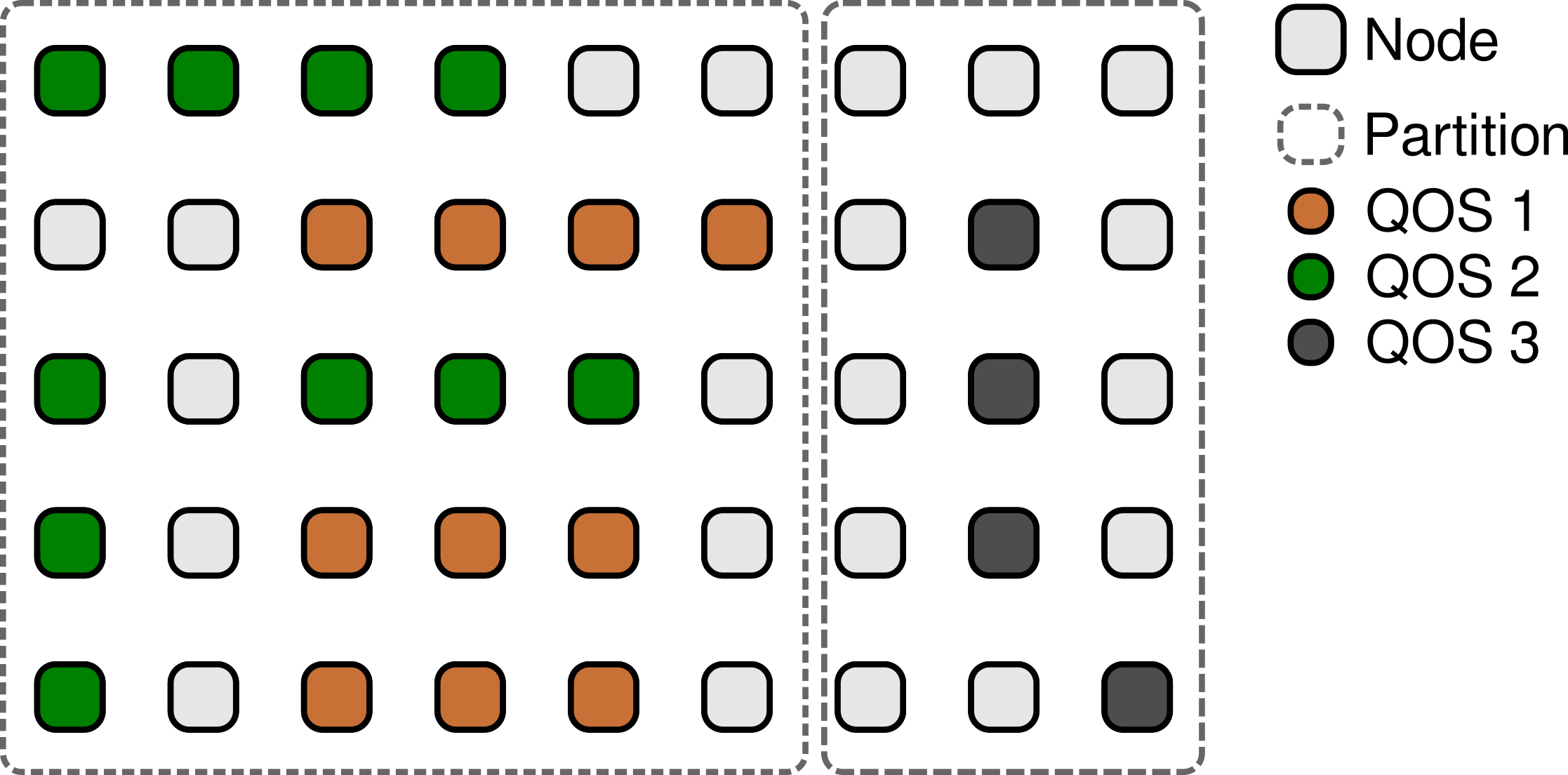

SLURM: Partition and Quality of Service

VSC-3 Hardware Types

| partition | RAM (GB) | CPU | Cores | IB (HCA) | #Nodes |
|---|---|---|---|---|---|
| mem_0064* | 64 | 2x Intel E5-2650 v2 @ 2.60GHz | 2×8 | 2xQDR | 1849 |
| mem_0128 | 128 | 2x Intel E5-2650 v2 @ 2.60GHz | 2×8 | 2xQDR | 140 |
| mem_0256 | 256 | 2x Intel E5-2650 v2 @ 2.60GHz | 2×8 | 2xQDR | 50 |
| vsc3plus_0064 | 64 | 2x Intel E5-2660 v2 @ 2.20GHz | 2×10 | 1xFDR | 816 |
| vsc3plus_0256 | 256 | 2x Intel E5-2660 v2 @ 2.20GHz | 2×10 | 1xFDR | 48 |
| knl | 208 | Intel Phi 7210 @ 1.30GHz | 1×64 | 1xQDR | 4 |
| haswell | 128 | 2x Intel E5-2660 v3 @ 2.60GHz | 2×10 | 1xFDR | 8 |
| binf | 512 - 1536 | 2x Intel E5-2690 v4 @ 2.60GHz | 2×14 | 1xFDR | 17 |
| amd | 128, 256 | AMD EPYC 7551, 7551P | 32,64,128 | 1xFDR | 3 |

* default partition, QDR: Intel Infinipath, FDR: Mellanox ConnectX-3

effective: 10/2018

- + GPU nodes (see later)

- specify partition in job script:

#SBATCH -p <partition>


- Display information about partitions and their nodes:

    sinfo -o %P
    scontrol show partition mem_0064
    scontrol show node n01-001

QOS-Account/Project assignment

1.+2.:

sqos -acc

default_account: p70824

account: p70824

default_qos: normal_0064

qos: devel_0128

goodluck

gpu_gtx1080amd

gpu_gtx1080multi

gpu_gtx1080single

gpu_k20m

gpu_m60

knl

normal_0064

normal_0128

normal_0256

normal_binf

vsc3plus_0064

vsc3plus_0256

QOS-Partition assignment

3.:

sqos

qos_name total used free walltime priority partitions

=========================================================================

normal_0064 1782 1173 609 3-00:00:00 2000 mem_0064

normal_0256 15 24 -9 3-00:00:00 2000 mem_0256

normal_0128 93 51 42 3-00:00:00 2000 mem_0128

devel_0128 10 20 -10 00:10:00 20000 mem_0128

goodluck 0 0 0 3-00:00:00 1000 vsc3plus_0256,vsc3plus_0064,amd

knl 4 1 3 3-00:00:00 1000 knl

normal_binf 16 5 11 1-00:00:00 1000 binf

gpu_gtx1080multi 4 2 2 3-00:00:00 2000 gpu_gtx1080multi

gpu_gtx1080single 50 18 32 3-00:00:00 2000 gpu_gtx1080single

gpu_k20m 2 0 2 3-00:00:00 2000 gpu_k20m

gpu_m60 1 1 0 3-00:00:00 2000 gpu_m60

vsc3plus_0064 800 781 19 3-00:00:00 1000 vsc3plus_0064

vsc3plus_0256 48 44 4 3-00:00:00 1000 vsc3plus_0256

gpu_gtx1080amd 1 0 1 3-00:00:00 2000 gpu_gtx1080amd

naming convention:

| QOS | Partition |
|---|---|
| *_0064 | mem_0064 |

Specification in job script

    #SBATCH --account=xxxxxx
    #SBATCH --qos=xxxxx_xxxx
    #SBATCH --partition=mem_xxxx

For any omitted line the corresponding default is used (see the previous slides); the default partition is “mem_0064”.

Sample batch job

default:

    #!/bin/bash
    #SBATCH -J jobname
    #SBATCH -N number_of_nodes
    do_my_work

job is submitted to:

- partition mem_0064

- qos normal_0064

- default account

explicit:

    #!/bin/bash
    #SBATCH -J jobname
    #SBATCH -N number_of_nodes
    #SBATCH --partition=mem_xxxx
    #SBATCH --qos=xxxxx_xxxx
    #SBATCH --account=xxxxxx
    do_my_work

- must be a shell script (first line!)

- ‘#SBATCH’ for marking SLURM parameters

- environment variables are set by SLURM for use within the script (e.g. SLURM_JOB_NUM_NODES)
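As an illustration of using these variables inside a job script, the sketch below derives a total task count from the job environment. The function name `total_tasks` and the fallback values (1 node, 16 tasks per node) are assumptions, used only so the snippet also runs outside of SLURM where the variables are unset.

```shell
#!/bin/bash
# Total number of tasks = nodes * tasks per node, read from the job
# environment. The defaults are assumptions for testing outside SLURM.
total_tasks() {
    local nodes=${SLURM_JOB_NUM_NODES:-1}
    local per_node=${SLURM_NTASKS_PER_NODE:-16}
    echo $(( nodes * per_node ))
}

echo "running $(total_tasks) tasks"
```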

Job submission

sbatch <SLURM_PARAMETERS> job.sh <JOB_PARAMETERS>

- parameters are specified as in job script

- precedence: sbatch parameters override parameters in job script

- be careful to place SLURM parameters before the job script: everything after the script name is passed to the script as <JOB_PARAMETERS>
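To show the script side of `<JOB_PARAMETERS>`, here is a small sketch: a throwaway job script that reads its positional arguments. The file contents, the job name `argdemo`, and the arguments `data.in 10` are made up for illustration; the script is run with plain `bash` here so the example works without a scheduler.

```shell
#!/bin/bash
# Write a tiny job script that uses its positional parameters.
job_script=$(mktemp)
cat > "$job_script" <<'EOF'
#!/bin/bash
#SBATCH -J argdemo
#SBATCH -N 2
echo "input=$1 steps=$2"
EOF

# On the cluster one would submit it like this (not executed here):
#   sbatch -N 1 job.sh data.in 10
# -N 1 comes before the script, so it is a SLURM parameter and overrides
# the "#SBATCH -N 2" line; data.in and 10 end up in $1 and $2.
result=$(bash "$job_script" data.in 10)
echo "$result"
rm -f "$job_script"
```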

Exercises

- try these commands and find out which partition has to be used if you want to run in QOS ‘devel_0128’:

    sqos
    sqos -acc

- find out, which nodes are in the partition that allows running in ‘devel_0128’. Further, check how much memory these nodes have:

    scontrol show partition ...
    scontrol show node ...

- submit a one node job to QOS devel_0128 with the following commands:

    hostname
    free

Bad job practices

- looped job submission (takes a long time):

for i in {1..1000}

do

sbatch job.sh $i

done

- loop in job (sequential mpirun commands):

for i in {1..1000}

do

mpirun my_program $i

done

Array job

- run similar, independent jobs at once that can be distinguished by one parameter

- each task will be treated as a separate job

- example (job_array.sh, sleep.sh), start=1, end=30, stepwidth=7:

    #!/bin/sh
    #SBATCH -J array
    #SBATCH -N 1
    #SBATCH --array=1-30:7
    ./sleep.sh $SLURM_ARRAY_TASK_ID

- computed tasks: 1, 8, 15, 22, 29

    5605039_[15-29]  mem_0064  array  markus  PD
    5605039_1        mem_0064  array  markus  R
    5605039_8        mem_0064  array  markus  R

useful variables within job:

    SLURM_ARRAY_JOB_ID
    SLURM_ARRAY_TASK_ID
    SLURM_ARRAY_TASK_STEP
    SLURM_ARRAY_TASK_MAX
    SLURM_ARRAY_TASK_MIN

limit the number of simultaneously running jobs to 2:

#SBATCH --array=1-30:7%2
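To check which task IDs a given `--array=<start>-<end>:<step>` specification produces before submitting, the expansion can be reproduced with `seq`. This is only a sketch; the function name `array_task_ids` is hypothetical.

```shell
#!/bin/bash
# Print the task IDs that --array=<start>-<end>:<step> expands to.
array_task_ids() {
    local start=$1 end=$2 step=$3
    # seq prints one number per line; the unquoted expansion joins
    # them with single spaces.
    echo $(seq "$start" "$step" "$end")
}

array_task_ids 1 30 7    # matches the computed tasks listed above
```

For the example above this prints `1 8 15 22 29`. The `%2` suffix does not change this list, only how many of these tasks run at the same time.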

Single core

- use a complete compute node for several tasks at once

- example: job_singlenode_manytasks.sh:

    ...
    max_num_tasks=16
    ...
    for i in `seq $task_start $task_increment $task_end`
    do
      ./$executable $i &
      check_running_tasks #sleeps as long as max_num_tasks are running
    done
    wait

- ‘&’: start binary in background, script can continue

- ‘wait’: waits for all background processes; without it the script would finish, and the job would end, while tasks are still running
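The excerpt above leaves out `check_running_tasks`. One possible way to write such a helper is sketched below; it is an assumption about how it could work, not the code from job_singlenode_manytasks.sh. The tasks are replaced by short `sleep` commands and `max_num_tasks` is set to 2 so the demo finishes quickly.

```shell
#!/bin/bash
max_num_tasks=2                  # assumed small value for the demo
workdir=$(mktemp -d)

# Hypothetical stand-in for the helper used above: block while
# max_num_tasks background tasks are still running.
check_running_tasks() {
    while [ $(( $(jobs -rp | wc -l) )) -ge "$max_num_tasks" ]; do
        sleep 0.1
    done
}

for i in $(seq 1 6); do
    # Each "task" sleeps briefly, then records that it finished.
    ( sleep 0.2; echo "task $i done" > "$workdir/$i.out" ) &
    check_running_tasks
done
wait                             # do not exit before the last tasks finish

finished=$(( $(ls "$workdir" | wc -l) ))
echo "finished tasks: $finished"
rm -rf "$workdir"
```

Polling with `jobs -rp` keeps the sketch short; the polling interval of 0.1 s is arbitrary.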

Array job + single core

...

#SBATCH --array=1-100:32

...

task_start=$SLURM_ARRAY_TASK_ID

task_end=$(( $SLURM_ARRAY_TASK_ID + $SLURM_ARRAY_TASK_STEP -1 ))

if [ $task_end -gt $SLURM_ARRAY_TASK_MAX ]; then

task_end=$SLURM_ARRAY_TASK_MAX

fi

task_increment=1

...

for i in `seq $task_start $task_increment $task_end`

do

./$executable $i &

check_running_tasks

done

wait
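The range arithmetic above can be checked in isolation. The sketch below wraps it in a hypothetical function `task_range` and takes the step and the upper limit as plain arguments (32 and 100 here, following `--array=1-100:32`); inside a real job these values would come from the `SLURM_ARRAY_TASK_*` variables.

```shell
#!/bin/bash
# Print the first and last task index handled by one array task.
task_range() {
    local task_id=$1 step=$2 max=$3
    local first=$task_id
    local last=$(( task_id + step - 1 ))
    # The last chunk may be shorter than the step width.
    if [ "$last" -gt "$max" ]; then
        last=$max
    fi
    echo "$first $last"
}

for id in 1 33 65 97; do
    echo "array task $id handles $(task_range "$id" 32 100)"
done
```

With these inputs the four array tasks cover 1 to 32, 33 to 64, 65 to 96 and 97 to 100, so every index is processed exactly once.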

Exercises

- files are located in folder examples/05_submitting_batch_jobs

- download or copy sleep.sh and find out what it is doing

- run job_array.sh with tasks 4-20 and stepwidth 3

- start jobs for job_singlenode_manytasks.sh with max_num_tasks=16 and max_num_tasks=8; compare the job runtimes

Job/process setup

- normal jobs:

| #SBATCH option | job environment variable |
|---|---|
| -N | SLURM_JOB_NUM_NODES |
| --ntasks-per-core | SLURM_NTASKS_PER_CORE |
| --ntasks-per-node | SLURM_NTASKS_PER_NODE |
| --ntasks-per-socket | SLURM_NTASKS_PER_SOCKET |
| --ntasks, -n | SLURM_NTASKS |

- emails:

    #SBATCH --mail-user=yourmail@example.com
    #SBATCH --mail-type=BEGIN,END

- constraints:

    #SBATCH -C, --constraint=<list>
    #SBATCH --gres=<list>
    #SBATCH -t, --time=<time>
    #SBATCH --time-min=<time>

Valid time formats:

- MM

- [HH:]MM:SS

- DD-HH[:MM[:SS]]

- backfilling:

- specify a ‘--time’ or ‘--time-min’ that is realistic for your job

- short runtimes may enable the scheduler to use idle nodes waiting for a large job

- get the remaining running time for your job:

squeue -h -j $SLURM_JOBID -o %L
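A job that wants to act on its remaining time (for example, to write a checkpoint before the limit) needs that value as a number. The sketch below converts the time formats listed above into seconds. The function name `slurm_time_to_seconds` is hypothetical, and the sketch assumes a purely numeric input; values such as UNLIMITED or NOT_SET, which `squeue` may print for `%L`, are not handled.

```shell
#!/bin/bash
# Convert MM, MM:SS, HH:MM:SS, DD-HH, DD-HH:MM or DD-HH:MM:SS to seconds.
slurm_time_to_seconds() {
    local t=$1 days=0 h=0 m=0 s=0
    if [[ $t == *-* ]]; then
        # Day part present: the fields after the dash start with hours.
        days=${t%%-*}
        IFS=: read -r h m s <<< "${t#*-}"
    else
        case $t in
            *:*:*) IFS=: read -r h m s <<< "$t" ;;
            *:*)   IFS=: read -r m s <<< "$t" ;;
            *)     m=$t ;;
        esac
    fi
    # 10# forces base 10 so values like "08" are not read as octal.
    echo $(( 10#$days * 86400 + 10#${h:-0} * 3600 + 10#${m:-0} * 60 + 10#${s:-0} ))
}

slurm_time_to_seconds 3-00:00:00   # walltime of the normal_* QOS
slurm_time_to_seconds 00:10:00     # walltime of devel_0128
```

Inside a job it could be combined with the command above, e.g. `slurm_time_to_seconds "$(squeue -h -j $SLURM_JOBID -o %L)"`.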

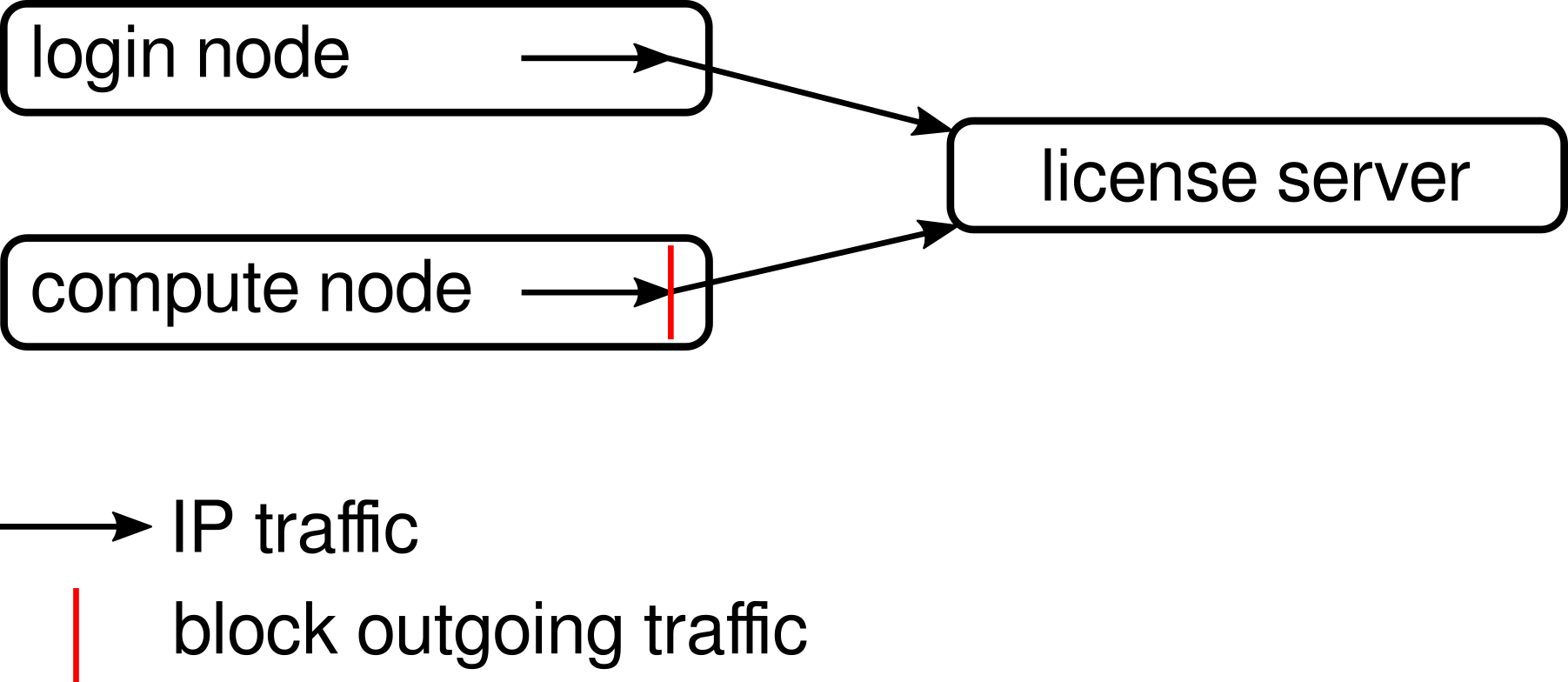

Licenses

slic

Within the job script add the flags as shown with ‘slic’, e.g. for using both Matlab and Mathematica:

#SBATCH -L matlab@vsc,mathematica@vsc

Intel licenses are needed only for compiling code, not for running it!

Reservations of compute nodes

- core-h accounting is done for the full reservation time

- contact us, if needed

- reservations are named after the project id

- check for reservations:

scontrol show reservations

- use it:

#SBATCH --reservation=

Exercises

- check for available reservations. If there is one available, use it

- specify an email address that notifies you when the job has finished

- run the following matlab code in your job:

echo "2+2" | matlab

MPI + NTASKS_PER_NODE + pinning

- understand what your code is doing and place the processes correctly

- use only a few processes per node if memory demand is high

- details for pinning: https://wiki.vsc.ac.at/doku.php?id=doku:vsc3_pinning

Example: Two nodes with two mpi processes each:

srun

    #SBATCH -N 2
    #SBATCH --tasks-per-node=2
    srun --cpu_bind=map_cpu:0,8 ./my_mpi_program

mpirun

    #SBATCH -N 2
    #SBATCH --tasks-per-node=2
    export I_MPI_PIN_PROCESSOR_LIST=0,8 # valid for Intel MPI only!
    mpirun ./my_mpi_program
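The core list `0,8` in both examples spreads two processes evenly over a 16-core node. A sketch of how such a list could be generated for other task counts follows. The function name `pin_list` is hypothetical, and it assumes cores are numbered consecutively from 0 and that even spacing is the desired placement; the actual core numbering depends on the node type, so check the pinning page linked above before relying on it.

```shell
#!/bin/bash
# Build a comma-separated list of evenly spaced core IDs.
pin_list() {
    local tasks=$1 cores=$2
    local stride=$(( cores / tasks )) list="" i
    for (( i = 0; i < tasks; i++ )); do
        # Prepend a comma to every entry except the first one.
        list+="${list:+,}$(( i * stride ))"
    done
    echo "$list"
}

pin_list 2 16    # two processes on a 2x8-core node
pin_list 4 16    # four processes on the same node
```

The first call reproduces the `0,8` used above; the result could be passed on as `--cpu_bind=map_cpu:$(pin_list 2 16)`.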

Job dependencies

1. Submit first job and get its <job_id>

2. Submit dependent job (and get <job_id>):

    #!/bin/bash
    #SBATCH -J jobname
    #SBATCH -N 2
    #SBATCH -d afterany:<job_id>
    srun ./my_program

3. Continue at 2. for further dependent jobs
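The submit-then-depend cycle described here can be scripted. The sketch below chains several submissions, passing each job ID on as the dependency of the next one via `--dependency=afterany:<job_id>` (the long form of `-d`) on the command line. To keep it runnable without a scheduler, `fake_sbatch` is a made-up stand-in that only prints invented job IDs; `submit_chain` and the `SUBMIT` variable are hypothetical names as well.

```shell
#!/bin/bash
# Stand-in for sbatch so the sketch runs anywhere: prints 1001 for a job
# without dependency, otherwise the dependency's ID plus one.
fake_sbatch() {
    local arg prev=1000
    for arg in "$@"; do
        case $arg in
            --dependency=afterany:*) prev=${arg##*:} ;;
        esac
    done
    echo $(( prev + 1 ))
}

SUBMIT=fake_sbatch    # on the cluster: SUBMIT=sbatch

# Submit <count> copies of <script>, each starting after the previous one.
submit_chain() {
    local script=$1 count=$2 job_id="" i
    for (( i = 1; i <= count; i++ )); do
        if [ -z "$job_id" ]; then
            job_id=$("$SUBMIT" --parsable "$script")
        else
            job_id=$("$SUBMIT" --parsable --dependency=afterany:"$job_id" "$script")
        fi
        echo "step $i: job $job_id"
    done
}

submit_chain job.sh 3
```

`--parsable` makes `sbatch` print only the job ID, which is what the next submission needs; on a multi-cluster setup the output may also carry a cluster name that would have to be stripped first.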