This version (2017/10/09 14:08) is a draft.

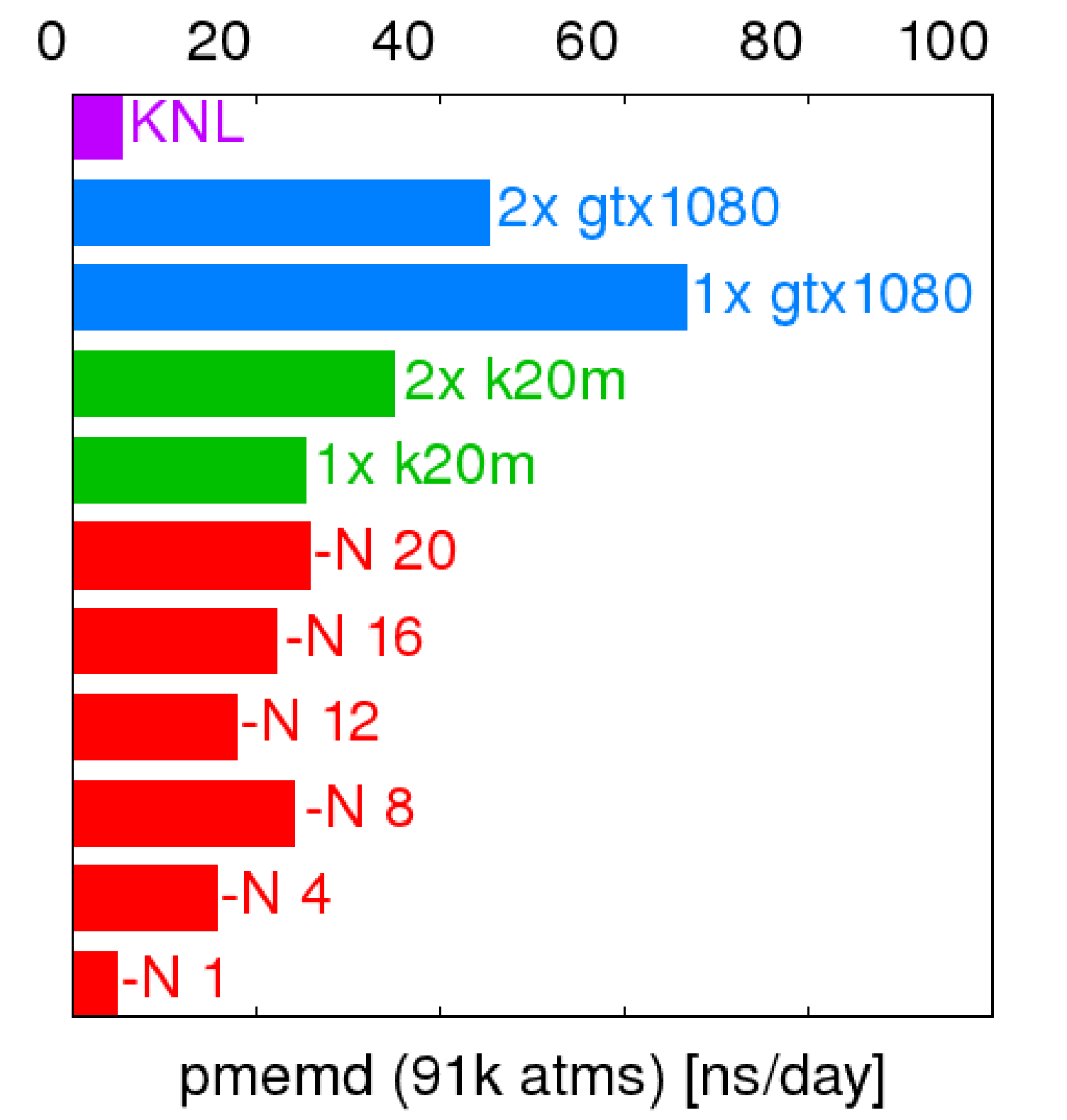

Special types of hardware (GPUs, KNLs) available & how to access them

- Article written by Siegfried Höfinger (VSC Team) (last update 2017-04-27 by sh).

TOP500 List Nov 2016


Components on VSC-3


Working on GPU nodes

Interactive mode

1. salloc -N 1 -p gpu --qos=gpu_compute -C gtx1080 --gres=gpu:1 (...perhaps -L intel@vsc)

2. squeue -u training

3. srun -n 1 hostname (...while still on the login node!)

4. ssh n25-012 (...or whichever node was assigned)

5. module load cuda/8.0.27

cd ~/examples/09_special_hardware/gpu_gtx1080/matrixMul

nvcc ./matrixMul.cu

./a.out

cd ~/examples/09_special_hardware/gpu_gtx1080/matrixMulCUBLAS

nvcc matrixMulCUBLAS.cu -lcublas

./a.out

6. nvidia-smi

7. /opt/sw/x86_64/glibc-2.17/IntelXeonE51620v3/cuda/8.0.27/NVIDIA_CUDA-8.0_Samples/1_Utilities/deviceQuery/deviceQuery
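Steps 2 to 4 can be combined so that the node name does not have to be read off by hand. The following is a minimal sketch, assuming the allocation from step 1 is the user's only job; the helper name `assigned_nodes` is hypothetical, while `-h` (no header) and `-o %N` (node list) are standard `squeue` options.

```shell
# Print the node list of every job owned by the current user
# (hypothetical helper; one line per job, no header line).
assigned_nodes() {
    squeue -u "${USER:-$(id -un)}" -h -o '%N'
}

# Only meaningful on the cluster; skipped where SLURM is not installed.
if command -v squeue >/dev/null 2>&1; then
    node=$(assigned_nodes | head -n 1)
    echo "assigned node: ${node:-none}"
    # next step would be: ssh "$node"
fi
```

With more than one job in the queue, the first line is not necessarily the GPU allocation, so the full output of `assigned_nodes` should be checked in that case.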


Working on GPU nodes cont.

SLURM submission

    #!/bin/bash
    # usage: sbatch ./gpu_test.scrpt
    #
    #SBATCH -J gtx1080
    #SBATCH -N 1
    #SBATCH --partition=gpu
    #SBATCH --qos=gpu_compute
    #SBATCH -C gtx1080
    #SBATCH --gres=gpu:1

    module purge
    module load cuda/8.0.27

    nvidia-smi
    /opt/sw/x86_64/glibc-2.17/IntelXeonE51620v3/cuda/8.0.27/NVIDIA_CUDA-8.0_Samples/1_Utilities/deviceQuery/deviceQuery
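Submitting the script and locating its output might look as follows. This is a sketch only: the helper `job_id_from_sbatch` is a hypothetical name, and it assumes the script does not set its own output file, so that SLURM writes to its default `slurm-<jobid>.out` in the submission directory.

```shell
# Extract the job id from sbatch's "Submitted batch job <id>" message on stdin
# (hypothetical helper).
job_id_from_sbatch() {
    awk '/^Submitted batch job/ { print $4 }'
}

# Only runs where sbatch and the job script are both available.
if command -v sbatch >/dev/null 2>&1 && [ -f ./gpu_test.scrpt ]; then
    jobid=$(sbatch ./gpu_test.scrpt | job_id_from_sbatch)
    squeue -j "$jobid"                      # check the state of the job
    echo "output file: slurm-${jobid}.out"  # SLURM's default output name
fi
```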

Exercise/Example/Problem:

Using interactive mode or batch submission, figure out whether ECC is enabled on GPUs of type gtx1080.
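One possible starting point for the exercise is the query interface of `nvidia-smi`, which can report the current ECC mode per GPU. The sketch below assumes it is run on an allocated GPU node; `summarize_ecc` is a hypothetical helper that only tidies the CSV output.

```shell
# Turn "name, mode" CSV lines from stdin into "name: mode"
# (hypothetical helper, formatting only).
summarize_ecc() {
    while IFS=, read -r name mode; do
        mode="${mode# }"               # drop the space after the comma
        printf '%s: %s\n' "$name" "$mode"
    done
}

if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=name,ecc.mode.current --format=csv,noheader | summarize_ecc
else
    echo "nvidia-smi not found; run this on a GPU node" >&2
fi
```

The same information also appears in the ECC section of `nvidia-smi -q -d ECC`.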


Working on KNL nodes

Interactive mode

1. salloc -N 1 -p knl --qos=knl -C knl -L intel@vsc

2. squeue -u training

3. srun -n 1 hostname

4. ssh n25-050 (...or whichever node was assigned)

5. module purge

6. module load intel/17.0.2

cd ~/examples/09_special_hardware/knl

icc -xHost -qopenmp sample.c

export OMP_NUM_THREADS=16

./a.out
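To see how the OpenMP sample behaves with different thread counts, the last two commands can be wrapped in a loop. This is a sketch under assumptions: `run_with_threads` is a hypothetical helper, and the counts 16, 32 and 64 are illustrative values, not recommendations for this hardware.

```shell
# Run a command with OMP_NUM_THREADS set only for that one invocation
# (hypothetical helper): run_with_threads <count> <command> [args...]
run_with_threads() {
    threads=$1
    shift
    OMP_NUM_THREADS="$threads" "$@"
}

# Illustrative thread counts; only runs if the compiled sample is present.
if [ -x ./a.out ]; then
    for t in 16 32 64; do
        echo "OMP_NUM_THREADS=$t"
        run_with_threads "$t" ./a.out
    done
fi
```

Setting the variable per invocation avoids leaving a stale `export` in the interactive shell.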


Working on KNL nodes cont.

SLURM submission

    #!/bin/bash
    # usage: sbatch ./knl_test.scrpt
    #
    #SBATCH -J knl
    #SBATCH -N 1
    #SBATCH --partition=knl
    #SBATCH --qos=knl
    #SBATCH -C knl
    #SBATCH -L intel@vsc

    module purge
    module load intel/17.0.1

    cat /proc/cpuinfo
    export OMP_NUM_THREADS=16
    ./a.out

Exercise/Example/Problem:

Given our KNL model, can you determine the current level of hyperthreading, i.e. 2x, 3x, 4x, or some other factor?
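The `cat /proc/cpuinfo` output from the batch script already contains what is needed: on x86 Linux, `siblings` is the number of logical CPUs per package and `cpu cores` the number of physical cores per package, so their ratio is the number of hardware threads per core. A minimal sketch, with `threads_per_core` as a hypothetical helper name:

```shell
# Print siblings / cpu cores from a cpuinfo-style file
# (hypothetical helper; defaults to /proc/cpuinfo).
threads_per_core() {
    awk -F: '
        /^siblings/  { s = $2 + 0 }
        /^cpu cores/ { c = $2 + 0 }
        END { if (c > 0) print s / c }
    ' "${1:-/proc/cpuinfo}"
}

# Run on the KNL node itself, not on the login node.
if [ -r /proc/cpuinfo ]; then
    threads_per_core
fi
```

`lscpu` reports the same quantity in its "Thread(s) per core" line.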
