This version (2020/10/20 09:13) is a draft.

Approvals: 0/1

Approvals: 0/1

Storage Technologies - Welcome

“Computers are like Old Testament gods; lots of rules and no mercy.” (Joseph Campbell)

<!– “Simple things should be simple. Complicated things should be possible.” (Alan Kay) –!>

<!– “Computer sciene is not about machines, in the same way that astronomy is not about telescopes. […] Science is not about tools, it is about how we use them and what we find out when we do.” (Michael R. Fellows) –!>

Storage Technologies - Contents

- Hardware Basics

- Memory Hierarchy

- Storage Devices

- Flash-Memories

- Harddisks

- Magnetic Tapes

- Multi Tiered Storages / Hierarchical Storage Systems

- File Systems

- Local

- Network

- Distributed

Storage Technologies - Hardware Basics

Storage Technologies - Hardware Basics

- CPU

- Central Processing Unit

- Controls Operation and performs data processing

- Registers and Caches for fast data access

- Main Memory

- Stores data and programs

- Typically volatile

- I/O Modules

- Move data between CPU/Memory and external devices

Storage Technologies - Hardware Basics (II)

- System Bus

- Provides Communication among processor, memory and I/O modules

- ISA, PCI, AGP, PCI-Express, …

- External Devices

- I/O Controllers (HBA / RAID)

- Network Controllers (Ethernet, Fibre Channel, Infiniband)

- Human Interface Devices (Keyboard, Mouse, Screen, etc.)

Storage Technologies - Direct Memory Access

- Traditionally copying of data from/to devices was done with cpu registers

- Costs a lot of clock cycles that could be used for processing (up to 40 clock cycles per word)

- DMA - Direct Memory Access

- DMA-C is integrated in modern chipsets

- Moves data from external devices to memory and vice versa

- Informs the system when copying is done (interrupt)

- Still some cycles needed for communication with the controller

- Performance gets better the more data is transferred. Byte-wise DMA is a bad idea.

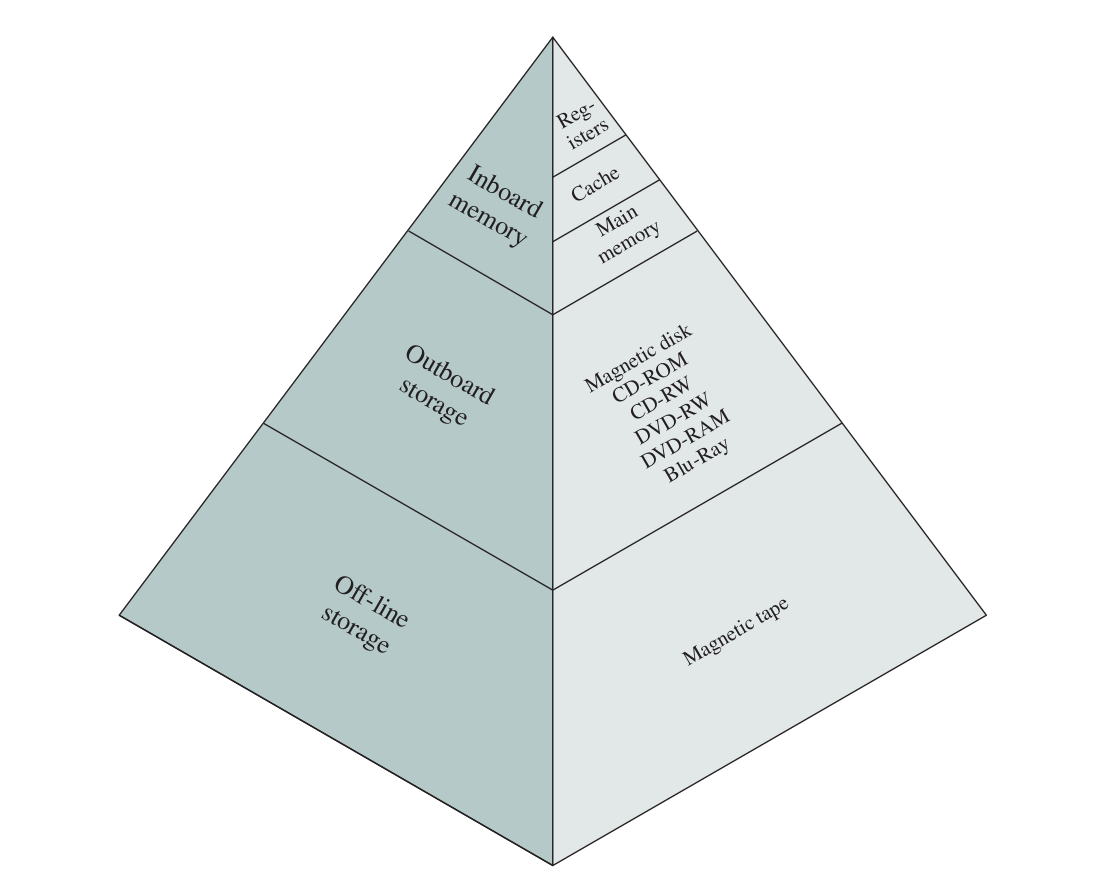

Memory Hierarchy (I)

Memory Hierarchy (II)

- Inboard Memory

- Registers

- Caches

- RAM

- Outboard Storage

- Flash-Drives

- Disks

- Blu-Ray / DVD / CDRom

- Off-Line Storage

- Magnetic Tapes

Memory Hierarchy (III)

- Why?

- SRAM is expensive

- Trade-off among speed, cost, size, and power consumption

- Strategies

- Caching

- Prefetching

- Need data locality

Memory Hierarchy (IV)

- As one goes down the hierarchy…

- Decreasing cost per bit

- Increasing capacity

- Decreasing frequency of access

- Increasing access time

Memory Hierarchy (V)

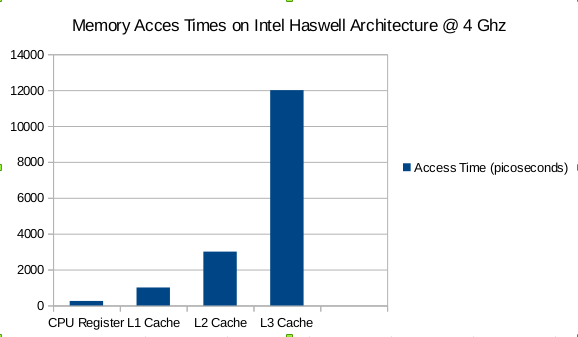

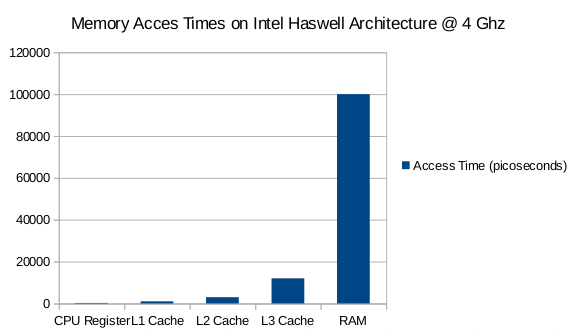

- Memory Access Times on Intel Haswell Architecture 1 Clock Cycle = 250 picoseconds @ 4 Ghz (Movement at speed of light = 7.5 cm per 250 picoseconds)

- Processor Registers

- 1 Clock Cycle (250 ps)

- Cache (L1)

- Access Time ~4-5 Clock Cycles (~ 1 ns)

- Cache (L2)

- Access Time ~12 Clock Cycles (~ 3 ns)

- Cache (L3)

- Access Time ~36-58 Clock Cycles ( ~ 12 ns)

- RAM

- Access Time ~30-60 Clock Cycles + 50-100 ns ( ~ 70 - 120 ns)

Memory Access Times

Memory Access Times (II)

Memory Hierarchy (VI)

- Storage Devices Typical Access Times

- NVMe Flash Memory ~ 6 µs (@ 150’000 IOPS)

- SAS Magnetical Disk ~ 2-3 ms (@ 350 IOPS)

- Magnetical Tape milliseconds up to many seconds

Caching

Storage Technologies - Cache Memory

- Acts as a buffer between 2 memory tiers

- Modern CPUs utilize 3 levels of caches

- Level 1 split into instruction and data cache. Separate for each core.

- Level 2 data and instruction cache. Separate for each core.

- Level 3 data and instruction cache. Shared among all cores on the die.

- Benefits both throughput and latency

- Different Caching Strategies for different purposes

Caching Strategies

- Cache Hit vs. Cache Miss

- Cache Hit Ratio

- Replacement Strategies / Associativity

- Direct Mapped

- N-Way Set Associative (But: Increased N means more potential locations need to be searched)

- Fully Associative

- Write Strategies

- Write Back

- Write Through

- Prefetching / Readahead

Caching Problems

- Larger Caches have higher hit-rate but also higher latency

- Multi Level Caches

- Data may become incoherent particularly in multiprocessor systems –> Cache Coherency Protocol

- Write Propagation

- Transaction Serialization (Reads/Writes to a memory location must be seen in the same order by all processors)

Storage Technologies - Why Caching Works –> Locality of reference

- Memory References tend to cluster.

- Program execution is sequential

- Instructions tend to be localized to a few procedures

- Iterative constructs consist of a small number of instructions

- Processing of data structures, like arrays or sequences

- Spatial locality

- Execution involves a number of memory locations that are clustered

- Acessing data locations sequentially

- Spatial locality can be exploited by using large cache blocks and prefetching

- Temporal locality

- Execution involves memory locations that have been accessed recently

- Temporal locality can be exploited by keeping recently used values in cache

- Locality of reference enables us to do caching and prefetching

- It also enables us to do branch prediction and other things, but this goes beyond the scope of this course

Caching - Example

int sizeX = 2000; int sizeY = 1000; int array[sizeY][sizeX]; // Fill the array with some data fill_buffer(&array); // Now run through the array and do something with the elements // This runs slow in C for (int x=0; x<sizeX, x++) { for (int y=0; y<sizeY; y++) { array[y][x] = x+2000*y; } } // This runs fast in C for (int y=0; y<sizeY, y++) { for (int x=0; x<sizeX; x++) { array[y][x] = x+2000*y; } }

Memory Hierarchy - Recap

- Problem: A CPU waiting for data can’t do any work

- Low Memory Access Times are crucial

- Solution: Caching/Prefetching algorithms.

- Works well with sequential data / local data access patterns

- Won’t work with totally random access patterns (Locality)

- As one goes down the hierarchy

- Increasing access time

- Decreasing throughput

- Also known as “Von Neumann Bottleneck”

Storage Technologies (I)

- I/O Devices

- Human Readable

- Machine Readable

- Communication

- Differences in

- Data Rate

- Application

- Complexity of Control

Storage Technologies (II)

- Across the different storage technologies, these relationships seem to hold:

- Faster access time, greater cost per bit

- Greater capacity, smaller cost per bit

- Greater capacity, slower access speed

Storage Technologies - Device Characteristics (I)

- Performance

- Access Time / Latency

- Throughput

- Capacity

- Raw capacity

- Storage density

- Volatility / Differentiation

- Dynamic Memory (periodic refreshes required)

- Static Memory

Storage Technologies - Device Characteristics (II)

- Mutability

- Read/Write

- Read Only

- Slow Write, Fast Read (e.g. SMR Discs)

- Accessibility

- Random Access

- Sequential Access

- Adressability

- Location addressable

- File addressable

- Content addressable

Sequential I/O vs. Random I/O

- Sequential I/O

- Writing / Reading contiguous large chunks of data (Chunk Size >= 10E6 Bytes)

- Usually the fastest way to read data from storage devices

- Not always easily applicable to a problem

- Random I/O

- Writing / Reading small chunks of data to / from random locations (Chunk Size ⇐ 10E4 Byte)

- Slowest way to read data from storage devices

- Magnitude of the slow-down depends on the underlying hard- and software (e.g. Tape-Drives vs. Flash-Drives)

Hard-Drives Overview (I)

- Invented in the mid 50s by IBM

- The first IBM drive stored 3.75 MB on a stack of 50 discs

- Had the size of two refrigerators (1.9 m³)

- Became cheap / mainstream in the late 80s

- Today one 3.5“ drive can hold up to 14TB of data

- Density ~ 1.5 Tbit / in²

- With future technologies even higer data densities may become possible

- Filling disks with helium

- Shingled Magnetic Recording

- Heat Assisted Magnetic Recording

- Interface

- Serial Attached SCSI (SAS)

- SATA

- NVMe

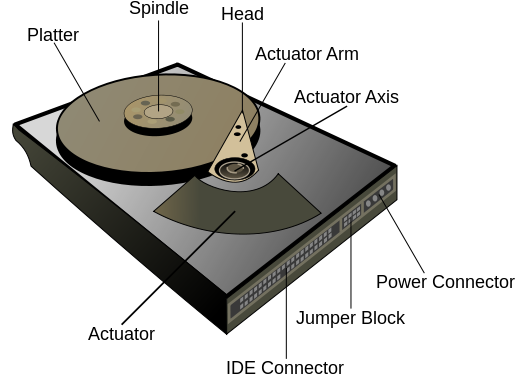

Hard-Drives Overview (II)

- Video of operating harddisk on wikipedia: https://upload.wikimedia.org/wikipedia/commons/c/c5/HardDisk1.ogv

Hard-Drives Characteristics

- Seek Time

- The time it takes to move the head to the correct track

- Rotational Delay

- The time it takes until the platter rotates to the correct position ( ~2ms @ 15’000 rpm)

- r… rotation speed in revolutions per second

- $T_{rdelay} = 1/2r$

- Transfer time calculation

- T… Transfer Time

- b… Number of bytes to be transferred

- N… Number of bytes per track

- r… rotation speed in revolutions per second

- $T = b/rN$

- Average Access Time

- $T_{access} = T_{seek} + T_{rdelay} + T_{transfer}$

Hard-Drives Sequential Access Example

- Do not rely on average values!

- Given a Harddisk with

- Rotational speed of 7’500 rpm

- 512 byte sectors

- 500 sectors per track

- We read a file that is stored on 5 adjacent tracks in 2500 sectors (1.28 MBytes)

- Average seek… 4ms

- Rotational delay… 4ms

- Read 500 sectors… 8ms

- We need to do this 5 times (1 for each track), but because of sequential organization we can skip the seek time for the consecutive tracks

- $T_{total} = 16 + (4 * 12) = 64 ms$

Hard-Drives Random Access Example

- Given a Harddisk with

- Rotational speed of 7’500 rpm

- 512 byte sectors

- 500 sectors per track

- We read a file that is distributed randomly over 2500 sectors of the disk

- Average seek… 4ms

- Rotational delay… 4ms

- Read 1 sector… 0.016ms

- We need to do this 2500 times with a seek after each sector

- $T_{total} = 2'500 * 8.016 = 20'040 ms = 20.04s$

- Slowdown of nearly 3 orders of magnitude with the same average values

- Do not rely on average values!

Quo vadis Hard-Drives

- Current/Future Trends

- Helium filled drives

- Shingled Magnetic Recording

- Heat assisted recording

(NVMe) Solid State Drives (I)

- NAND Flash Memory

- Lower cost compared to DRAM

- No refresh cycles / external PSU needed to retain the data compared to DRAM

- Uses less space compared to memory based on NOR gates

- No random access (Cells are connected in series to sectors)

- Key components

- Controller

- Memory

- New Interfaces

- SATA Express

- NVM Express

(NVMe) Solid State Drives (II)

- NAND Flash Memory

- Single Level Cell (SLC)

- Multi Level Cell (MLC)

- SLC

- 1 bit per cell

- ~10 times longer life than MLC

- Lower latency than MLC

- MLC

- multiple bits per cell

- Lower production cost

(NVMe) Solid State Drives - Memory (I)

- NAND Flash Memory

- Is composed of one or more chips

- Chips are segmented into planes

- Planes are segmented into thousands (e.g. 2048) of blocks

- And 1 or 2 registers of the page size for buffering

- A Block usually contains 64 to 128 pages

- Each page has a data part (few KBytes)

- and a small metadata area (e.g. 128 bytes) for Error Correcting Code

- Exact specification varies across different memory packages

(NVMe) Solid State Drives - Memory (II)

- Read

- Is performed in units of pages

- 1 page takes approx. 10 µs (SLC) up to 100 µs (MLC) to read

- Write

- Is performed in units of pages

- Some controllers support sub-page operations

- Pages in one block must be written sequentially

- A page write takes approximately 100 µs (SLC) up to 900µs (MLC)

- Block must be erased before being reprogrammed

(NVMe) Solid State Drives - Memory (III)

- Erase

- Must be performed in block granularity (hence the term erase-block)

- Can take up to 5 ms

- Limited number of erase cycles

- SLC: 100’000

- MLC: 10’000

- Some flash memory is reserved to replace bad blocks

- Controller takes care of wear leveling

(NVMe) Solid State Drives - Controller (I)

- Higher complexity of access compared to hard-disks

- High performance SSDs consists of hundreds of dies with parallel communication paths

- Data cells may wear out and become bad

- Erase size != Page size

- We need a controller to handle this:

- Does the initial setup

- Format the memory

- Mark bad blocks

- Moves data and picks blocks so that single cells don’t wear out

- Parallelizes read/write/erase operations on many dies

- Adresses in Blocks/Pages

- Flash Transition Layer and Logical to Physical Mapping

- Garbage Collection

(NVMe) Solid State Drives - Speed (I)

- Besides the additional complexity, SSDs are much faster than traditional disks

- Read Latency is in the order of few microseconds (We had milliseconds with HDDs)

- No need to move the head around

- But because of the arrangement in blocks and pages, sequential access is still faster

- More IOPS because of the lower latency

- Needs more CPU utilization to get full throughput

- Needs some pressure (multiple calls) to fully parallelize read/write calls

- This is also called ‘Queue Depth’

(NVMe) Solid State Drives - IOPS vs. Throughput

- Traditional discs have been measured in terms of throughput

- SSDs are sometimes measured in terms of IOPS (Input- Output- Operations per Second)

- The following equation holds (if there is no controller overhead like erasing, garbage collection, etc.):

- $Throughput = IOPS * Blocksize$

- Where blocksize can be chosen freely

- So if we know the blocksize that was used in benchmarking…

- We can calculate IOPS from throughput and vice versa

- (If we assume that the disk was empty at the beginning of the benchmark and no additional controller overhead was involved)

- We now even have a base to calculate which blocksize is at least necessary to get full write throughput

(NVMe) Solid State Drives - Speed (II)

- Given an Intel DC P3700 SSD with a capacity of 2 TB, specification says:

- Sequential Read 450’000 IOPS with 2800MB/s of max throughput

- $2'800'000'000 Bytes = 450'000 * x$

- $x = 6222 Bytes ~ 6KByte$

- So a blocksize of 8 KByte is a good starting point to try to get full throughput

- Random Reads with a page size of 8KByte should work well on this device and we should get the full throughput of 2800MB/s

- How would a traditional HDD perform under these conditions?

(NVMe) Comparison SSD vs. HDD

- In the slide before, we proposed that on our SSD with a block size of 8 KByte will lead to a throughput of 2800MB/s

- Let’s try this with a HDD

- Rotational speed of 7’500 rpm

- 512 byte sectors

- 500 sectors per track

(NVMe) Comparison SSD vs. HDD

- We read a file that is distributed randomly over 2500 sectors of the disk in blocks of 8KByte (Blocks of 16 sectors)

- Average seek… 4ms

- Rotational delay… 4ms

- Read 16 sectors… 0.256ms

- We need to do this 156.25 times with a seek after every block of 16 sectors

- $T_{total} = 156.25 * 8.256 = 1290 ms = 1.290 s$

- We transferred 156.25 * 8 KBytes = 1250 KBytes in 1.290 s

- This equals ~ 1 MByte per second (SSD 2800 MByte per second)

- With the same block size our benchmark will run ~2800 times faster on SSD than on HDD

- When utilised in sequential form, HDDs are still 20-30 times slower than high performance SSDs

(NVMe) Solid State Drives - Speed (II)

- $Transfertime = T_{controller} + T_{transfer}$

Quo vadis Solid State Drives

- Current / Future Trends

- Flash Memory Market surpassed DRAM Market in 2012

- Density of Flash Memory surpassed Hard Drive density in 2016

- In Q4 2016 45 million SSDs with a total capacity of 16 Exabyte were delivered to customers

- Market for HDDs is significantly bigger than for SSDs

- New memory technologies (e.g. Intel/Micron 3DXPoint)

- Intel Optane Memories

- Things to consider

- Will SSDs replace all HDDs in the future?

- All Flash SDS

- Storage as Memory

- Everything except archive

- What use cases remain for HDDs?

Magnetic Tapes (I)

- Have been in use for data storage since the 50’s

- Main storage medium in some early computers

- Capacity:

- 1950’s: ~ 1 MByte

- 1980’s: ~ 100 MByte - 1 GByte

- 1990’s: ~ 10 - 100 GByte

- 2000’s: ~ 100 GByte - 1 TByte

- Now: >10 TByte

- Future: Going up to 200 TByte per Tape seems possible

- Real tape capacity is usually 1/2 of the given capacity

- A compression ratio of 2:1 is quietly assumed

Magnetic Tapes - Characteristics (I)

- Tape width

- Half inch has been the most common width

- Recording Method

- Linear

- Linear Serpentine

- Scanning (writing across the width of the tape)

- Helical Scan (Short, dense, diagonal tracks)

- Block Layout

- Data is written in blocks, each block is a single operation

Magnetic Tapes - Characteristics (II)

- Access

- Sequential Access

- Index database or Tape Marks

- Compression

- Data is usually compressed when written on tape

- Encryption

- Data encryption is possible and standardised

- Encryption needs to be done after compression

- Why?

Magnetic Tapes - Characteristics (II)

- Access

- Sequential Access

- Index database or Tape Marks

- Compression

- Data is usually compressed when written on tape

- Encryption

- Data encryption is possible and standardised

- Encryption needs to be done after compression

- Encrypted data should look like random noise

- If your encrypted data is compressible then your encryption is flawed

Magnetic Tapes - LTO

- Linear Tape Open

- Open standard to store data on magnetic tapes

- Developed in the 90’s

- Eigth Generation LTO was released in 2017

- LTO-8 State of the art

- 12 TByte raw capacity (uncompressed)

- 360 MByte/s max uncompressed speed

- Compression ratio 2.5:1

- Supports encryption

- Supports WORM (Write once read many)

Magnetic Tapes - No random I/O

- The loading and winding of the tape takes a long time (up to many seconds)

- Tape moves at a constant speed

- Modern tape machines can move at 1/2 or 1/4 speed

- Shoe shining happens when there is not enough data to write

- Wears out the tape

- Doing random reads wears out the tape

- Expected durability of a tape is about 10^4 end-to-end passes

Memory Hierarchy - cont’d

- With the given storage technologies (Flash, HDD and Tape) we can refine our Memory hierarchy

- Using Flash Memories for Caching and Metadata

- Using HDDs as online data storage

- Using Tapes as offline data storage

- With the concept of locality and the right applications this will speed up our storage stack

- Recently random accessed files and Metadata that fits on the SSDs will be stored on the SSDs

- Recently sequential acessed files will be moved to the HDDs (They are fast enough when used in a sequential way)

- Rarely acessed files go to the tape machine

- We could even link these files transparently into the file system, so the user doesn’t have to know anything about where his data is located

Multi Tiered Storage Systems

- Different Tiers of Storage e.g.

- Tier 1: SSDs

- Tier 2: SATA HDDs

- Tier 3: Tape Storage

- But there’s no free meal

- We need to store data about where our data is stored (More on metadata later on) –> Memory Overhead

- If a user accesses a file, we need to check where the file is –> Processing Overhead

- In the worst case we have to copy it from tape to the disk

- which means loading the right tape and winding to the correct position

- which also means that the user might think, that the storage is very slow today

Gaining speed and redundancy with RAID

- RAID - Redundant Array of Independent (Inexpensive) Discs

- Distribute data across several drives

- Add parity calculation or mirroring for redundancy

- Different Techniques / RAID Levels available

- RAID 0, 1, 5, 6, 10, 01, …

- Several Discs in a RAID are called a RAID array

RAID - Striping and Mirroring

- RAID Level 0

- Data is striped across drives without redundancy

- Failure of one disc leads to loss of all data in the array

- Speedup is proportional to the number of discs

- RAID Level 1

- Data is mirrored across discs (usually 2, but more are possible)

- Failure of a disc involves no loss of data

- Read calls can be parallellized to multiple discs

- No speedup for write calls

RAID - Parity

- RAID Level 5

- Data is striped across drives, parity is calculated (XOR) and stored

- Block level striping

- Failure of one disc can be compensated

- Failure of more than one discs leads to data loss

- Considered obsolete nowadays, because of long rebuild times

- Use RAID-6

- RAID Level 6

- Data is striped across drives, parity is calculated and stored

- 2 different syndromes are computed to allow the loss of up to two drives

- Additional computation needed (no simple XOR for the second syndrome)

RAID - Other Levels

- “Hybrid”-RAID

- Mirroring between SSD and HDD (special controller needed)

- Nested-RAID

- RAID-10

- Stripe of Mirrors

- RAID-01

- Mirror of Stripes

- Most probably RAID-10 is the better choice than RAID-01

- Improved fault tolerance

But where are the levels 2 - 4 ?

- RAID2 - RAID4 are obsolete

- RAID2

- Bit level striping with dedicated parity disc

- RAID3

- Byte level striping with dedicated parity disc

- RAID4

- Block level striping with dedicated parity disc

File Management

- Abstraction of the secondary storage

- After all we don’t want to cope with the internals of storage devices

- Abstraction to Files / Directories –> File System

- Files

- Long-term existence

- Sharable between processes

- Structure

- Files can have internal structure (e.g. databases)

- Operations

- Create

- Delete

- Open

- Close

- Read

- Write

File Management - Inodes (I)

- Besides the file content, filesystems rely on data structures about the files

- Also called Metadata

- Inodes store information about files

- Type of File (File, Directory, etc)

- Ownership

- Access Mode / Permissions

- Timestamps (Creation, Access, Modification)

- Last state change of the inode itself (status, ctime)

- Size of the file

- Link-Counter

- One or more references to the actual data blocks

File Management - Inodes (II)

- Most filesystems use a fixed amount of inodes

- Ext2 filesystem can address up to 12 blocks per inode

- If more blocks are needed for a file a new inode is allocated (indirect block)

- Ext2 allows up to triple nested indirect blocks which leads to a maximum size of 16 GiB to 4 TiB depending on block-size

- Appending to files and writing new files is not possible when inodes run out

- Depending on the amount of files/directories a filesystem can use up to 10% of its capacity for meta information

- Show Inode Number with ‘ls -i’

- Show content with stat

File Management - Inodes (III)

* sreinwal@rs ~/Work/vboxshared/sreinwal/pandoc/vsc-markdown/parallell-io/02_storage_technologies $ stat storage_technologies.md * File: storage_technologies.md * Size: 30837 Blocks: 64 IO Block: 4096 regular file * Device: fd04h/64772d Inode: 25373850 Links: 1 * Access: (0644/-rw-r--r--) Uid: ( 1001/sreinwal) Gid: ( 1001/sreinwal) * Access: 2017-11-28 14:25:11.191823770 +0100 * Modify: 2017-11-28 14:23:53.482827520 +0100 * Change: 2017-11-28 14:25:20.416823325 +0100 * Birth: -

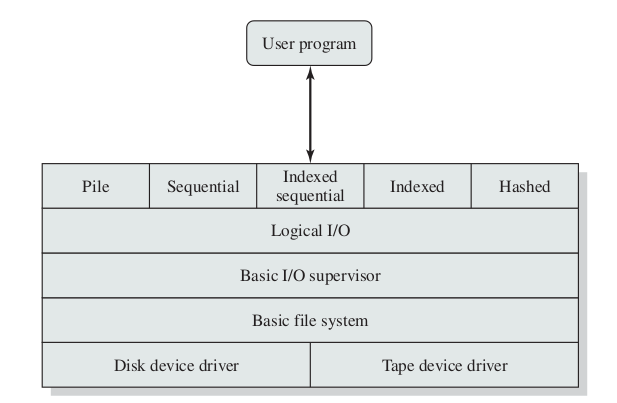

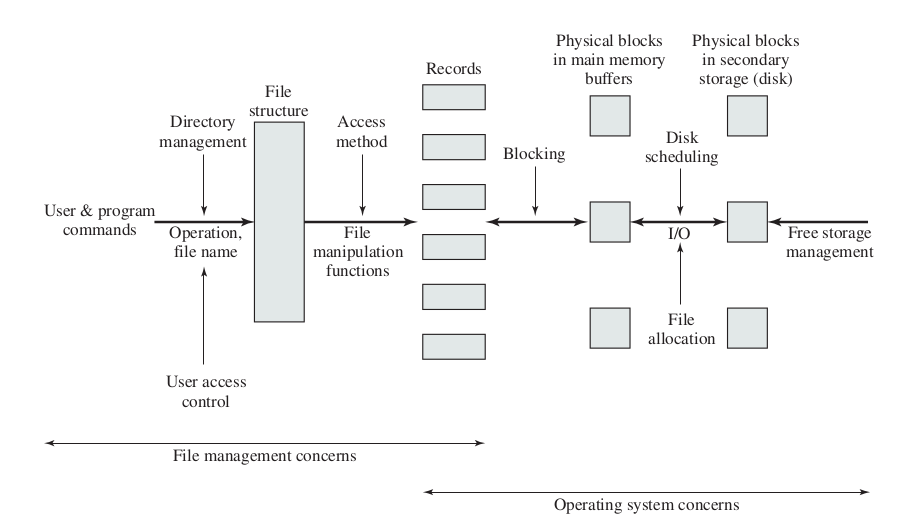

File System - Organization (I)

File System - Organization (II)

- Device Drivers / Hardware Layer

- Dealing with I/O operations on the devices

- Usually considered part of the operating system

- Basic File System / Physical IO Layer

- Deals with blocks of data, that are exchanged with device drivers

- Manages placement of blocks and the buffering of these blocks in main memory

File System - Organization (III)

- Basic I/O supervisor / Basic I/O Layer

- Responsible for file I/O and its termination

- Control structures are maintained to deal with device I/O, scheduling and file status

- Disk-Scheduling to optimize performance

- Logical I/O Layer

- Provides general purpose file I/O

- Maintains basic data about files

- Enables users/applications to access files

File System - Organization (IV)

Unix File Management

- 6 different types of files

- Regular

- Contains data. Simple stream of bytes.

- Directory

- Contains list of file names plus pointers to their index nodes

- Special

- Map physical devices to files (e.g. /dev/sda for the first SAS/SATA disc)

- Named pipes

- Buffers received data

- Enables interprocess communication

- FIFO (First-In-First-Out)

- Links

- Alternative filenames for existing files

- Symbolic Links

- Basically an ordinary file that contains the name of the file it is linked to

Linux Virtual File System

- Different File Systems under Linux

- ext2, ext3, ext4, reiserfs, xfs, nfs, …

- VFS provides a virtual file system

- Presents a single, uniform interface to user processes

- Mapping between VFS and underlying file system

Storage Networks - NAS vs. SAN

- NAS - Network Attached Storage

- Centralized File Storage

- Exporting File-Systems for clients to mount

- Connected via Network (Ethernet, Infiniband, …)

- File System Layers of the server are used

- User/Access Management at the server

- SAN - Storage Area Network

- Centralized Device Array

- Exports Block devices

- Connected via Fibrechannel

- ISCSI Protocol, NVMoF Protocol

- Client is responsible for handling file systems on the block device

- No User/Access Management at block level

Distributed File Systems - NFSv4

- NFSv4

- Network File System Version 4

- Client Server Architecture

- Provides a standardized view of its local filesystem

- Allowing a heterogenous collection of processes to share a common filesystem

- Allows sharing of all local filesystems as well as other network filesystems

- VFS layer comes in handy here

- NFS4 uses Remote File Service / Remote Access Model

- File stays on the server

- Locking Mechanisms (Stateful Model)

- NFSv2 and NFSv3 were stateless which led to some problems (Separate Locking daemon needed)

- Opposed to the Upload/Download Model

- Client downloads file, does operations locally, reuploads the file

Client Side Caching - Asynchronous I/O

- Synchronous or Blocking I/O

- Process issues an I/O call to the operating system and waits for the operation to complete

- Leads to intervals where the CPU is completely idle waiting for the operation to finish

- Asynchronous or Non-Blocking I/O

- As we have seen, I/O Operations can be very slow

- Client calls the operating systems for a read/write

- Continues processing

- Data is read/written in the background

- Operating system informs the process when the file is written

- Client side caching in NFS

- async mount option

- data is cached on the client

Server Side Caching - NFSv4

- Server Side Caching

- Server replies to requests before they have been committed to stable storage

- Usually less error prone than client side caching, because servers are usually in a more controlled environment than clients

- e.g. Uninterruptible Power Supply, BBU Units on RAID controllers, etc.

- Problems arise

- Server crashes before data is transferred from client to server

- Client crashes before it transfers data to server

- Network connection breaks

- Power outages

- etc…

Parallell File Systems - BeeGFS

- FhGFS / BeeGFS Parallell File System

- Distributes data and metadata across serveral targets

- Stripes data across several targets

- Huge speedup compared to single server appliances

- Scalability and Flexibility were key aspects for the design

BeeGFS - Management Server

- Management Server

- Exactly 1 MS per filesystem

- Keeps track of metadata and storage targets

- Keeps track of connected clients

- Tags targets with labels

- Normal

- Low

- Critical

- Not involved in filesystem operations

BeeGFS - Metadata Server

- Holds the Metadata information of the file system

- Should be stored on fast storage devices (e.g. Flash Memories)

- Metadata accesses are mostly tiny random accesses

- Ext4 seems to be the fastest filesystem to store beegfs metadata

- Additional servers can be added on demand

- Each server holds exactly one metadata target

- TargetChooser

- Chooses targets according to the configuration when a user writes a file

- Holds metadata information

- File / Directory information

- Creation date

- Striping pattern

- Affected Storage Targets

- Permissions

- ACLs

- Extended attributes

- …

BeeGFS - Metadata Server

- Entry point of the file system ‘/’ is located on MDS #1

- Defined entry point in the directory tree

- Additional directories are assigned random to other MDS

- Leads to effective load balancing on the metadataservers

- As long as there are significantly more directories than metadata targets

- Client walks down the directory tree to find the responsible MDS for a directory

- Handling Meta-Data requests is creating some load on the cpu cores

- For a small number of requests higher clock speeds lead to less latency

- For a high number of requests multiple CPU Cores are needed

- Metadata handling will create processes which are waiting for data

- CPU overcommitting could increase performance

BeeGFS - Object Storage Server

- Holds the file contents on Object Storage Targets (OSTs)

- Underlying devices / filesystem can be chosen freely

- As long as the file system is POSIX compliant

- File contents can be striped over multiple OSTs

- One server can handle multiple OSTs

- A typical OST consists of 6 - 12 drives running in RAID6

- Number of threads influence the number of requests that can be put on disk

- Higher number of threads will lead to randomization of sequential data

- We have to live with that to a certain extent in multi user environments

- Chunksize sets the amount of data stored on a OST per stripe

- OSTs can be added on demand. But a rebalancing of the file system might be needed to gain full performance

BeeGFS - Clients

- Clients can be added on demand

- Thousands of clients is no problem as long as there are enough MDTs and OSTs available

- tuneFileCacheType - Client caching is supported

- Buffered Mode

- Uses a pool of static buffers for write-back and read-ahead

- Caches up to a few hundred kilobytes of a file

- Default Mode

- Native Mode

- Uses the linux kernel page cache

- May cache up to multiple gigabytes of data (depending on the available RAM)

- Experimental / Work in progress

Theres much more

- Different Storage Devices

- Optical

- Laserdisc / CD-Rom / DVD / Blu-Ray

- Magnetic

- Floppy / ZIP Drives

- Magneto-Optical

- Minidisc (Sony)

- Different Parallell File Systems

- GPFS

- GlusterFS

- Lustre

- Different use-cases

- Storage Area Network

- Different architectures

- HP - The Machine

Storage Technologies - Bibliography

- Understanding Intrinsic Characteristics and System Implications of Flash Memory Based Solid State Drives, Cheng et al., 2009

- Operating Systems - Internals and Design Principles 7th Edition, Stallings, 2012

- Distributed Systems - Principles and Paradigms 2nd Edition, Tanenbaum et al., 2007

Storage Technologies - The End

- Remember

- Random I/O is magnitudes slower than sequential I/O

- Sequential I/O can even be done in parallell from multiple nodes to further improve the throughput

- Highly parallellized Random calls will result in degraded storage performance for ALL users and can even lead to an unresponsive storage.

- Don’t do random I/O on storage devices

Thank you for your attention