This version (2020/10/20 09:13) is a draft.

Approvals: 0/1

Storage Infrastructure of VSC2 and VSC3

- Motivation

- Know how an HPC storage stack can be integrated into a supercomputer

- and gain some additional insights

- Know the hard- and software to harness its full potential

VSC2 - Basic Infos

- VSC2

- Procured in 2011

- Installed by MEGWARE

- 1314 Compute Nodes

- 2 CPUs each (AMD Opteron 6132 HE, 2.2 GHz)

- 32 GB RAM

- 2x Gigabit Ethernet

- 1x InfiniBand QDR

- Login Nodes, Head Nodes, Hypervisors, etc…

VSC2 - File Systems

- File Systems on VSC2

- User Homes (/home/lvXXXXX)

- Global (/fhgfs)

- Scratch (/fhgfs/nodeName)

- TMP (/tmp)

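- Change file systems with ‘cd’

- cd /global # Go into Global

- cd ~ # Go into Home

- Use your application's settings to set input/output directories

- Redirect the output (stdout and stderr) into your home with ‘./myApp.sh > ~/out.txt 2>&1’ (the order matters: ‘2>&1 > ~/out.txt’ would still send stderr to the original output, not to the file)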
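A minimal sketch of why the order of the redirections matters. `demo_app` is a hypothetical stand-in for `./myApp.sh`, and a temporary directory replaces `~` so the sketch is safe to run anywhere.

```shell
#!/bin/sh
# Sketch: capture both stdout and stderr of a program in one file.
# demo_app is a hypothetical stand-in for ./myApp.sh; OUTDIR stands in for ~.
demo_app() {
    echo "result line"          # written to stdout
    echo "warning line" >&2     # written to stderr
}

OUTDIR=$(mktemp -d)

# Correct order: first point stdout at the file, then duplicate stderr onto it.
demo_app > "$OUTDIR/out.txt" 2>&1

# Wrong order: stderr is duplicated onto the *old* stdout (terminal or job
# log) before stdout is redirected, so only stdout ends up in the file.
demo_app 2>&1 > "$OUTDIR/out_wrong.txt"
```

Redirections are processed left to right, which is why `> file 2>&1` and `2>&1 > file` behave differently.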

VSC - Fileserver

VSC - Why different Filesystems

- Home File Systems

- BeeGFS (formerly FhGFS) was very slow for small-file I/O

- This led to severe slowdowns when compiling applications and when working with small files in general

- We do not recommend using small files; use big files whenever possible. However, users still want to:

- Compile Programs

- Do some testing

- Write (small) Log Files

- etc…

VSC - Why different Filesystems

- Global

- Parallel Filesystem (BeeGFS)

- Perfectly suited for large sequential transfers

- TMP

- Uses the main memory (RAM) of the node, up to half of the total memory

- Users access it like a file system but get the speed of byte-addressable memory

- Random I/O is blindingly fast

- But it comes at the price of main memory

- If you are not sure how to map your file from GLOBAL/HOME into memory, just copy it to TMP and you are ready to go
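The "copy it to TMP" workflow can be sketched as stage in, work, stage out. This is an illustration under assumptions: `SRC_DIR` is a hypothetical stand-in for a directory on GLOBAL or HOME, `sort` stands in for your application, and the work directory is created under `$TMPDIR` (falling back to `/tmp`), which on the VSC nodes is the RAM-backed TMP described above.

```shell
#!/bin/sh
# Sketch: stage an input file into TMP, work on it there, copy the result back.
# SRC_DIR is a hypothetical stand-in for a directory on GLOBAL or HOME.
SRC_DIR=$(mktemp -d)
printf 'b\na\nc\n' > "$SRC_DIR/input.txt"    # toy input data

# Private work directory under TMPDIR (falls back to /tmp).
WORK=$(mktemp -d "${TMPDIR:-/tmp}/job.XXXXXX")
trap 'rm -rf "$WORK"' EXIT                   # free the RAM when the script ends

cp "$SRC_DIR/input.txt" "$WORK/"                 # 1. stage in
sort "$WORK/input.txt" > "$WORK/result.txt"      # 2. random-access work in TMP
cp "$WORK/result.txt" "$SRC_DIR/"                # 3. stage out before TMP is wiped
```

Copying the results out explicitly matters because TMP is cleared after the job.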

VSC - Why different Filesystems

- Small I/Os

- Random Access –> TMP

- Others –> HOME

- Large I/Os

- Random Access –> TMP

- Sequential Access –> GLOBAL
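The decision table above can be written down as a small shell function. `pick_fs` is a hypothetical helper for illustration, not a tool installed on the VSC; it only encodes the four rules listed on this slide.

```shell
#!/bin/sh
# Sketch: the I/O size / access pattern table as a shell function.
# pick_fs is hypothetical. Usage: pick_fs <small|large> <random|sequential>
pick_fs() {
    case "$1:$2" in
        small:random|large:random) echo TMP ;;     # random access -> TMP
        small:*)                   echo HOME ;;    # other small I/O -> HOME
        large:sequential)          echo GLOBAL ;;  # large sequential -> GLOBAL
        *) echo "usage: pick_fs <small|large> <random|sequential>" >&2
           return 1 ;;
    esac
}

pick_fs large sequential   # prints GLOBAL
```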

VSC2 - Storage Homes

- VSC2 - Homes

- 6 File Servers for Home

- 2 CPUs each (Intel Xeon E5620 @ 2.40 GHz - Westmere)

- 48 GB RAM

- 1x InfiniBand QDR

- LSI RAID Controller

- Homes are stored on RAID6 volumes

- 10+2 Disks per Array. Up to 3 Arrays per Server (Depends on usage)

- Exported via NFS to the compute nodes (No RDMA)

- Each project resides on 1 server

VSC2 - Storage Global

- VSC2 - Global

- 8 File Servers

- 2 CPUs each (Intel Xeon E5620 @ 2.40 GHz - Westmere)

- 192 GB RAM

- 1x InfiniBand QDR

- LSI RAID Controller

- Each OST (object storage target) consists of 24 disks (RAID5: 22 data + 1 parity + 1 hot spare)

- One Metadata Target per Server

- 4x Intel X25-E 64 GB SSDs

- Up to 6000 MB/s throughput

- ~ 160 TB capacity

VSC2 - Storage Summary

- 14 Servers

- ~ 400 spinning disks

- ~ 25 SSDs

- 2 Filesystems (Home+Global)

- 1 Temporary Filesystem

VSC3 - Basic Infos

- Procured in 2014

- Installed by CLUSTERVISION

- 2020 Nodes

- 2 CPUs each (Intel Xeon E5-2650 v2 @ 2.60 GHz)

- 64 GB RAM

- 2x InfiniBand QDR (Dual-Rail)

- 2x Gigabit Ethernet

- Login Nodes, Head Nodes, Hypervisors, Accelerator Nodes, etc…

VSC3 - File Systems

- User Homes (/home/lvXXXXX)

- Global (/global)

- Scratch (/scratch)

- EODC-GPFS (/eodc)

- BINFS (/binfs)

- BINFL (/binfl)
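To see which of these file systems a given path lives on, `df` and `stat` can be combined. A sketch, assuming GNU coreutils (`stat -f -c %T` is GNU syntax); `fs_info` is a hypothetical helper, and paths that do not exist on the current machine are skipped.

```shell
#!/bin/sh
# Sketch: report mount point and file-system type for each path.
# fs_info is hypothetical; stat -f -c %T assumes GNU coreutils.
fs_info() {
    # df -P: POSIX format, one line per file system; field 6 is the mount point.
    mount_point=$(df -P "$1" | awk 'NR==2 {print $6}')
    fs_type=$(stat -f -c %T "$1")   # file-system type in human-readable form
    echo "$1: mounted at $mount_point, type $fs_type"
}

for p in "$HOME" /global /scratch /eodc /binfs /binfl; do
    if [ -e "$p" ]; then fs_info "$p"; else echo "$p: not present on this node"; fi
done
```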

VSC3 - Home File System

- VSC3 - Homes

- 9 Servers (Intel Xeon E5620 @ 2.40 GHz)

- 64 GB RAM

- 1x InfiniBand QDR

- LSI Raid Controller

- Homes are stored on RAID6 Volumes

- 10+2 Disks per Array. Up to 3 Arrays per Server (Depends on usage)

- Exported via NFS to the compute nodes (No RDMA)

- 1 Server without RAID Controller running ZFS

- Quotas are enforced

VSC3 - Global File System

- VSC3 - Global

- 8 Servers (Intel Xeon E5-1620 v2 @ 3.70 GHz)

- 128 GB RAM

- 1x InfiniBand QDR

- LSI RAID Controller

- 4 OSTs per Server

- 48 disks, organized as 4x (10 data + 2 parity)

- 1 Metadata Target per Server

- 2 SSDs each (RAID-1 / mirrored)

- Up to 20’000 MB/s throughput

- ~ 600 TB capacity
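A back-of-the-envelope check of the VSC3 Global numbers above, assuming the peak throughput is spread evenly over the 8 servers. The last line uses the standard 4x QDR InfiniBand signalling rate of 40 Gbit/s with 8b/10b encoding; it shows that one QDR link per server leaves some headroom above the per-server share.

```latex
\begin{aligned}
\text{data fraction per OST} &= \frac{10}{10+2} = \frac{5}{6} \approx 83.3\,\%\\
\text{disks in Global} &= 8 \times 48 = 384 \quad (8 \times 4 \times 10 = 320 \text{ data disks})\\
\text{throughput per server} &= \frac{20\,000~\text{MB/s}}{8} = 2\,500~\text{MB/s}\\
\text{QDR 4x data rate} &= 40~\text{Gbit/s} \times \tfrac{8}{10} = 32~\text{Gbit/s} = 4\,000~\text{MB/s}
\end{aligned}
```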

VSC3 - GPFS

- IBM GPFS - General Parallel File System / Elastic Storage / Spectrum Scale

- Released in 1998 as a multimedia file system (mmfs)

- Linux support since 1999

- Has been in use on many supercomputers

- Separate Storage Pools

- CES - Cluster Export Services

- Can be linked to a Hadoop cluster

- Supports

- Striping

- n-way Replication

- Raid (Spectrum Scale Raid)

- Fast rebuild

- …

VSC3 - EODC Filesystem

- VSC3 <–> EODC

- 4 IBM ESS Servers (~350 spinning disks each)

- Running GPFS 4.2.3

- 12 InfiniBand QDR Links

- Up to 14 GB/s sequential write and 26 GB/s sequential read

- Multi-tiered (IBM HSM)

- Least recently used files are written to tape

- A stub file stays in the file system

- Transparent recall on access

- Support for schedules, callbacks, migration control, etc.

- Sentinel Satellite Data

- Multiple petabytes

- Needs backups at different locations

VSC3 - EODC Filesystem

- VSC3 is a “remote cluster” for the EODC GPFS filesystem

- 2 Management servers

- Tie-Breaker disk for quorum

- No filesystems (only remote filesystems from EODC)

- Up to 500 VSC clients can use GPFS in parallel

VSC3 - Bioinformatics

- VSC3 got a “bioinformatics” upgrade in late 2016

- 17 Nodes

- 2x Intel Xeon E5-2690 v4 @ 2.60 GHz (14 cores each / 28 with hyperthreading)

- Each node has at least 512 GB RAM

- 1x InfiniBand QDR (40 Gbit/s)

- 1x Omni-Path (100 Gbit/s)

- 12 spinning disks

- 4 NVMe SSDs (Intel DC P3600)

- These Nodes export 2 filesystems to VSC (BINFL and BINFS)

VSC3 - Bioinformatics

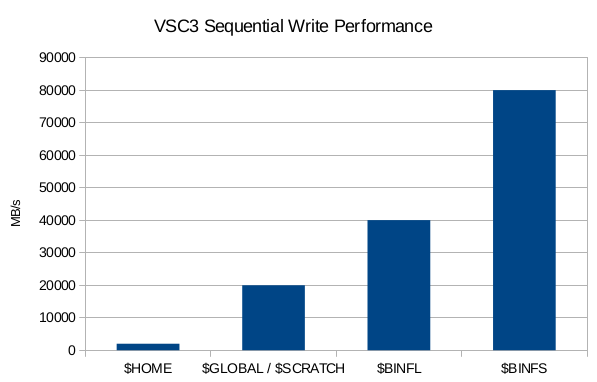

VSC3 - BINFL Filesystem

- Use for I/O intensive bioinformatics jobs

- ~ 1 PB Space (Quotas enforced)

- BeeGFS Filesystem

- Metadata Servers

- Metadata on Datacenter SSDs (RAID-10)

- 8 Metadata Servers

- Object Storages

- Disk Storages configured as RAID-6

- 12 Disks per Target / 1 Target per Server / 16 Servers total

- Up to 40 GB/s write speed

VSC3 - BINFS Filesystem

- Use for very I/O intensive jobs

- ~ 100 TB Space (Quotas enforced)

- BeeGFS Filesystem

- Metadata Servers

- Metadata on Datacenter SSDs (RAID-10)

- 8 Metadata Servers

- Object Storages

- Datacenter SSDs are used instead of traditional disks

- No redundancy. Treat it as (very) fast, low-latency scratch space: data may be lost after a hardware failure.

- 4x Intel P3600 2TB Datacenter SSDs per Server

- 16 Storage Servers

- Up to 80 GB/s via the Omni-Path interconnect

VSC3 - Storage Summary

- 33 Servers

- ~ 800 spinning disks

- ~ 100 SSDs

- 5 Filesystems (Home, Global, EODC, BINFS, BINFL)

- 1 Temporary Filesystem

Storage Performance

Temporary Filesystems

- Use for

- Random I/O

- Many small files

- Data gets deleted after the job

- Write Results to $HOME or $GLOBAL

- Disadvantages

- Disk space is consumed from main memory

- Alternatively, the mmap() system call can be used

- Keep in mind that mmap() loads lazily: pages are read only when they are first accessed

- Very small files waste main memory (memory-mapped files are aligned to the page size)
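To put a number on the page-size waste, the sketch below rounds file sizes up to whole pages. `mapped_bytes` is a hypothetical helper, and the one million 100-byte files are an illustrative workload, not a measurement. With 4 KiB pages the mapped total comes to about 4.1 GB for roughly 100 MB of actual data.

```shell
#!/bin/sh
# Sketch: memory footprint of memory-mapped files, which occupy whole pages.
# mapped_bytes is hypothetical; the workload below is illustrative only.
PAGE=$(getconf PAGESIZE)    # page size of this machine in bytes

mapped_bytes() {
    # Round $1 bytes up to the next multiple of the page size.
    echo $(( ($1 + PAGE - 1) / PAGE * PAGE ))
}

# 1,000,000 files of 100 bytes each ...
data=$(( 1000000 * 100 ))
# ... but each file needs at least one full page once mapped.
mapped=$(( 1000000 * $(mapped_bytes 100) ))
echo "data: $data bytes, mapped: $mapped bytes (page size $PAGE)"
```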

Addendum: Backup

- We keep some backups, but only for disaster recovery

- Systems (Node Images, Head Nodes, Hypervisors, VMs, Module Environment)

- via rsnapshot

- Home

- Home filesystems are backed up

- via a self-written, parallelized rsync script

- Global

- Metadata is backed up, but if a total disaster happens this won’t help.

- Although nothing has happened for some time, this is high performance computing and redundancy is minimal

- Keep an eye on your data. If it’s important, back it up yourself.

- Use rsync

Addendum: Big Files

- What does an administrator mean by ‘don’t use small files’?

- It depends

- On /global fopen –> fclose takes ~100 microseconds

- On VSC2 Home Filesystems > 100 Million Files are stored.

- Checking which files have changed takes more than 12 hours, without even reading file contents or copying anything.

- What we mean is: use reasonable file sizes according to your working set and your throughput needs

- Reading a 2 GB file from SSD takes less than one second. Files that are only a few MB in size will slow down your processing.

- If you need high throughput with a working set of >1 TB, a file size of >= 10^8 bytes (100 MB) is reasonable

- File sizes < 10^6 bytes (1 MB) are problematic if you plan to use many files

- If you want to do random I/O, copy your files to TMP.

- Storage works well when the blocksize is reasonable for the storage system (on VSC3 a few Megabytes are enough)

- Do not create millions of files

- If every user had millions of files we’d run into some problems

- Use ‘tar’ to archive unneeded files

- tar -cvpf myArchive.tar myFolderWithFiles/

- tar -cvjpf myArchive.tar.bz2 myFolderWithFiles/ # Uses bzip2 compression

- Extract with

- tar xf myArchive.tar # GNU tar detects compression automatically, e.g. tar xf myArchive.tar.bz2
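Before archiving, it can help to know how many small files a folder actually holds. The sketch below counts them with `find` and then bundles the folder with `tar`. `DIR` and `LIMIT` are hypothetical; `LIMIT` assumes the slide's "10E6 bytes" threshold means 10^6 bytes. gzip (`-z`) is used because it is installed almost everywhere; `-j`, as on the slide, selects bzip2 instead.

```shell
#!/bin/sh
# Sketch: count small files in a folder, then bundle the folder into one archive.
# DIR and LIMIT are hypothetical; LIMIT assumes "10E6 bytes" means 10^6 bytes.
WORKDIR=$(mktemp -d)
DIR="$WORKDIR/myFolderWithFiles"
LIMIT=1000000
mkdir -p "$DIR"

i=1
while [ "$i" -le 100 ]; do                  # 100 tiny demo files
    echo "sample $i" > "$DIR/file_$i.log"
    i=$((i + 1))
done

# -size -Nc: strictly smaller than N bytes ("c" selects byte units).
small=$(find "$DIR" -type f -size -"${LIMIT}c" | wc -l)
echo "files smaller than $LIMIT bytes: $small"

# One gzip-compressed archive instead of many small files.
# -C makes tar store paths relative to WORKDIR.
tar -czf "$WORKDIR/myArchive.tar.gz" -C "$WORKDIR" myFolderWithFiles

# Check the archive contents before removing the originals.
tar -tzf "$WORKDIR/myArchive.tar.gz" | head -n 3
```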

The End

Thank you for your attention